Problems with CentOs 7 and MM 0.9.52

01-23-2019 04:38 AM

Hi guys,

I used to run standalone MM 0.9.50 on CentOS 7 without problems. Last week I updated MM to 0.9.52 with the help of @lmori, and the process completed successfully. See ( https://live.paloaltonetworks.com/t5/MineMeld-Discussions/Updating-MineMeld-from-0-9-50-to-the-lates... ).

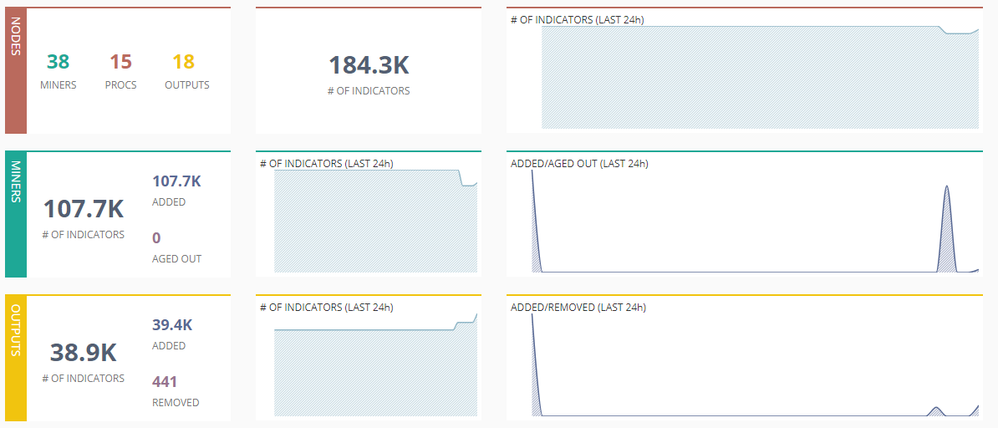

Since the update, however, my MM no longer works the same way. The dashboard shows that my miners are working fine, with more than 90K indicators, but almost none of them are available: fewer than 1K reach the outputs (see figure below).

Looking at the process in more detail, many nodes have the status "stopped". The number of indicators forwarded by the miners is high.

The number of indicators forwarded by the aggregators is almost the same.

But the number of indicators made available by the outputs is extremely low.

Has anybody experienced something similar? How did you deal with the problem?

Best Regards.

Accepted Solutions

04-23-2019 07:12 AM

Hi guys

This problem started when I was trying to update to version 0.9.52, but it took so long that I finally completed the process with version 0.9.60. To solve the problem with version 0.9.60, execute the following after the known basic steps:

ln -s /usr/lib64/python2.7/lib-dynload/_sqlite3.so /usr/local/lib/python2.7/lib-dynload/

This solves the problem of getting a "Bad Gateway" message in the MM WebGUI.
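The symlink step above can be made repeatable with a small script. This is only a minimal sketch, not part of MineMeld: the helper name `ensure_symlink` is hypothetical, and the two paths in the usage comment are the ones quoted in the post, so they may differ on other systems.

```python
import os


def ensure_symlink(source, target_dir):
    """Link `source` into `target_dir` under the same file name,
    like `ln -s source target_dir/`.

    Returns True if a link was created, False if something already
    exists at the link path (it is then left untouched).
    """
    link_path = os.path.join(target_dir, os.path.basename(source))
    if os.path.lexists(link_path):
        # A file or link is already there; do not overwrite it.
        return False
    os.symlink(source, link_path)
    return True


# Usage with the paths from the post (run as a user allowed to write there):
# ensure_symlink(
#     "/usr/lib64/python2.7/lib-dynload/_sqlite3.so",
#     "/usr/local/lib/python2.7/lib-dynload",
# )
```

Because the helper refuses to overwrite an existing entry, running it a second time is harmless.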

Best regards

01-23-2019 04:55 AM

Hi @danilo.souza,

could you try this:

- in /opt/minemeld/engine/core/minemeld/run open the file launcher.py

- at line 284 change

mbusmaster.wait_for_chassis(timeout=10)

into this

mbusmaster.wait_for_chassis(timeout=60)

and restart
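If the line number has shifted in your copy of launcher.py, the same change can be applied by matching the call instead of counting lines. The following is a sketch under that assumption; `bump_chassis_timeout` is a hypothetical helper, and only the call text `mbusmaster.wait_for_chassis(timeout=...)` comes from the post.

```python
import re

# Matches the call quoted in the post and captures the text around the number.
_TIMEOUT_CALL = re.compile(r"(mbusmaster\.wait_for_chassis\(timeout=)\d+(\))")


def bump_chassis_timeout(source, new_timeout=60):
    """Return (patched_source, replacements) with the timeout value replaced."""
    return _TIMEOUT_CALL.subn(r"\g<1>%d\g<2>" % new_timeout, source)


# Usage (path taken from the post; keep a copy of the original file first):
# path = "/opt/minemeld/engine/core/minemeld/run/launcher.py"
# with open(path) as handle:
#     patched, count = bump_chassis_timeout(handle.read())
# if count == 1:
#     with open(path, "w") as handle:
#         handle.write(patched)
```

Checking that exactly one replacement was made before writing the file guards against patching a version of launcher.py that looks different from the one discussed here.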

01-23-2019 06:08 AM

Hi @lmori

First I restarted just the engine through the web interface, but nothing changed. Then I restarted my server and got a few more indicators.

I still have many nodes with the status "stopped", and the number of indicators in the output still doesn't match.

What is this parameter?

mbusmaster.wait_for_chassis

Can it be changed even higher? What is the consequence?

01-29-2019 09:52 AM

02-04-2019 10:11 AM

Hi @lmori

I'm sorry to insist. I still have nodes with the status "stopped". I tried to find other evidence of the problem, but found nothing. Unfortunately, this has been happening since I upgraded to 0.9.52.

Did you have the opportunity to see my last reply (https://live.paloaltonetworks.com/t5/MineMeld-Discussions/Problems-with-CentOs-7-and-MM-0-9-52/m-p/2...)?

Best regards.

02-04-2019 11:24 AM

Our server team just upgraded my Minemeld to CentOS7 0.9.52 and it only lists about 29k indicators but only 43 total in the output. I have an Ubuntu 14.04 image running 0.9.52 and it has 231k indicators and 77k output.

We have never had CentOS running yet but that is our PROD operating system and they want us to use it. Right now I just have my test box feeding the PAN the EDL.

I tried bumping up to 60 on the timeout.

02-11-2019 03:52 AM

Hi @xhoms and @lmori

Apparently there is a problem with version 0.9.52 on CentOS. Are there any other known issues? Is it being addressed? It is impacting our environment. Do you advise rolling back to version 0.9.50? If so, how do we do it?

Best regards.

02-11-2019 04:24 AM

Hi @danilo.souza,

yes, I am looking into this. The problem seems to be related to RabbitMQ.

If you want to install the previous version, you can use the Ansible update procedure, but in the file roles/minemeld/tasks/core.yml change line 7 to look like this:

version: "0.9.50.post4"

02-11-2019 08:54 AM

That doesn't work either.

02-12-2019 02:49 AM

Hi @StephenBradley and @lmori

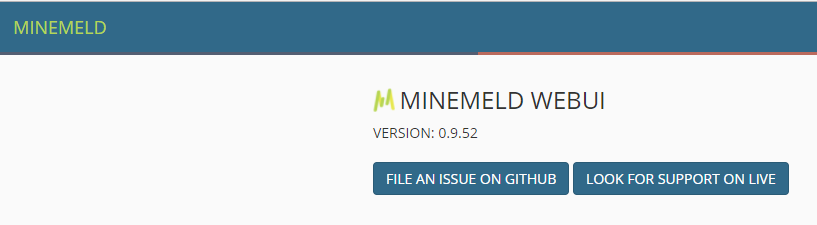

I can confirm it is extremely unstable. I tried rolling back to the previous version using the tips from @lmori in the previous post. At first it failed with a fatal error. After I enabled the extensions the error disappeared, but I'm still getting the "stopped" status for some nodes. The curious point is that, after rolling back, the MM WebGUI still shows version 0.9.52.

Did you experience something similar, @StephenBradley?

Best regards.

02-21-2019 11:15 AM

Hi @lmori

I'm having some issues with the update of MM to 0.9.52, as reported previously. Since I followed the procedure you gave me to roll back to 0.9.50, my machine has not worked properly. First of all, it still shows that I'm using version 0.9.52, as I showed in my last post.

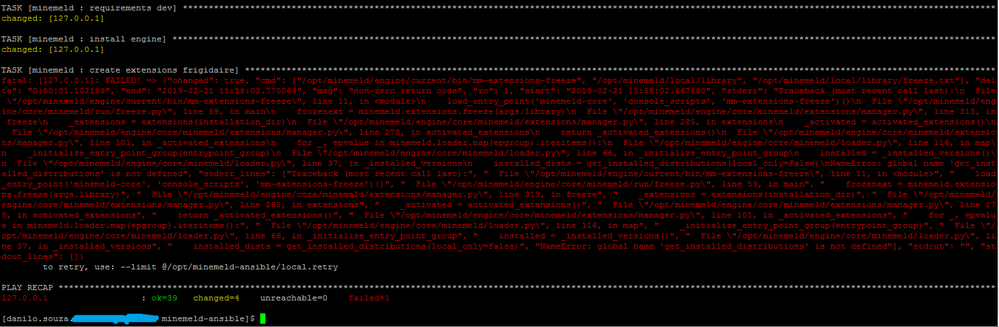

I tried to repeat the procedure and now I get an error.

Could you tell me the best practice for getting my MM back to work? It is really affecting (degrading) my experience with MM.

Best regards.

02-27-2019 11:47 PM

Hi @danilo.souza,

something is wrong in the downloaded code, it seems to be pretty old.

Please do this:

- remove your current minemeld-ansible directory and /opt/minemeld/engine, /opt/minemeld/www and /opt/minemeld/prototypes directories

- copy /opt/minemeld/local contents somewhere for backup

- update your CentOS 7 packages to the latest versions (yum update)

- follow the instructions here: https://github.com/PaloAltoNetworks/minemeld-ansible#howto-on-centos-7rhel-7

- DO NOT SPECIFY VERSION MASTER!

The new version of the playbook will install the MineMeld version that is still in beta but is way more reliable on CentOS7.
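For the backup step in the list above, a timestamped copy avoids overwriting an earlier backup. This is a minimal sketch: `backup_directory` is a hypothetical helper, and the destination in the usage comment is a placeholder, not a path from this thread.

```python
import os
import shutil
import time


def backup_directory(source_dir, backup_root):
    """Copy `source_dir` into a new timestamped folder under `backup_root`.

    Returns the path of the copy. Fails if that folder already exists.
    """
    name = os.path.basename(os.path.normpath(source_dir))
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = os.path.join(backup_root, "%s-%s" % (name, stamp))
    # symlinks=True copies links as links instead of following them.
    shutil.copytree(source_dir, destination, symlinks=True)
    return destination


# Usage (source path from the post; the destination is a placeholder):
# print(backup_directory("/opt/minemeld/local", "/root/minemeld-backups"))
```

Run it before removing any of the /opt/minemeld directories, and confirm that the copy contains your configuration before continuing.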

Luigi

02-28-2019 06:00 AM

Tried but all I get is this.

TASK [minemeld : minemeld-node-prototypes repo] *********************************************************************************************************************************************************************************************

ok: [127.0.0.1]

TASK [minemeld : minemeld-node-prototypes current link] *************************************************************************************************************************************************************************************

ok: [127.0.0.1]

TASK [minemeld : minemeld-core repo] ********************************************************************************************************************************************************************************************************

fatal: [127.0.0.1]: FAILED! => {"before": "bdd6879cc2c72094702d301e3e1bae4a198eed5f", "changed": false, "msg": "Local modifications exist in repository (force=no)."}

to retry, use: --limit @/opt/minemeld-ansible/local.retry

PLAY RECAP **********************************************************************************************************************************************************************************************************************************

127.0.0.1 : ok=31 changed=0 unreachable=0 failed=1
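The "Local modifications exist in repository (force=no)" message indicates that the existing minemeld-core checkout contains uncommitted changes to tracked files, for example a hand-edited source file. To see which files are involved before deleting anything, the output of `git status --porcelain` can be filtered as sketched below. The function names are hypothetical, and the repository path in the usage comment is an assumption inferred from the launcher.py location mentioned earlier in the thread.

```python
import subprocess


def tracked_changes(porcelain_output):
    """Return status lines for tracked files, skipping untracked ('??') entries."""
    return [
        line
        for line in porcelain_output.splitlines()
        if line.strip() and not line.startswith("??")
    ]


def repo_tracked_changes(repo_path):
    """Run `git status --porcelain` in `repo_path` and filter its output."""
    output = subprocess.check_output(
        ["git", "status", "--porcelain"], cwd=repo_path
    )
    return tracked_changes(output.decode("utf-8", "replace"))


# Usage (assumed checkout location, not confirmed in this thread):
# for line in repo_tracked_changes("/opt/minemeld/engine/core"):
#     print(line)
```

An empty result means git sees no modified tracked files in that directory, so the modified checkout would have to be somewhere else.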

02-28-2019 08:58 AM

Hi @StephenBradley,

did you delete the directory /opt/minemeld/prototypes before rerunning the playbook?

Thanks,

luigi

03-01-2019 05:49 AM

Well, it seems to have gotten further, but now I get a "firewall not running" error.
