Expedition csv logs stuck in pending

06-26-2018 07:43 AM - edited 06-27-2018 07:55 AM

Hi everyone,

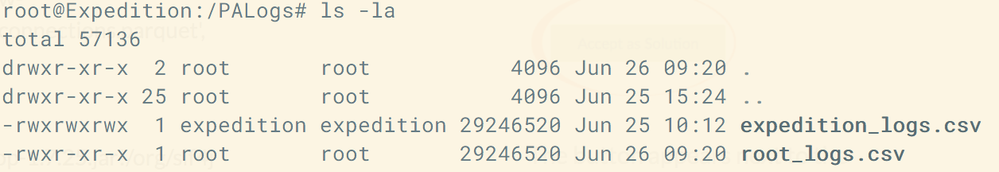

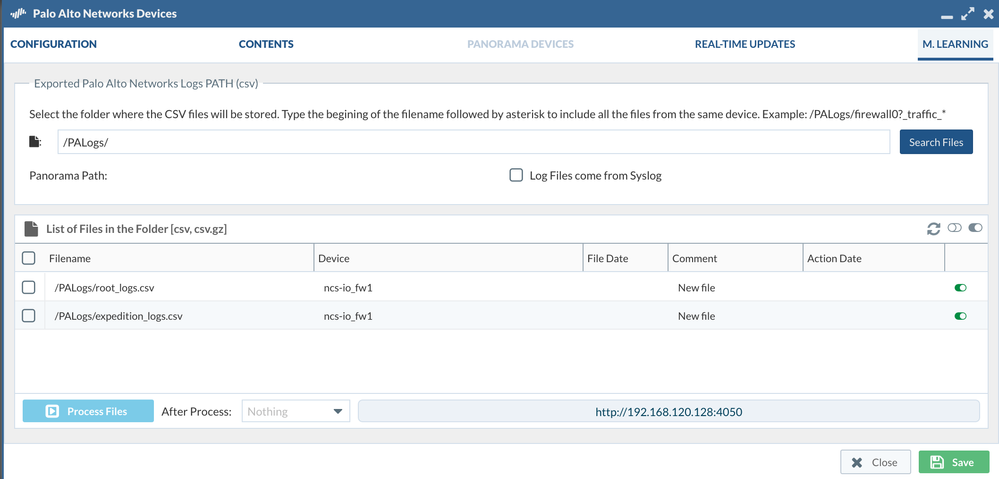

I have added firewall logs from our Palo Alto 5000 series to /PALogs on the Expedition VM. I made a duplicate of the original .csv with root as the owner, and left the original with expedition as the owner. Both files appear in Devices > M.LEARNING. When I run Process Files, the job stays in pending and nothing happens. Any ideas what the issue may be?

01-30-2020 12:18 AM

The URL that you called a "weird message" is expected and desired: it provides the link for watching how the processing is progressing under Spark.

The fact that it failed is not desired, though. Check whether you have files in /tmp with a name starting with error_, for instance /tmp/error_logCoCo. They provide information about the conversion of the CSV files into parquet (the internal format used for later log analysis) and about any errors that occurred.
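If it helps, here is a minimal Python sketch for finding those files and printing the end of each one. The helper names `find_error_logs` and `tail` are made up for illustration and are not part of Expedition; only the /tmp location and the error_ prefix come from the description above.

```python
from pathlib import Path


def find_error_logs(directory="/tmp", prefix="error_"):
    """Return the regular files in `directory` whose name starts with `prefix`."""
    return sorted(p for p in Path(directory).glob(prefix + "*") if p.is_file())


def tail(path, lines=20):
    """Return the last `lines` lines of a text file as a list of strings."""
    text = Path(path).read_text(errors="replace")
    return text.splitlines()[-lines:]


if __name__ == "__main__":
    for log in find_error_logs():
        print(f"== {log} ==")
        for line in tail(log):
            print(line)
```

The exception, if there is one, is normally near the end of the file, which is why only the tail is printed.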

01-30-2020 04:05 AM

Good to know that the weird message is expected behavior! I do have /tmp/error_logCoCo, and its contents are below. In addition, we are running PAN-OS 9.04 on the firewalls, so I am not sure whether Expedition can read the CSV files from that version of code. I am running the latest version of Expedition, though.

ubuntu@ip-10-170-1-35:/tmp$ more error_logCoCo

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

+------------+--------+--------------------+----+------------+

| fwSerial|panosver| csvpath|size|afterProcess|

+------------+--------+--------------------+----+------------+

|012001008166| 9.0.0|/home/expedition/...| 972| null|

+------------+--------+--------------------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2152

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580321790_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

+------------+--------+--------------------+----+------------+--------+---+---------------+

| fwSerial|panosver| csvpath|size|afterProcess| grouped|row|accumulatedSize|

+------------+--------+--------------------+----+------------+--------+---+---------------+

|012001008166| 9.0.0|/home/expedition/...| 972| null|grouping| 1| 972.0|

+------------+--------+--------------------+----+------------+--------+---+---------------+

Selection criteria: 0 < accumulatedSize and accumulatedSize <= 5978112

Processing from lowLimit:0 to highLimit:5978112 with StepLine:5978112

Few logs can fit in this batch:1

9.0.0:/home/expedition/logs/ivc-ind-mdf1-fw1_traffic_2020_01_29_last_calendar_day.csv

Logs of format 7.1.x NOT found

Logs of format 8.0.2 NOT found

Logs of format 8.1.0-beta17 NOT found

Logs of format 8.1.0 NOT found

Logs of format 9.0.0 found

Logs of format 9.1.0-beta NOT found

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

One loop done

+--------+--------+-------+----+------------+

|fwSerial|panosver|csvpath|size|afterProcess|

+--------+--------+-------+----+------------+

+--------+--------+-------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2153

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580321882_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

Exception in thread "main" java.util.NoSuchElementException: next on empty iterator

at scala.collection.Iterator$$anon$2.next(Iterator.scala:39)

at scala.collection.Iterator$$anon$2.next(Iterator.scala:37)

at scala.collection.IndexedSeqLike$Elements.next(IndexedSeqLike.scala:63)

at scala.collection.IterableLike$class.head(IterableLike.scala:107)

at scala.collection.mutable.ArrayOps$ofInt.scala$collection$IndexedSeqOptimized$$super$head(ArrayOps.scala:234)

at scala.collection.IndexedSeqOptimized$class.head(IndexedSeqOptimized.scala:126)

at scala.collection.mutable.ArrayOps$ofInt.head(ArrayOps.scala:234)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:441)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

+--------+--------+-------+----+------------+

|fwSerial|panosver|csvpath|size|afterProcess|

+--------+--------+-------+----+------------+

+--------+--------+-------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2154

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580322005_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

Exception in thread "main" java.util.NoSuchElementException: next on empty iterator

at scala.collection.Iterator$$anon$2.next(Iterator.scala:39)

at scala.collection.Iterator$$anon$2.next(Iterator.scala:37)

at scala.collection.IndexedSeqLike$Elements.next(IndexedSeqLike.scala:63)

at scala.collection.IterableLike$class.head(IterableLike.scala:107)

at scala.collection.mutable.ArrayOps$ofInt.scala$collection$IndexedSeqOptimized$$super$head(ArrayOps.scala:234)

at scala.collection.IndexedSeqOptimized$class.head(IndexedSeqOptimized.scala:126)

at scala.collection.mutable.ArrayOps$ofInt.head(ArrayOps.scala:234)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:441)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

+--------+--------+-------+----+------------+

|fwSerial|panosver|csvpath|size|afterProcess|

+--------+--------+-------+----+------------+

+--------+--------+-------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2157

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580328172_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

Exception in thread "main" java.util.NoSuchElementException: next on empty iterator

at scala.collection.Iterator$$anon$2.next(Iterator.scala:39)

at scala.collection.Iterator$$anon$2.next(Iterator.scala:37)

at scala.collection.IndexedSeqLike$Elements.next(IndexedSeqLike.scala:63)

at scala.collection.IterableLike$class.head(IterableLike.scala:107)

at scala.collection.mutable.ArrayOps$ofInt.scala$collection$IndexedSeqOptimized$$super$head(ArrayOps.scala:234)

at scala.collection.IndexedSeqOptimized$class.head(IndexedSeqOptimized.scala:126)

at scala.collection.mutable.ArrayOps$ofInt.head(ArrayOps.scala:234)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:441)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

+--------+--------+-------+----+------------+

|fwSerial|panosver|csvpath|size|afterProcess|

+--------+--------+-------+----+------------+

+--------+--------+-------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2158

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580328212_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

Exception in thread "main" java.util.NoSuchElementException: next on empty iterator

at scala.collection.Iterator$$anon$2.next(Iterator.scala:39)

at scala.collection.Iterator$$anon$2.next(Iterator.scala:37)

at scala.collection.IndexedSeqLike$Elements.next(IndexedSeqLike.scala:63)

at scala.collection.IterableLike$class.head(IterableLike.scala:107)

at scala.collection.mutable.ArrayOps$ofInt.scala$collection$IndexedSeqOptimized$$super$head(ArrayOps.scala:234)

at scala.collection.IndexedSeqOptimized$class.head(IndexedSeqOptimized.scala:126)

at scala.collection.mutable.ArrayOps$ofInt.head(ArrayOps.scala:234)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:441)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

ubuntu@ip-10-170-1-35:/tmp$
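A dump this long is easier to read when reduced to one line per run. The following is a rough Python sketch that pulls the Task ID, the CSV Files Path and the first exception (if any) out of each run. The function name `summarize_runs` is hypothetical, and the parsing assumes the label layout seen in the output above ("Task ID:....", "CSV Files Path:....", "Exception in thread").

```python
import re

# Labels are followed by a run of dots and optional spaces before the value.
TASK_RE = re.compile(r"^Task ID:\.*\s*(\d+)")
PATH_RE = re.compile(r"^CSV Files Path:\.*\s*(\S+)")


def summarize_runs(log_text):
    """Return one dict per run: task_id, csv_files_path, exception (or None)."""
    runs = []
    for raw in log_text.splitlines():
        line = raw.strip()
        match = TASK_RE.match(line)
        if match:
            # A Task ID line marks the start of a new run's parameters.
            runs.append({"task_id": int(match.group(1)),
                         "csv_files_path": None,
                         "exception": None})
            continue
        if not runs:
            continue  # ignore anything before the first Task ID
        match = PATH_RE.match(line)
        if match:
            runs[-1]["csv_files_path"] = match.group(1)
        elif line.startswith("Exception in thread") and runs[-1]["exception"] is None:
            runs[-1]["exception"] = line
    return runs


if __name__ == "__main__":
    with open("/tmp/error_logCoCo", errors="replace") as fh:
        for run in summarize_runs(fh.read()):
            print(run)
```

Each exception is attributed to the most recent Task ID seen before it, which matches the order of the output above.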

01-30-2020 05:48 AM

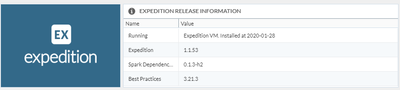

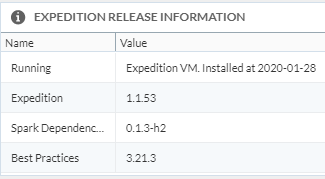

Which version of Expedition are you running? 1.1.53?

For some reason, Expedition is trying to process "no files". For instance, you will probably find that the /tmp/1580328212_traffic_files.csv file is empty.

This was an issue we fixed back in 1.1.50 (if I remember correctly), so I just want to confirm that you are running 1.1.53, in which case this is a new bug.
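To check one of those /tmp/*_traffic_files.csv files without opening it by hand, something like the Python sketch below could be used. It assumes the file is a plain comma-separated list with the header fwSerial,panosver,csvpath,size,afterProcess (the columns shown in the Spark output), and it treats a row as a real entry only if its csvpath column is filled in. The name `manifest_entries` is invented for this example.

```python
import csv


def manifest_entries(path):
    """Return the rows of a *_traffic_files.csv list that name a CSV path."""
    with open(path, newline="") as fh:
        reader = csv.DictReader(fh)
        # Short or stray lines leave csvpath as None; those are not entries.
        return [row for row in reader if (row.get("csvpath") or "").strip()]


if __name__ == "__main__":
    entries = manifest_entries("/tmp/1580328212_traffic_files.csv")
    if entries:
        for row in entries:
            print(row["panosver"], row["csvpath"], row["size"])
    else:
        print("No log files listed: nothing for the Spark job to process")
```

An empty result would be consistent with the "next on empty iterator" exception in the error log.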

01-30-2020 07:05 AM

Yes, I am running version 1.1.53.

01-30-2020 07:06 AM

Could you contact us at fwmigrate at paloaltonetworks dot com so we can set up a Zoom session and check the issue live?

Best,

01-30-2020 07:09 AM

Ran a new file this morning and received the same error. The "1580390733_traffic_files.csv" is empty as you stated it would be.

---- CREATING SPARK Session:

warehouseLocation:/data/spark-warehouse

+--------+--------+-------+----+------------+

|fwSerial|panosver|csvpath|size|afterProcess|

+--------+--------+-------+----+------------+

+--------+--------+-------+----+------------+

Memory: 5838m

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[3]

Available RAM (MB):........... 5978112

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 2162

My IP:........................ 10.170.1.35

Expedition IP:................ 10.170.1.35:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:................./tmp/1580390733_traffic_files.csv

Parquet output path:.......... file:///data/connections.parquet

Temporary folder:............. /data

---- AppID DB LOAD:

Application Categories loading...

Application Categories loaded

Exception in thread "main" java.util.NoSuchElementException: next on empty iterator

at scala.collection.Iterator$$anon$2.next(Iterator.scala:39)

at scala.collection.Iterator$$anon$2.next(Iterator.scala:37)

at scala.collection.IndexedSeqLike$Elements.next(IndexedSeqLike.scala:63)

at scala.collection.IterableLike$class.head(IterableLike.scala:107)

at scala.collection.mutable.ArrayOps$ofInt.scala$collection$IndexedSeqOptimized$$super$head(ArrayOps.scala:234)

at scala.collection.IndexedSeqOptimized$class.head(IndexedSeqOptimized.scala:126)

at scala.collection.mutable.ArrayOps$ofInt.head(ArrayOps.scala:234)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:441)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

ubuntu@ip-10-170-1-35:/tmp$

ubuntu@ip-10-170-1-35:/tmp$ more 1580390733_traffic_files.csv

fwSerial,panosver,csvpath,size,afterProcess

/

ubuntu@ip-10-170-1-35:/tmp$

01-30-2020 07:26 AM

Until we do a Zoom session: is it possible that the firewall log files are actually empty?

The best approach would be to check it live and rule out the other possibilities.
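In the meantime, a quick way to rule out empty log files is to list each CSV with its size and line count. This is only a sketch in Python: `summarize_csv_logs` is a made-up helper name, and /home/expedition/logs is the directory that appears in the earlier error log, so adjust it if your logs live elsewhere.

```python
from pathlib import Path


def summarize_csv_logs(directory):
    """Return (file name, size in bytes, line count) for each .csv in `directory`."""
    report = []
    for path in sorted(Path(directory).glob("*.csv")):
        with path.open("rb") as fh:
            line_count = sum(1 for _ in fh)  # stream the file, do not load it whole
        report.append((path.name, path.stat().st_size, line_count))
    return report


if __name__ == "__main__":
    for name, size, lines in summarize_csv_logs("/home/expedition/logs"):
        flag = "  <-- empty" if lines == 0 else ""
        print(f"{name}: {size} bytes, {lines} lines{flag}")
```

A file with zero lines, or with only a header line, would have nothing in it for Expedition to convert.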
