ML gets stuck at "Pending"

08-01-2018 04:08 PM

I started by running the command

scp export log traffic start-time equal 2018/07/30@00:00:00 end-time equal 2018/07/30@23:45:00 to expedition@172.30.200.117:/PALogs/mltest.csv

on my PA220.

root@Expedition:/PALogs# ls -l

total 64296

-rw-rw-r-- 1 expedition expedition 65830760 Aug 1 17:35 mltest.csv

drwxr-xr-x 2 www-data www-data 4096 Aug 1 17:45 sparkLocalDir

drwxr-xr-x 2 www-data www-data 4096 Aug 1 17:37 spark-warehouse

root@Expedition:/PALogs#

What am I missing here?
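
The listing above shows the exported CSV owned by `expedition`, while the Spark working directories are owned by `www-data`. Nothing in this thread confirms that file permissions cause the "Pending" state, so treat the following only as a minimal sketch for ruling that out. The helper name `summarize_csv_files` is hypothetical and not part of Expedition; `/PALogs` is the directory from the post.

```python
import stat
from pathlib import Path


def summarize_csv_files(log_dir):
    """Return (name, size_bytes, mode_string, world_readable) for each *.csv in log_dir."""
    rows = []
    for path in sorted(Path(log_dir).glob("*.csv")):
        info = path.stat()
        mode = stat.filemode(info.st_mode)  # same format as the first column of `ls -l`
        # True when the "other" read bit is set, i.e. users outside the
        # owning user and group can read the file
        world_readable = bool(info.st_mode & stat.S_IROTH)
        rows.append((path.name, info.st_size, mode, world_readable))
    return rows


if __name__ == "__main__":
    # Assumption: /PALogs is where the firewall exports its traffic logs
    for name, size, mode, readable in summarize_csv_files("/PALogs"):
        print(f"{mode} {size:>12} {name} world-readable={readable}")
```

For the file in the listing above, the mode `-rw-rw-r--` already has the world-read bit set, so this check alone would not explain the problem.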

09-09-2019 04:26 AM

This worked for me too!

All files being processed within a minute now.

07-20-2020 04:49 AM

Hello @dgildelaig,

I have the same issue with my Expedition. I did everything according to the official guide, and for some reason the ML is stuck on Pending. Could you please help me out? It is really urgent.

07-20-2020 09:21 AM

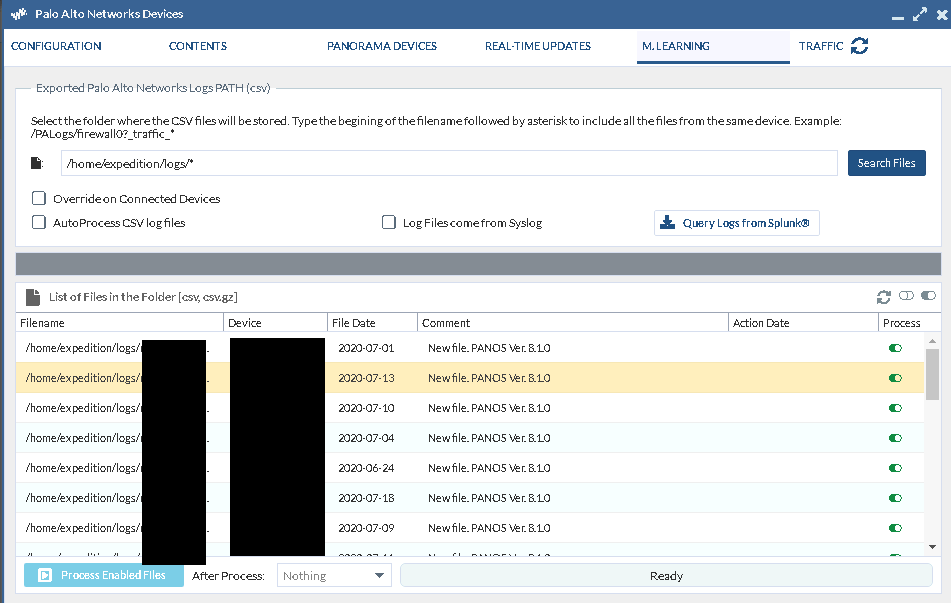

You are within a Panorama device. To process the files, you need to do it from the FW devices.

Note that you can enable auto-process so that you do not need to go into the devices in the future to process CSV log files.

07-20-2020 10:43 AM

I've set up log export on each FW separately. I'm receiving the logs from the FWs, so that's not a problem. My problem is with data processing: the "process" option is not available. I've tried everything, even checking the auto-process option, without any luck.

Any idea?

07-20-2020 11:01 AM

The process button is not available because you are trying to click the button within a Panorama device.

You can only click that button if you are within a FW device in Expedition.

07-21-2020 02:03 AM

What is your suggestion? To add each FW separately?

07-24-2020 08:56 AM

Under the Panorama Devices tab in the Panorama, you can click on "Retrieve connected devices".

This will create all the devices separately for you. Within them, you will see that they inherit the settings for ML from their Panorama, so you only need to check the auto-process checkbox.

07-27-2020 04:24 AM

I did it. I can see 32 CSV files pending to be processed, and "Auto-process CSV log files" is checked, but nothing happened.

07-27-2020 04:49 AM

Please contact us at fwmigrate@paloaltonetworks.com to coordinate a session in which we will show you the steps to execute, mainly:

- Process the logs from the NGFW and not from the Panorama device.

07-27-2020 05:32 PM

Can you post the solution here? I have the same issue.

07-28-2020 09:02 AM
