Log Processing not working

11-01-2019 05:45 AM

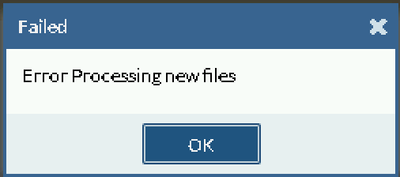

Greetings! My instance of Expedition (1.1.43) has stopped processing logs entirely. The device details show the new logs. When I click "process logs" from there, a job appears to be added, but it contains zero tasks and never completes or fails. Clicking the little green "play" button returns "Error processing log files". /tmp/PAN_LogCoCo.log is empty. Any suggestions for things I can check or do to get log processing going again? Thanks!

Accepted Solutions

11-04-2019 01:41 AM

We are working on an issue related to CSV file processing.

I expect to release an update later today or tomorrow when this issue has been addressed.

11-01-2019 08:01 AM

I have the exact same issue after the upgrade from 1.41 to this version.

I saw some errors in the CLI while upgrading.

Did you also update Expedition?

Regards

11-05-2019 02:56 AM - edited 11-05-2019 02:56 AM

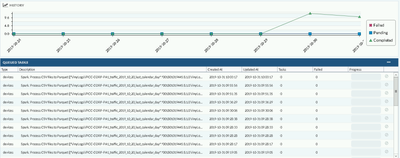

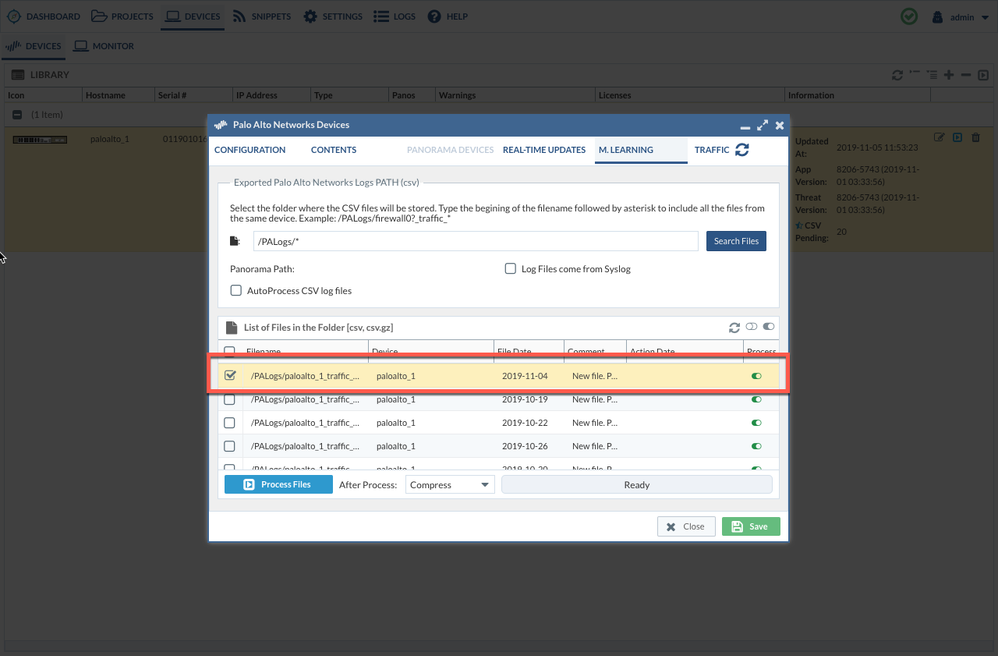

I have now upgraded to the latest version 1.1.45 and it seems that the logs are being processed.

Even though I've only selected one log file to be processed, all 20 pending files seem to be processed at once.

I'll check whether the log processing finishes OK now.

Regards,

11-05-2019 03:04 AM

When you say you "only selected one file", do you mean that the other files were set as Ignored?

If so, I will open a bug ticket to check the issue.

Otherwise, remember that the way to state which files are going to be processed is by defining which files are ignored. By default, all files are set to be processed.
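
To make the rule above concrete, here is a minimal sketch of "process everything except what is ignored". It is only an illustration of the selection logic as described in this reply, not Expedition's code; the function name `files_to_process` and the file names are hypothetical.

```python
def files_to_process(all_files, ignored):
    """Return every pending file that is not marked as ignored, keeping order."""
    ignored_set = set(ignored)
    return [name for name in all_files if name not in ignored_set]


# Hypothetical file names, for illustration only.
pending = ["day1.csv", "day2.csv", "day3.csv"]

# Nothing ignored: all pending files are processed.
print(files_to_process(pending, ignored=[]))

# To process a single file, the others have to be ignored explicitly.
print(files_to_process(pending, ignored=["day2.csv", "day3.csv"]))
```

In other words, ticking one file does not narrow the job; only the ignored set does.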

11-05-2019 03:08 AM - edited 11-05-2019 03:11 AM

Edit: ok, all files are going to be processed except the ones you set to ignore.

I meant to say that I'm only selecting one file to test if the processing would work again.

Since it's taking that long I'm assuming that all logs are being processed:

    root@Expedition:/home/expedition# tail -f /tmp/error_logCoCo
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.4.3-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]
    ---- CREATING SPARK Session:
    warehouseLocation:/datastore/spark-warehouse
    +------------+--------+--------------------+----+------------+
    |    fwSerial|panosver|             csvpath|size|afterProcess|
    +------------+--------+--------------------+----+------------+
    |011901016881|   8.1.0|/PALogs/paloalto_...|4957|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4332|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4629|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3861|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4629|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3840|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5264|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3892|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3646|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4783|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4772|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4619|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5161|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4752|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4076|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4895|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3789|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4742|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5059|    Compress|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5407|    Compress|
    +------------+--------+--------------------+----+------------+
    Memory: 6373m
    LogCollector&Compacter called with the following parameters:
    Parameters for execution
    Master[processes]:............ local[3]
    Available RAM (MB):........... 6525952
    User:......................... admin
    debug:........................ false
    Parameters for Job Connections
    Task ID:...................... 152
    My IP:........................ 10.3.1.30
    Expedition IP:................ 10.3.1.30:3306
    Time Zone:.................... Europe/Helsinki
    dbUser (dbPassword):.......... root (************)
    projectName:.................. demo
    Parameters for Data Sources
    App Categories (source):........ (Expedition)
    CSV Files Path:................./tmp/1572951103_traffic_files.csv
    Parquet output path:.......... file:///PALogs/connections.parquet
    Temporary folder:............. /datastore
    ---- AppID DB LOAD:
    Application Categories loading...
    Application Categories loaded
    +------------+--------+--------------------+----+------------+--------+---+---------------+
    |    fwSerial|panosver|             csvpath|size|afterProcess| grouped|row|accumulatedSize|
    +------------+--------+--------------------+----+------------+--------+---+---------------+
    |011901016881|   8.1.0|/PALogs/paloalto_...|4957|    Compress|grouping|  1|         4957.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4332|    Compress|grouping|  2|         9289.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4629|    Compress|grouping|  3|        13918.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3861|    Compress|grouping|  4|        17779.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4629|    Compress|grouping|  5|        22408.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3840|    Compress|grouping|  6|        26248.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5264|    Compress|grouping|  7|        31512.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3892|    Compress|grouping|  8|        35404.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3646|    Compress|grouping|  9|        39050.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4783|    Compress|grouping| 10|        43833.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4772|    Compress|grouping| 11|        48605.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4619|    Compress|grouping| 12|        53224.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5161|    Compress|grouping| 13|        58385.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4752|    Compress|grouping| 14|        63137.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4076|    Compress|grouping| 15|        67213.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4895|    Compress|grouping| 16|        72108.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|3789|    Compress|grouping| 17|        75897.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|4742|    Compress|grouping| 18|        80639.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5059|    Compress|grouping| 19|        85698.0|
    |011901016881|   8.1.0|/PALogs/paloalto_...|5407|    Compress|grouping| 20|        91105.0|
    +------------+--------+--------------------+----+------------+--------+---+---------------+
    Selection criteria: 0 < accumulatedSize and accumulatedSize <= 6525952
    Processing from lowLimit:0 to highLimit:6525952 with StepLine:6525952
    Few logs can fit in this batch:20
    8.1.0:/PALogs/paloalto_1_traffic_2019_10_25_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_11_01_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_11_02_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_31_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_19_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_17_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_11_03_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_28_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_11_05_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_27_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_30_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_22_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_11_04_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_18_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_26_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_21_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_29_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_20_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_23_last_calendar_day.csv,/PALogs/paloalto_1_traffic_2019_10_24_last_calendar_day.csv
    Logs of format 7.1.x NOT found
    Logs of format 8.0.2 NOT found
    Logs of format 8.1.0-beta17 NOT found
    Logs of format 8.1.0 found
    Logs of format 9.0.0 NOT found
    Logs of format 9.1.0-beta NOT found
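
For anyone reading the output above: the `accumulatedSize` column looks like a running total of the `size` column, and the line `Selection criteria: 0 < accumulatedSize and accumulatedSize <= 6525952` suggests that a batch takes every file whose running total falls inside that window. The sketch below reproduces that reading in plain Python. It is an interpretation of the log, not Expedition's actual Spark code; the function name `select_batch` is hypothetical, and the unit of the `size` values is not stated in the output.

```python
from itertools import accumulate


def select_batch(sizes, low_limit, high_limit):
    """Return (row, size, accumulated) for files whose running total is in (low_limit, high_limit]."""
    totals = accumulate(sizes)  # running sum, like the accumulatedSize column
    return [
        (row, size, total)
        for row, (size, total) in enumerate(zip(sizes, totals), start=1)
        if low_limit < total <= high_limit
    ]


# The 20 "size" values from the table above, in the order shown.
sizes = [4957, 4332, 4629, 3861, 4629, 3840, 5264, 3892, 3646, 4783,
         4772, 4619, 5161, 4752, 4076, 4895, 3789, 4742, 5059, 5407]

batch = select_batch(sizes, low_limit=0, high_limit=6525952)
print(len(batch))    # 20, matching "Few logs can fit in this batch:20"
print(batch[-1][2])  # 91105, the last accumulatedSize in the table
```

Under this reading, all 20 files land in a single batch because their combined size (91105) is far below the upper limit, which matches all pending files being processed in one run.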

11-05-2019 03:09 AM

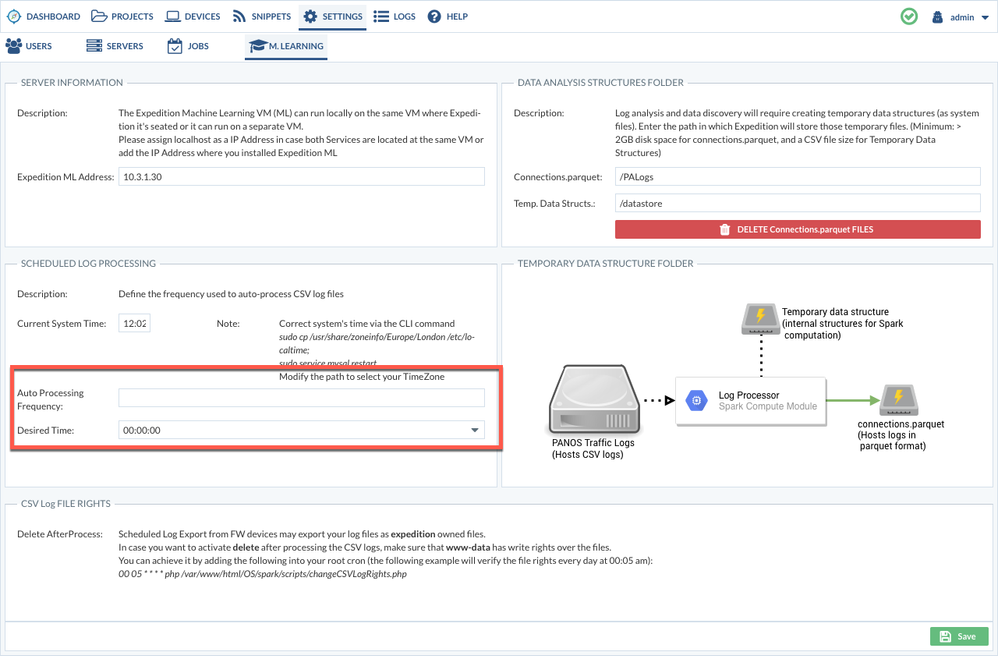

Another thing that isn't OK is the machine learning auto processing.

It should be hard-coded, but it shows as empty in the GUI.

11-05-2019 03:20 AM

I meant to say that I've only selected one file.

But you explained that all will be processed except the ones that you set to ignore.

11-05-2019 04:17 AM

All files have been processed now.

The only thing I need to verify now is the automatic processing of the logs.

11-05-2019 04:25 AM

I am modifying the interface to avoid this confusion. You are not the first, the second, or even the tenth person who thought that only the check-boxed files would be processed.

11-05-2019 06:21 AM

Unfortunately, I'm not seeing the logs processed. I no longer get an error, and processing appears to start, since several Java processes spin up when I click the "process files" button. The jobs show as failed, but with no clue as to why. A link to the error_logCoCo is included below. I haven't seen anything in there that indicates where the problem is. Any thoughts? Thanks!

11-05-2019 01:10 PM

UPDATE: I noticed a low disk space error in the log. Took care of that (Moved location of DATA ANALYSIS STRUCTURES FOLDERs). Re-processed the files and things worked as expected.
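
Since low disk space turned out to be the cause here, a quick free-space check before re-running the processing may save some time. The sketch below uses Python's standard `shutil.disk_usage`. The paths `/datastore`, `/PALogs`, and `/tmp` are taken from the log output earlier in this thread and may differ on other installs; the 5 GB threshold and the function name `free_space_report` are arbitrary assumptions, not Expedition requirements.

```python
import shutil


def free_space_report(paths, min_free_gb=5.0):
    """Map each path to (free GiB, meets threshold), or None if the path cannot be read."""
    report = {}
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except OSError:
            # Path missing or not accessible on this machine.
            report[path] = None
            continue
        free_gb = usage.free / 1024**3
        report[path] = (round(free_gb, 1), free_gb >= min_free_gb)
    return report


# Paths as seen in the error_logCoCo output above; adjust for your install.
for path, result in free_space_report(["/datastore", "/PALogs", "/tmp"]).items():
    if result is None:
        print(f"{path}: not found")
    else:
        free_gb, ok = result
        print(f"{path}: {free_gb} GiB free ({'ok' if ok else 'LOW'})")
```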

11-05-2019 01:19 PM

I ran into this error as well: because the logs had gone unprocessed for so long, the folder had grown in size.

I added some additional space, relaunched the processing, and everything went OK.

I'm still seeing the (reduced) log files even with "delete logs after processing" selected.
