Output JSON for Incident Mapping

03-05-2021 12:41 PM

Hi all,

We have several incidents whose mapping we need to work on, but they are relatively rare: they are not pulled from the SplunkPy integration often enough to show up in any of the events we get when we open the mapper (6.0) and pull from the integration.

They have been classified correctly, and we have several instances in XSOAR, so what we would like to do is to export the JSON from an existing incident and load it into the mapper to map the fields.

We've tried several commands (PrintContext and DumpJSON), but neither seems to give us the incident entries.

How can we best export events as JSON to load into the mapper and map fields?

Thanks,

Sean

Accepted Solutions


03-08-2021 04:19 AM

You could export an existing incident, turn its labels into the top-level fields of a JSON object, and use that JSON as a file input to the mapper.

For example, create an automation script called "exportIncidentLabels" and use the following code:

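    import json

    # Labels are a list of {'type': ..., 'value': ...} entries.
    labels = demisto.incident().get('labels', [])

    # Flatten the labels into a single dict keyed by label type.
    parsed_incident = dict()
    for item in labels:
        parsed_incident[item['type']] = item['value']

    # Print the dict as a JSON string in the war room.
    demisto.results([json.dumps(parsed_incident)])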

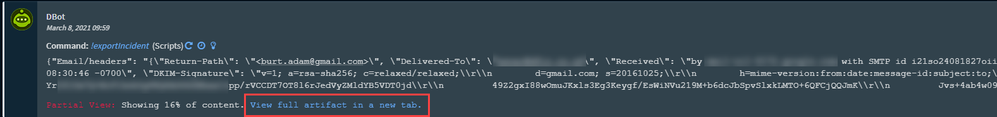

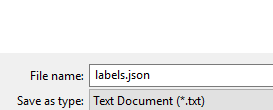
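To try the label flattening outside XSOAR, here is a minimal standalone sketch of the same loop. The function name `labels_to_dict` and the sample labels are made up for illustration; real labels come from `demisto.incident()` as in the script above.

```python
import json


def labels_to_dict(labels):
    """Flatten a list of {'type': ..., 'value': ...} labels into one dict."""
    parsed = {}
    for item in labels or []:
        # If a label type repeats, the last value wins, as in the script above.
        parsed[item["type"]] = item["value"]
    return parsed


# Hypothetical sample labels, for illustration only.
sample_labels = [
    {"type": "Brand", "value": "SplunkPy"},
    {"type": "severity", "value": "high"},
]

print(json.dumps(labels_to_dict(sample_labels), indent=2))
```

Note that labels sharing the same type collapse into one key, so this only suits incidents where each label type appears once.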

Then execute it from the war room of the desired incident that contains the relevant labels. When the results show, download them as a file:

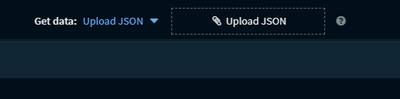

Then open the mapper and use:

However you get the data out, the mapper JSON input file expects a JSON list of dictionaries. Each array entry is treated as a new incident, and its JSON dictionary is treated as the "rawJSON" input into that incident.

    [
        {
            "incident1_field1": "value1",
            "incident1_field2": "value2"
        },
        {
            "incident2_field1": "value1",
            "incident2_field2": "value2"
        }
    ]
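If you want to check a file against that shape before uploading it, a quick local check might look like the sketch below. The helper name `check_mapper_input` is hypothetical, and it only verifies the list-of-dictionaries structure described above, not anything else the mapper may require.

```python
import json


def check_mapper_input(text):
    """Return the number of incidents if text is a JSON list of objects."""
    data = json.loads(text)
    if not isinstance(data, list):
        raise ValueError("top-level JSON value must be a list")
    for i, entry in enumerate(data):
        if not isinstance(entry, dict):
            raise ValueError(f"entry {i} is not a JSON object")
    return len(data)


# A well-formed input with two incidents.
print(check_mapper_input('[{"field1": "value1"}, {"field1": "value2"}]'))
```

A bare object such as `{"field1": "value1"}` fails this check with a `ValueError`, because it is not wrapped in a list.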


03-05-2021 02:41 PM

Hi Sean,

In your current mapper, do you map any unmapped fields into the labels in the context data?

Regards

Adam


03-11-2021 10:04 AM

So I finally got around to it and added the script. It outputs the labels nicely. I ran them through CyberChef to make sure everything was correct and there were no formatting issues, but when I upload the file in the Mapping Editor I get this:

Error parsing request

json: cannot unmarshal number into Go struct field queryDTOnList.list of type map[string]interface {}

This is the same message I get when I export from Splunk as JSON as well.


03-11-2021 10:09 AM

I figured it out. The JSON needs to be enclosed in '[' and ']' for it to work.

Adding these to the start and end of the file after the export from the script worked!
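For anyone who would rather not edit the file by hand, the same wrapping can be sketched in a few lines of Python. The function name `wrap_for_mapper` is made up for this example; it assumes the export is either a single JSON object or already a list.

```python
import json


def wrap_for_mapper(text):
    """Wrap a single exported JSON object in a list; leave lists unchanged."""
    data = json.loads(text)
    if isinstance(data, dict):
        data = [data]
    return json.dumps(data, indent=2)


# Hypothetical export of one incident's labels.
exported = '{"Brand": "SplunkPy", "severity": "high"}'
print(wrap_for_mapper(exported))
```

Parsing and re-serializing, rather than concatenating '[' and ']' as strings, also catches malformed JSON before it reaches the Mapping Editor.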

Thanks!
