Nodes keep stopping - how to start and keep them started?

06-04-2018 12:11 PM

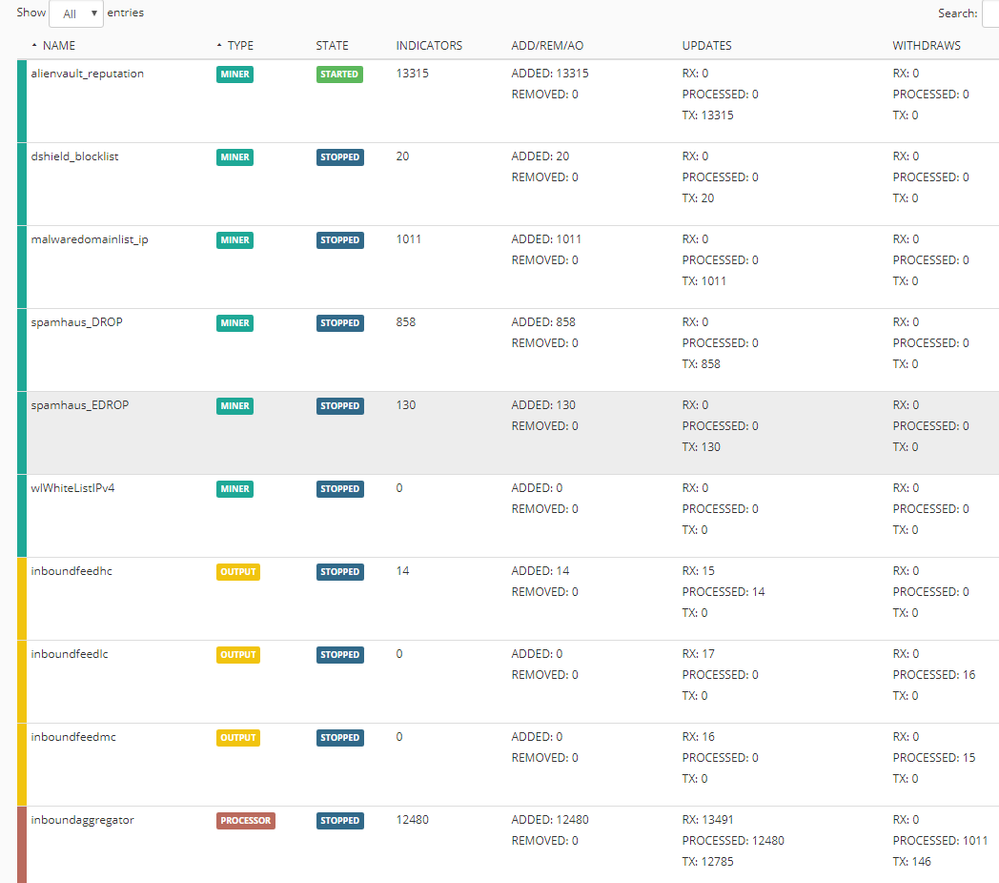

Just spun up a new Minemeld server and it's working; however, the nodes keep stopping and I am not sure how to get them to start up and stay started. Rebooting brings everything back up and the nodes run for about a minute, then they all stop (see screenshot). At home I don't have this issue: all the nodes stay running/started and do their polling, etc. without issues. I should be seeing far more indicators, especially on the alienvault_reputation node. Thoughts?

06-05-2018 07:46 AM

I am going to reply to my own post here with more info... I have been trying things to get this working and I have discovered the following:

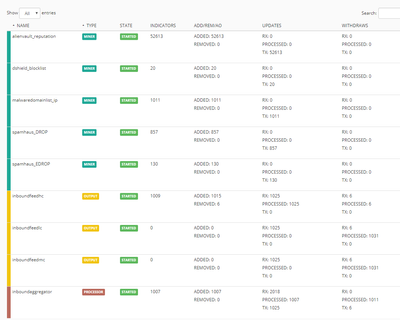

* If I remove the alienvault_reputation input from the inboundaggregator and then commit, the alienvault_reputation miner gathers everything and all the nodes stay up and running (but of course the EDL will now be missing the ~60,000 objects from the alienvault node)

* If I add the alienvault node back into the aggregator and commit then it tries to poll and stops around 10,000 objects (sometimes less) and then all the nodes grind to a halt again

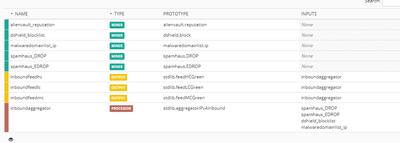

Here you can see all nodes running and the alienvault poll completes

But only works when it is *not* added to the inboundaggregator (seen here)

As soon as I add it to the aggregator and commit, all heck breaks loose.

* At home I have a VM running minemeld with the exact same configuration but do not have this issue at all. The only difference between the minemeld instance at home and this one is at home I am using Ubuntu 14.04 and here in the office we are trying to get this going using CentOS 7 so I installed it using the Ansible recipe.

p.s. Maybe I need to update Minemeld? But there seems to be no minemeld update script when installing using Ansible, how does one update Minemeld on an Ansible CentOS install?

06-07-2018 08:46 AM

Hi @hshawn,

This looks like a lack of computing resources to me. Any chance you could take a look at /opt/minemeld/log/minemeld-engine.log to see if there is any clue there?

How does your home computer compare to the one that is failing?

06-07-2018 09:27 AM - edited 06-07-2018 09:36 AM

Thanks @xhoms,

This VM has about 2.5 times the CPU, RAM, and disk space of my home lab setup, which has 2 vCPUs and 4 GB of RAM. Here is the output from the requested log. I ran a tail -f after a reboot and then added the alienvault reputation list to the aggregator. After about a minute or so, all nodes were stopped and dead.

2018-06-07T09:21:49 (2157)basepoller._huppable_wait INFO: hup is clear: False

2018-06-07T09:22:37 (2146)mgmtbus._merge_status ERROR: old clock: 86 > 82 - dropped

2018-06-07T09:22:37 (2146)mgmtbus._merge_status ERROR: old clock: 23 > 22 - dropped

2018-06-07T09:22:37 (2146)mgmtbus._merge_status ERROR: old clock: 21 > 19 - dropped

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/greenlet.py", line 327, in run

result = self._run(*self.args, **self.kwargs)

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 561, in _ioloop

conn.drain_events()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 323, in drain_events

return amqp_method(channel, args)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/channel.py", line 241, in _close

reply_code, reply_text, (class_id, method_id), ChannelError,

NotFound: Basic.publish: (404) NOT_FOUND - no exchange 'inboundaggregator' in vhost '/'

<Greenlet at 0x7ff0b5cf5f50: <bound method AMQP._ioloop of <minemeld.comm.amqp.AMQP object at 0x7ff0b8218650>>(10)> failed with NotFound

2018-06-07T09:22:49 (2157)amqp._ioloop_failure ERROR: _ioloop_failure: exception in ioloop

Traceback (most recent call last):

Traceback (most recent call last):

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 567, in _ioloop_failure

g.get()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/greenlet.py", line 251, in get

raise self._exception

NotFound: Basic.publish: (404) NOT_FOUND - no exchange 'inboundaggregator' in vhost '/'

2018-06-07T09:22:49 (2157)chassis.stop INFO: chassis stop called

2018-06-07T09:22:49 (2157)base.state INFO: spamhaus_EDROP - transitioning to state 8

2018-06-07T09:22:49 (2157)basepoller.stop INFO: spamhaus_EDROP - # indicators: 130

2018-06-07T09:22:49 (2157)base.state INFO: dshield_blocklist - transitioning to state 8

2018-06-07T09:22:49 (2157)basepoller.stop INFO: dshield_blocklist - # indicators: 20

2018-06-07T09:22:52 (2157)base.state INFO: inboundaggregator - transitioning to state 8

2018-06-07T09:22:52 (2157)ipop.stop INFO: inboundaggregator - # indicators: 16761

2018-06-07T09:22:52 (2157)base.state INFO: inboundfeedhc - transitioning to state 8

2018-06-07T09:22:52 (2157)base.state INFO: spamhaus_DROP - transitioning to state 8

2018-06-07T09:22:52 (2157)basepoller.stop INFO: spamhaus_DROP - # indicators: 855

2018-06-07T09:22:52 (2157)base.state INFO: inboundfeedmc - transitioning to state 8

2018-06-07T09:22:52 (2157)base.state INFO: malwaredomainlist_ip - transitioning to state 8

2018-06-07T09:22:52 (2157)basepoller.stop INFO: malwaredomainlist_ip - # indicators: 1011

2018-06-07T09:22:52 (2157)base.state INFO: inboundfeedlc - transitioning to state 8

2018-06-07T09:22:52 (2157)base.state INFO: alienvault_reputation - transitioning to state 8

2018-06-07T09:22:52 (2157)basepoller.stop INFO: alienvault_reputation - # indicators: 52338

2018-06-07T09:22:52 (2157)chassis.stop INFO: Stopping fabric

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/hub.py", line 544, in switch

switch(value)

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 575, in _ioloop_failure

l()

File "/opt/minemeld/engine/core/minemeld/fabric.py", line 100, in _comm_failure

self.chassis.fabric_failed()

File "/opt/minemeld/engine/core/minemeld/chassis.py", line 181, in fabric_failed

self.stop()

File "/opt/minemeld/engine/core/minemeld/chassis.py", line 217, in stop

self.fabric.stop()

File "/opt/minemeld/engine/core/minemeld/fabric.py", line 112, in stop

self.comm.stop()

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 675, in stop

sc.disconnect()

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 424, in disconnect

self.channel.close()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/channel.py", line 176, in close

self._send_method((20, 40), args)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/abstract_channel.py", line 56, in _send_method

self.channel_id, method_sig, args, content,

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/method_framing.py", line 221, in write_method

write_frame(1, channel, payload)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/transport.py", line 182, in write_frame

frame_type, channel, size, payload, 0xce,

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/socket.py", line 460, in sendall

data_sent += self.send(_get_memory(data, data_sent), flags)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/socket.py", line 437, in send

return sock.send(data, flags)

error: [Errno 104] Connection reset by peer

<built-in method switch of greenlet.greenlet object at 0x7ff0b5cf5190> failed with error

Let me know what you think, thanks!
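The `stop` lines in the log above report how many indicators each node held when the chassis shut down. Below is a minimal sketch for pulling those numbers out of the engine log, assuming the line format shown in this post. Treating `basepoller` lines as miners is an assumption made for illustration, and `SAMPLE` is just a copy of lines from this post.

```python
import re

# Matches the per-node "stop" lines, for example:
# 2018-06-07T09:22:52 (2157)ipop.stop INFO: inboundaggregator - # indicators: 16761
STOP_LINE = re.compile(
    r"\(\d+\)(?P<source>\S+)\.stop INFO: (?P<node>\S+) - # indicators: (?P<count>\d+)"
)


def indicators_at_stop(lines):
    """Return {node: (logger_source, indicator_count)} from engine log lines."""
    result = {}
    for line in lines:
        match = STOP_LINE.search(line)
        if match:
            result[match.group("node")] = (
                match.group("source"),
                int(match.group("count")),
            )
    return result


# Lines copied from the log in this post.
SAMPLE = """\
2018-06-07T09:22:49 (2157)chassis.stop INFO: chassis stop called
2018-06-07T09:22:49 (2157)basepoller.stop INFO: spamhaus_EDROP - # indicators: 130
2018-06-07T09:22:49 (2157)basepoller.stop INFO: dshield_blocklist - # indicators: 20
2018-06-07T09:22:52 (2157)ipop.stop INFO: inboundaggregator - # indicators: 16761
2018-06-07T09:22:52 (2157)basepoller.stop INFO: spamhaus_DROP - # indicators: 855
2018-06-07T09:22:52 (2157)basepoller.stop INFO: malwaredomainlist_ip - # indicators: 1011
2018-06-07T09:22:52 (2157)basepoller.stop INFO: alienvault_reputation - # indicators: 52338
""".splitlines()

counts = indicators_at_stop(SAMPLE)
# Assumption: nodes logged by "basepoller" are the miners.
miner_total = sum(n for source, n in counts.values() if source == "basepoller")
aggregator_total = counts["inboundaggregator"][1]
print("miners:", miner_total, "aggregator:", aggregator_total)
```

The two totals are only a rough comparison, since the aggregator may merge or drop indicators, but a large gap at the moment of the crash is at least consistent with the aggregator still working through the alienvault indicators when the nodes stopped.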

06-07-2018 10:33 AM

More data to process...

The issue seems to lie with that alienvault feed. If I remove it, I can add all the miners I want and connect them to the aggregator. Right now I have had 10 miners running without issues. If anyone can identify the issue with the alienvault feed I will pop it back in there, but for now I am just happy to have some hc and mc lists working.

07-09-2018 07:44 AM

This weekend I spun up a new CentOS VM and did a fresh install of Minemeld using ansible, then I added the alienvault.reputation feed to it and saw the same behavior I am seeing in Minemeld on the corp network. The alienvault feed starts to poll, then all the nodes die and Minemeld becomes dead weight.

This leads me to believe something with the ansible install or with CentOS does not agree or play nice with something in the alienvault.reputation feed.

What I can confirm:

* All works great with the Ubuntu OVA setup no issues at all

* Breaks when adding alienvault reputation feed on an ansible installed CentOS setup

* I have reached the end of my troubleshooting abilities on this one. If anyone wants to take a crack at it, all the logs and info should be in this thread, and I can provide more details if needed

07-10-2018 04:34 PM

Hi Shawn,

I'm going to try to replicate your MM installation on CentOS 7.

Did you use this ansible playbook?

If so, did you keep "minemeld_version: develop" or "Stable" ?

07-10-2018 04:56 PM

Yes, that is the playbook I used. Fresh install of latest CentOS 7 and used the stable minemeld branch. It should install without issues but you might run into an error with an obsolete package that you need to manually install and then start the ansible install again and it will finish up pretty quickly.

Once everything is up and running try adding the alienvault.reputation feed and see how it goes.

Good luck! Let us know how it goes

07-10-2018 10:35 PM - edited 07-10-2018 10:36 PM

Tonight I finished building this out in my lab: CentOS 7 and the ansible 'stable' minemeld branch. I added the alienvault.reputation feed, and sure enough it killed off most of the four other miners when it hit ~15-18k indicators, which is about 3-5 minutes after the engine started. Restarting the engine restarts all miners, but the failure mode is consistent. The alienvault.reputation miner never advances past that number (or completes polling), despite the feed having around 60k indicators (as seen on a successful poll in my Ubuntu MM). Interesting. The feed works fine in my Ubuntu MM with just 1 GB of RAM and 1 core (and about 20 other feeds).

I noted that this miner uses Class: minemeld.ft.csv.CSVFT, and I wonder if it's an issue with that class on CentOS. To check, I found another miner using this class (bambenekconsulting.c2_ipmasterlist) to see if the behavior is the same, but it completes polling successfully. It has only 437 indicators, so next I'll try a feed of the same class with more indicators, closer to the scale of alienvault. This will be a bit of trial-and-error work.
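One way to separate "CSV class problem" from "scale problem" would be a synthetic feed whose size can be dialed up and down. The sketch below only generates the data: consecutive addresses from the private 10.0.0.0/8 range, one per line. The function names and the file layout are hypothetical, the real alienvault feed's column layout is not reproduced, and whether a given miner and aggregator configuration accepts these addresses has not been verified.

```python
import ipaddress


def synthetic_indicators(count, start="10.0.0.0"):
    """Yield `count` consecutive, unique IPv4 addresses beginning at `start`."""
    base = int(ipaddress.IPv4Address(start))
    for offset in range(count):
        yield str(ipaddress.IPv4Address(base + offset))


def write_feed(path, count):
    """Write `count` synthetic indicators to `path`, one per line."""
    with open(path, "w") as fh:
        for indicator in synthetic_indicators(count):
            fh.write(indicator + "\n")
    return count


# Small demonstration; a real test would use tens of thousands of entries.
print(list(synthetic_indicators(3)))
```

Calling `write_feed("feed.csv", 5000)`, then 10000, 20000, and so on, and pointing a test miner at the served file, would show roughly where the failures begin.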

Another option I found is to try running it in Docker, which contains all the necessary dependencies in the container: https://hub.docker.com/r/jtschichold/minemeld/ but I'm out of time tonight. I'll take a look at this later this week, unless you want to take a first pass, @hshawn.

Cheers

07-11-2018 03:57 PM

I did try other miners of the same class, and they worked without incident, but were all small. So perhaps it's scale related. I created a new miner (not a clone) to temporarily mine the alienvault.reputation database from a website I control. This new miner crashed in the same way the included alienvault.reputation one did.

I noted minemeld-traced.log is non-zero bytes, and upon looking in there it seems the engine crashes due to unhandled exceptions.

2018-07-11T00:43:37 (8424)storage.remove_reference WARNING: Attempt to remove non existing reference: 2e5b0b9c-c47b-44

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/greenlet.py", line 327, in run

result = self._run(*self.args, **self.kwargs)

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 561, in _ioloop

conn.drain_events()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 323, in drain_events

return amqp_method(channel, args)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 529, in _close

(class_id, method_id), ConnectionError)

ConnectionForced: (0, 0): (320) CONNECTION_FORCED - broker forced connection closure with reason 'shutdown'

<Greenlet at 0x7fb4e5de5230: <bound method AMQP._ioloop of <minemeld.comm.amqp.AMQP object at 0x7fb4e5e021d0>>(0)> fai

Everything begins to fall apart in the minemeld-engine.log here (before this timestamp things appear healthy):

2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 90 > 74 - dropped

2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 21 > 20 - dropped

2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 20 > 19 - dropped

2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 22 > 20 - dropped

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/greenlet.py", line 327, in run

result = self._run(*self.args, **self.kwargs)

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 561, in _ioloop

conn.drain_events()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 323, in drain_events

return amqp_method(channel, args)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/channel.py", line 241, in _close

reply_code, reply_text, (class_id, method_id), ChannelError,

NotFound: Basic.publish: (404) NOT_FOUND - no exchange 'inboundaggregator' in vhost '/'

<Greenlet at 0x7f7939978190: <bound method AMQP._ioloop of <minemeld.comm.amqp.AMQP object at 0x7f793b0d1d10>>(11)> fa

2018-07-11T00:39:17 (9741)amqp._ioloop_failure ERROR: _ioloop_failure: exception in ioloop

Traceback (most recent call last):

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 567, in _ioloop_failure

g.get()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gevent/greenlet.py", line 251, in get

raise self._exception

NotFound: Basic.publish: (404) NOT_FOUND - no exchange 'inboundaggregator' in vhost '/'

2018-07-11T00:39:17 (9741)chassis.stop INFO: chassis stop called

When comparing this to my Ubuntu MM, I noted that there are errors, but no 'clock' errors in the logs. I started poking around in the python to see how clock is used, but am now out of time again. Documenting all of this here before it slips out of my mind.
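To compare the two installs, it may help to extract the `old clock` drops and measure how long after the first one the chassis stops. This is a minimal sketch assuming the log format quoted above. It only parses the two numbers and does not interpret what they mean inside MineMeld. `SAMPLE` holds lines copied from this post.

```python
import re
from datetime import datetime

TS_FORMAT = "%Y-%m-%dT%H:%M:%S"

OLD_CLOCK = re.compile(
    r"^(?P<ts>\S+) \(\d+\)mgmtbus\._merge_status ERROR: "
    r"old clock: (?P<left>\d+) > (?P<right>\d+) - dropped"
)
CHASSIS_STOP = re.compile(
    r"^(?P<ts>\S+) \(\d+\)chassis\.stop INFO: chassis stop called"
)


def clock_drop_timeline(lines):
    """Return (drops, stop_time); drops is a list of (time, left, right)."""
    drops = []
    stop_time = None
    for line in lines:
        line = line.strip()
        match = OLD_CLOCK.match(line)
        if match:
            when = datetime.strptime(match.group("ts"), TS_FORMAT)
            drops.append((when, int(match.group("left")), int(match.group("right"))))
            continue
        match = CHASSIS_STOP.match(line)
        if match and stop_time is None:
            stop_time = datetime.strptime(match.group("ts"), TS_FORMAT)
    return drops, stop_time


# Lines copied from the engine log in this post.
SAMPLE = """\
2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 90 > 74 - dropped
2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 21 > 20 - dropped
2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 20 > 19 - dropped
2018-07-11T00:38:59 (9727)mgmtbus._merge_status ERROR: old clock: 22 > 20 - dropped
2018-07-11T00:39:17 (9741)chassis.stop INFO: chassis stop called
""".splitlines()

drops, stop_time = clock_drop_timeline(SAMPLE)
seconds_to_stop = (stop_time - drops[0][0]).total_seconds()
print(len(drops), "drops;", seconds_to_stop, "seconds from first drop to chassis stop")
```

Running the same function over the Ubuntu log should return an empty `drops` list if, as noted above, no clock errors appear there.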

07-12-2018 07:55 AM

I gave the docker image a shot yesterday. It seems to still be a work in progress: it did come up, but logging into minemeld was not working, so I shelved it and went back to poking at the CentOS setup. It sounds like you are going down some rabbit holes with this, @tyreed. Don't stare at it too long, your vision may blur 😉

07-13-2018 05:26 AM

Hi @tyreed,

I have fixed this issue in the latest version of minemeld-ansible. Could you try reinstalling the instance with the latest minemeld-ansible playbook, please?

07-13-2018 07:58 AM - edited 07-13-2018 08:10 AM

@lmori I took the following steps this morning:

* refresh git repo

* re-run ansible playbook

* reboot server

* add alienvault reputation

Results: it looked like it was going to be happy, but after about a minute all the nodes stopped and the alienvault poll stopped at around 17k (there are usually around 50k in there).

After removing alienvault and committing, everything is happy again (but it takes about 8 minutes to restart the engine when this happens). Is there a better way to update the system? Should I completely wipe out the previous install and redo it from zero?

TIA!

Errors in the log around the time of this event (possibly unrelated):

[2018-07-13 07:41:04 PDT] [887] [ERROR] Exception on /status/minemeld [GET]

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1988, in wsgi_app

response = self.full_dispatch_request()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1641, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1544, in handle_user_exception

reraise(exc_type, exc_value, tb)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1639, in full_dispatch_request

rv = self.dispatch_request()

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1625, in dispatch_request

return self.view_functions[rule.endpoint](**req.view_args)

File "/opt/minemeld/engine/core/minemeld/flask/aaa.py", line 125, in decorated_view

return f(*args, **kwargs)

File "/opt/minemeld/engine/core/minemeld/flask/aaa.py", line 135, in decorated_view

return f(*args, **kwargs)

File "/opt/minemeld/engine/core/minemeld/flask/statusapi.py", line 171, in get_minemeld_status

status = MMMaster.status()

File "/opt/minemeld/engine/core/minemeld/flask/mmrpc.py", line 51, in status

return self._send_cmd('status')

File "/opt/minemeld/engine/core/minemeld/flask/mmrpc.py", line 41, in _send_cmd

self._open_channel()

File "/opt/minemeld/engine/core/minemeld/flask/mmrpc.py", line 38, in _open_channel

self.comm.start()

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 595, in start

c = amqp.connection.Connection(**self.amqp_config)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 165, in __init__

self.transport = self.Transport(host, connect_timeout, ssl)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/connection.py", line 186, in Transport

return create_transport(host, connect_timeout, ssl)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/transport.py", line 299, in create_transport

return TCPTransport(host, connect_timeout)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/amqp/transport.py", line 95, in __init__

raise socket.error(last_err)

error: [Errno 111] Connection refused

[2018-07-13 07:41:05 +0000] [887] [ERROR] Error handling request

Traceback (most recent call last):

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gunicorn/workers/async.py", line 52, in handle

self.handle_request(listener_name, req, client, addr)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gunicorn/workers/ggevent.py", line 159, in handle_request

super(GeventWorker, self).handle_request(*args)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/gunicorn/workers/async.py", line 105, in handle_request

respiter = self.wsgi(environ, resp.start_response)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 2000, in __call__

return self.wsgi_app(environ, start_response)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1996, in wsgi_app

ctx.auto_pop(error)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/ctx.py", line 387, in auto_pop

self.pop(exc)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/ctx.py", line 376, in pop

app_ctx.pop(exc)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/ctx.py", line 189, in pop

self.app.do_teardown_appcontext(exc)

File "/opt/minemeld/engine/current/lib/python2.7/site-packages/flask/app.py", line 1898, in do_teardown_appcontext

func(exc)

File "/opt/minemeld/engine/core/minemeld/flask/mmrpc.py", line 207, in teardown

g.MMMaster.stop()

File "/opt/minemeld/engine/core/minemeld/flask/mmrpc.py", line 55, in stop

self.comm.stop()

File "/opt/minemeld/engine/core/minemeld/comm/amqp.py", line 685, in stop

self.rpc_out_channel.close()

AttributeError: 'NoneType' object has no attribute 'close'

Update: I tried not adding alienvault to the aggregator until it finished polling. The alienvault node will completely poll and finish if it is not added to the aggregator, however once it is added then that is when the nodes get killed.
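The `[Errno 111] Connection refused` in the traceback above comes from the API trying to open an AMQP connection, which suggests the broker was not accepting connections at that moment. A quick way to check this while the nodes are dying is a plain TCP probe. This is a sketch only: 5672 is the standard AMQP port, but whether this install uses it, and on which host, is an assumption.

```python
import socket


def broker_reachable(host="127.0.0.1", port=5672, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False
```

Calling `broker_reachable()` in a loop while adding the alienvault miner to the aggregator would show whether the broker goes away before or after the nodes stop.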
