Terraform NGFW provider failing to get token for CloudFirewallAdmin

04-28-2022 01:49 AM - edited 04-28-2022 06:32 AM

Hi,

I am trialling out the TF provider in this repo and I have successfully built the provider locally. I am able to configure it as per the settings mentioned in the doc. To give a brief overview, I have

- Subscribed to Palo NGFW in AWS Marketplace

- Added our sandbox AWS account to Palo NGFW and ran the CloudFormation template, which creates cross-account IAM roles.

- Enabled Programmatic access and created the IAM role mentioned in the docs to grant access to API Gateway. I have also tagged the IAM role with the following tags, as mentioned in the other ticket:

Key=NGFWaasRole, Value=CloudFirewallAdmin

Key=NGFWaasRole, Value=CloudRulestackAdmin

- Granted sts:AssumeRole permission so that any authenticated user in my sandbox account can assume the above role.

- After setting up all of this, I supply the following config values to the provider

host: "api.us-east-1.aws.cloudngfw.paloaltonetworks.com"

region: "us-east-1"

arn: "<The ARN of the API Gateway IAM role I set up in step 3>"
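For reference, those three values map onto a provider block roughly as follows. This is a minimal sketch, assuming the provider's local name is `cloudngfwaws` (as in the data source used later in this thread); the variable `ngfw_api_role_arn` is a hypothetical name introduced here for illustration.

```hcl
# Sketch only: host, region and arn are the three settings quoted above.
variable "ngfw_api_role_arn" {
  type        = string
  description = "ARN of the IAM role tagged for Cloud NGFW programmatic access" # hypothetical variable
}

provider "cloudngfwaws" {
  host   = "api.us-east-1.aws.cloudngfw.paloaltonetworks.com"
  region = "us-east-1"
  arn    = var.ngfw_api_role_arn
}
```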

The provider initialises successfully when I run terraform init; however, when I run terraform plan, it errors as per the screenshot below

I am not familiar with Go, so I can only speculate, but the code for the NGFW client is here, and it looks as though the client is failing to execute steps 8 and 9 mentioned here

Another thing to note is that the tags mentioned in step 5 of the above article are different from the ones you have mentioned in the linked ticket above. Is there any reason for this difference? Also, the GitHub repo linked in step 6 has a broken link, so I cannot view the CFT examples.

Any help would be appreciated since I am now effectively blocked in automating the NGFW firewall creation.

Regards,

Shreyas

05-04-2022 10:16 AM

Thanks for the info. I have run terraform plan multiple times and the rulestack commit status is “PreCommitDone”. I am unable to delete it from the UI since it gives the error in the previously posted screenshot. I will look at moving commit_rulestack to a separate plan. Does that mean the NGFW resource will need to be in its own plan as well? It depends on the rulestack being committed before the NGFW can be deployed successfully.

05-04-2022 10:22 AM

...huh. Is the rulestack associated with an NGFW which you've spun up? If so, you might have to bring down / delete that NGFW before you can delete the rulestack.

05-04-2022 10:43 AM - edited 05-04-2022 10:51 AM

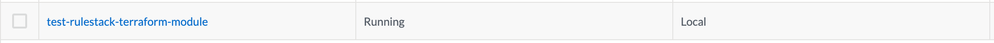

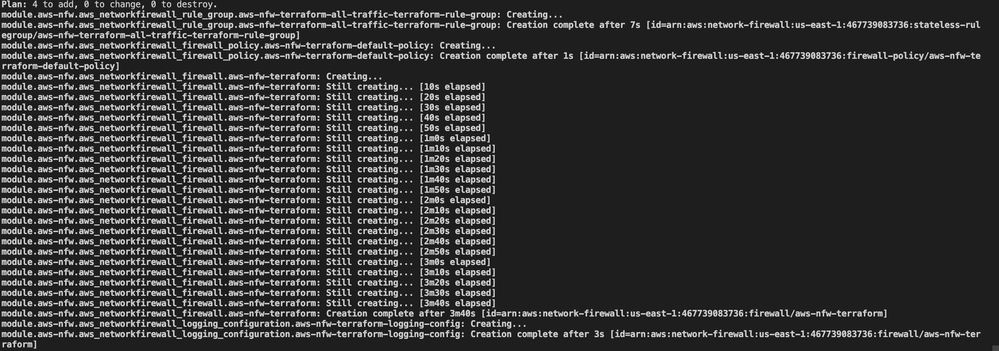

Yes. If you look at the terraform plan output from previous posts, I am attempting to create a barebones firewall, with a rulestack that has a single security rule.

So, in my head I understand the execution sequence as

1. Rule is created

2. Rulestack is created with the above rule

3. Rulestack needs to be committed.

4. Rulestack is associated with the NGFW

5. NGFW is created.

In my case, I believe I am stuck at step 3. Rulestack is not properly committed, and hence the first time around, NGFW creation in “tf apply” ran for 5 minutes and 30 seconds before timing out. Now I am not sure if “tf apply” on NGFW was waiting internally on Commit_Rulestack to finish or it was waiting for its own creation.

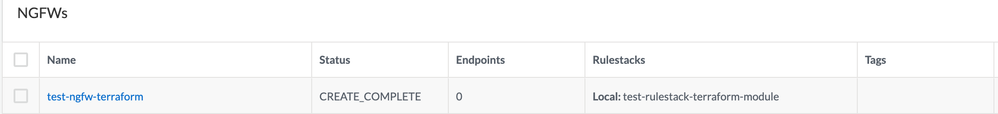

Another thing to note is that in the UI the NGFW I created via Terraform appeared in CREATE_FAIL status (after the 5.5-minute timeout).

I was able to delete the firewall in the UI, but unable to delete the rule stack.

I will investigate creation of separate tfstate/plan for rulestack and commit_rulestack.

05-05-2022 08:56 AM - edited 05-05-2022 08:57 AM

@gfreeman The way in which resources get created "outside" Terraform is not helping at all. I was able to create the rulestack and firewall, yet when I try to create VPC endpoints on my side using the VPC endpoint service for the NGFW, I get

│ Error: Error(1): Commit process unfinished.

In the UI, everything looks ok

Below is my terraform code

    # Look up the existing NGFW by name
    data "cloudngfwaws_ngfw" "palo-ngfw" {
      name = var.ngfw-name
    }

    # Resolve the VPC endpoint service that the NGFW exposes
    data "aws_vpc_endpoint_service" "palo-ngfw-vpce-service" {
      service_name = data.cloudngfwaws_ngfw.palo-ngfw.endpoint_service_name
    }

    # One endpoint per private subnet in the inspection VPC
    resource "aws_vpc_endpoint" "palo-ngfw-vpce-private-subnet-1" {
      vpc_id              = var.inspection-vpc-id
      service_name        = data.aws_vpc_endpoint_service.palo-ngfw-vpce-service.service_name
      vpc_endpoint_type   = data.aws_vpc_endpoint_service.palo-ngfw-vpce-service.service_type
      subnet_ids          = [var.inspection-vpc-private-subnet-1-id]
      private_dns_enabled = false
      tags = {
        "Name" = "palo-ngfw-vpce-private-subnet-1"
      }
    }

    resource "aws_vpc_endpoint" "palo-ngfw-vpce-private-subnet-2" {
      vpc_id              = var.inspection-vpc-id
      service_name        = data.aws_vpc_endpoint_service.palo-ngfw-vpce-service.service_name
      vpc_endpoint_type   = data.aws_vpc_endpoint_service.palo-ngfw-vpce-service.service_type
      subnet_ids          = [var.inspection-vpc-private-subnet-2-id]
      private_dns_enabled = false
      tags = {
        "Name" = "palo-ngfw-vpce-private-subnet-2"
      }
    }

    # Send all traffic from each TGW subnet route table to its endpoint
    resource "aws_route" "inspection-rtb-tgw-subnet-1" {
      route_table_id         = var.inspection-vpc-tgw-rtb-subnet-1-id
      destination_cidr_block = "0.0.0.0/0"
      vpc_endpoint_id        = aws_vpc_endpoint.palo-ngfw-vpce-private-subnet-1.id
    }

    resource "aws_route" "inspection-rtb-tgw-subnet-2" {
      route_table_id         = var.inspection-vpc-tgw-rtb-subnet-2-id
      destination_cidr_block = "0.0.0.0/0"
      vpc_endpoint_id        = aws_vpc_endpoint.palo-ngfw-vpce-private-subnet-2.id
    }

What am I missing?

05-09-2022 09:36 AM - edited 05-09-2022 09:42 AM

This feels like TAC territory again to me... You are in a situation where even using the GUI is not working / allowing you to recover.

I am honestly not very familiar with the AWS config, so I won't be able to provide useful feedback on that part of it, sorry.

But to double back on what I was saying before: you'll want to have 3 separate directories for your Terraform config:

dir1) All the code that defines both the rulestack and its contents (prefix lists, intelligent feeds, etc)

dir2) cloudngfwaws_commit_rulestack only

dir3) All your cloudngfwaws_ngfw instances

-- Edit --

Forgot to mention: and you only run the Dir3 terraform code if the commit shows as successfully committed from Dir2 (check using terraform show). Currently the cloudngfwaws_commit_rulestack only returns an error if issuing the commit command fails, not if the commit itself fails. It's possible that this will change before the provider exits beta, but this is the current implementation.
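As a rough sketch of what dir2 could contain: the block below assumes the commit resource takes the rulestack name in a `rulestack` argument and exposes a read-only `commit_status` attribute. Both are assumptions to verify against the docs for your build of the provider, and the variable `rulestack_name` is a hypothetical name.

```hcl
# dir2/main.tf (sketch: only the commit lives in this directory)
variable "rulestack_name" {
  type        = string
  description = "Name of the rulestack defined in dir1" # hypothetical variable
}

resource "cloudngfwaws_commit_rulestack" "this" {
  rulestack = var.rulestack_name # assumed argument name
}

# Assumption: commit_status is exported by the resource.
# Surfacing it as an output makes the manual check a one-liner.
output "commit_status" {
  value = cloudngfwaws_commit_rulestack.this.commit_status
}
```

After `terraform apply` in dir2, `terraform output commit_status` (or `terraform show`) prints the recorded status; a later `terraform apply -refresh-only` re-reads it if the commit was still in progress.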

05-12-2022 02:45 AM

Thanks @gfreeman, but that does not sound "fully automated" to me. It is more semi-automated: I have to run some Terraform code, manually run terraform show (or write some grep commands to search for the correct status), and then run the next batch of Terraform code. Is it not possible to fully automate this (for example by polling the API to see if the rulestack was committed, then creating the firewall, etc.)? For example, if I attempt to create AWS Network Firewall, it's just as simple as that

05-12-2022 08:53 AM

That's fair, the way it is now you have to check the status manually and then conditionally launch the 3rd directory's terraform apply. This is why I said that it's possible that the cloudngfwaws_commit_rulestack resource could change between now and launch to error on commit failure, so that you could at least pair the cloudngfwaws_ngfw resources in the same plan file as the commit. This would get the workflow down from three directories to two directories....

But because of the commit, there really isn't a way to have the commit be in the same plan file as the rulestack configuration.

05-12-2022 11:01 AM

Thanks. Is it possible to add dependency on the commit_rulestack resource before creating NGFW? This way NGFW resource waits on commit_rulestack success, which reflects in the plan “creation complete” and then NGFW creation starts. That’s how I imagine it working. Maybe I need to look at AWS provider code to see how they implement this dependency wait.

05-12-2022 03:31 PM

Yes, I think this is possible, and it is the modification that I think might go in before the provider is officially released.

Right now the polling for commit verification is in the Read stage of the provider. If that is also moved into the Create and Update steps, then the resource ensures that the commit must succeed or else the resource will error out. Then, when specifying the rulestack in the cloudngfwaws_ngfw resource, you reference the rulestack from the cloudngfwaws_commit_rulestack resource to create the dependency.

You'll still need two terraform directories, I think... Otherwise users will need to manually build up an explicit depends_on and put that inside the cloudngfwaws_commit_rulestack definition, and that won't be fun once there's even a couple hundred resources per rulestack, much less thousands.
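To illustrate the reference described above, here is a minimal sketch of the two-directory variant, with the commit and the firewall in the same directory. The `cloudngfwaws_ngfw` arguments shown (`vpc_id`, `account_id`, `endpoint_mode`, `subnet_mapping`) are written from memory of the provider docs and should be treated as assumptions; all variable names are hypothetical.

```hcl
# Sketch: rulestack contents are defined in a separate directory.
variable "rulestack_name" { type = string }
variable "ngfw_name" { type = string }
variable "vpc_id" { type = string }
variable "account_id" { type = string }
variable "endpoint_mode" { type = string }
variable "subnet_id" { type = string }

resource "cloudngfwaws_commit_rulestack" "this" {
  rulestack = var.rulestack_name
}

resource "cloudngfwaws_ngfw" "this" {
  name          = var.ngfw_name
  vpc_id        = var.vpc_id
  account_id    = var.account_id
  endpoint_mode = var.endpoint_mode

  subnet_mapping {
    subnet_id = var.subnet_id
  }

  # Reading the name from the commit resource (not from var.rulestack_name)
  # gives Terraform an implicit dependency: commit first, then firewall.
  rulestack = cloudngfwaws_commit_rulestack.this.rulestack
}
```

The implicit dependency only orders the two resources. It guarantees a successful commit only once the commit resource itself errors on commit failure, as discussed above.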

05-13-2022 01:45 AM

@gfreeman That sounds like a much better solution. If the NGFW resource references rulestack name from the commit_rulestack attributes, then that should automatically add an implicit dependency on commit_rulestack before NGFW resource creation is kicked off. I think creation should be cascaded:

1. security_rule is created. The create and update of security_rule monitors successful creation and fails if it does not go through.

2. rulestack is created. The create and update of rulestack monitors successful creation and fails if it does not go through.

3. same as above for commit_rulestack.

4. Finally, same as above for NGFW. This way the apply fails at whichever point the async creation fails. (Most of the Palo resources seem to be generated asynchronously, even when using the UI: you can create a rulestack in the UI and it takes a few seconds or more to create it, then you have the async validate/commit. Ditto for the firewall.)
