MineMeld to Extract Indicators From generic API


on 07-07-2018 01:57 AM - edited on 12-14-2021 07:42 AM by jforsythe

Note: Palo Alto Networks made an end-of-life announcement about the MineMeld™ application in AutoFocus™ on August 1, 2021. Some of the below information may be outdated. Please read this article to learn about our recommended migration options.

Introduction

Although MineMeld was conceived as a threat sharing platform, in practice many users take advantage of its open and flexible engine to extract dynamic data (not threat indicators) from generic APIs. For example:

- The highly successful case of extracting O365 dynamic data (IPs, domains and URLs) from its public-facing API endpoint.

- Many users rely on MineMeld to track the public IP space of cloud and CDN providers like AWS, Azure, CloudFlare and Fastly, as a much more robust and scalable alternative to mapping them with FQDN objects.

- Some people even use MineMeld to extract the list of URLs of videos published in specific YouTube playlists or channels via the corresponding Google API.

All these are examples of MineMeld being used to extract dynamic data from public APIs.

Depending on the source, a new class (Python code) may be needed to implement the client-side logic of the API we want to mine. In many cases, however, the ready-to-consume "generic classes" can be used instead. This way the user can "mine" a generic API without having to dive into contributing code to the GitHub project.

The "generic classes"

There are, basically, three "generic classes" that can be reused in many applications:

- The HTTPFT class: Create a prototype for this class when you need to extract dynamic data from content delivered in HTML or PlainText (text/plain, text/html)

- The SimpleJSON class (I love this one!): Do you need to extract dynamic data from an API that delivers the response as a JSON document? You're all set with a prototype of this class!

- The CSVFT class: Some services still use variants of CSV (delimiter-based multi-field lines) to deliver their content.

The following rule of thumb will tell you whether the API you want to extract dynamic data from can be "mined" with MineMeld by providing just a prototype for one of these classes (without writing a single line of code!):

- The transport must be HTTP/HTTPS

- None or basic authentication (user + password)

- Single transaction (one call retrieves the whole indicator list – no pagination)

- Indicators are provided in plain, html, csv or json format.
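The rule of thumb can be condensed into a small decision helper. This is only an illustrative sketch: the function name `pick_generic_class`, its parameters and the content-type mapping are assumptions derived from the lists above, not part of MineMeld.

```python
# Hypothetical helper (not part of MineMeld): maps a response Content-Type
# to the generic class this article suggests for it.
GENERIC_CLASS_BY_CONTENT_TYPE = {
    "text/csv": "CSVFT",
    "application/json": "SimpleJSON",
    "text/plain": "HTTPFT",
    "text/html": "HTTPFT",
}


def pick_generic_class(url, content_type, auth="none", paginated=False):
    """Return the generic class to prototype, or None if custom code is needed."""
    if not url.lower().startswith(("http://", "https://")):
        return None  # the transport must be HTTP/HTTPS
    if auth not in ("none", "basic"):
        return None  # only no authentication or basic authentication
    if paginated:
        return None  # the whole indicator list must come in a single call
    # Drop parameters such as "; charset=utf-8" before the lookup.
    media_type = content_type.split(";")[0].strip().lower()
    return GENERIC_CLASS_BY_CONTENT_TYPE.get(media_type)


print(pick_generic_class("https://test.minemeld.com/json", "application/json"))
```

A `None` result means the API falls outside the rule of thumb and would probably need its own class.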

The following sections teach you how to use these generic classes to mine an example API that provides the real-time temperature of a few MineMeld-related cities in the world:

| Format | API URL |
|--------|---------|
| CSV | https://test.minemeld.com/csv |
| HTML | https://test.minemeld.com/html |
| JSON | https://test.minemeld.com/json |

Mining a CSV API

We will start with CSV because it is probably the easiest of the generic classes. The theory of operations is:

- The CSVFT class performs an HTTP/S API call with no (or basic) authentication. The expected result is a table-like document in which every line contains an indicator plus additional attributes separated by a known delimiter.

- Before the CSV parser kicks in, a regex pattern is used to discard lines that should not be processed (e.g. comments).

- The prototype provides the configuration elements the CSV parser needs to extract the fields from each line correctly.

First of all, let's call the demo CSV API and analyze the results:

Request ->

GET /csv HTTP/1.1

Host: test.minemeld.com

Response Headers <-

HTTP/2.0 200 OK

content-type: text/csv

content-disposition: attachment; filename="minemeldtest.csv"

content-length: 432

Response Body <-

# Real-Time temperature of MineMeld-related cities in the world.

url,country,region,city,temperature

https://ajuntament.barcelona.cat/turisme/en/,ES,Catalunya,Barcelona,12.24

http://www.turismo.comune.parma.it/en,IT,Emilia-Romagna,Parma,16.03

http://santaclaraca.gov/visitors,US,California,Santa Clara,8.98

- The API returns text/csv content and suggests storing the result as an attachment named minemeldtest.csv.

- Regarding the content, 3 data records are provided with 5 fields each: url, country, region, city and temperature. There are also two lines that do not provide any value and should be discarded (the comment line and the line with the field headers).

- As for the CSV parsing task, the fields are clearly delimited by the comma character.

We're now ready to configure our prototype to mine this API with the CSVFT class.

Step 1: Create a new prototype using any CSVFT-based one as starting point.

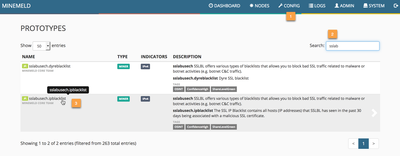

We will use the prototype named "sslabusech.ipblacklist" as our starting point. Just navigate to the config panel, click on the lower right icon (the one with the three lines) to expose the prototype library and click on the sslabusech one.

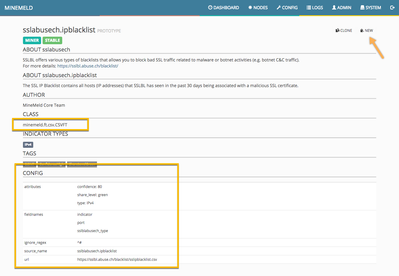

Clicking on the sslabusech prototype will reveal its configuration as shown in the following picture.

The most important value in the prototype is the class it applies to: in this case, the CSVFT class we want to leverage. Our mission is to create a new prototype and change its configuration so that it mines the demo CSV API. The following is the set of changes we will introduce:

- Name, Description and Tags (to make it searchable in the prototype library)

- Inside the CONFIG section:

  - Replace url with https://test.minemeld.com/csv

  - Change the indicator type to URL and set the confidence level to 100

  - Provide our own set of fieldnames

  - Define the ignore_regex pattern as "^(?!http)" (to discard all lines except the ones starting with "http"; the sample response contains both http:// and https:// URLs)

  - Set source_name to minemeld-test

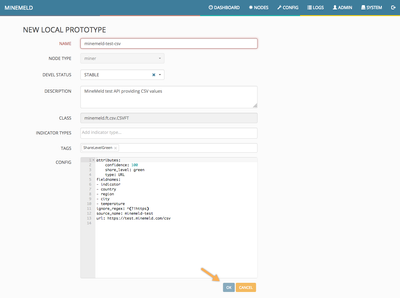

Simply click on the NEW button and modify the prototype as shown in the following picture.

Please take a closer look at the fieldnames list and notice that the first name in our prototype list is "indicator" (in the CSV body, the header line calls the first field "url" instead). The CSV engine inside the CSVFT class extracts all comma-separated values from each line and uses the one in the column named "indicator" as the indicator value. Every other field is extracted and attached to the indicator as an additional attribute.
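The filtering and field extraction described above can be approximated in a few lines of plain Python. This is a minimal sketch of the idea, not the CSVFT implementation: `mine_csv` is a hypothetical name, the body is copied from the sample response, and the ignore pattern is written as `^(?!http)` so that the `http://` rows of the sample are kept too.

```python
import csv
import re

# Response body of the demo CSV API, as shown earlier in this article.
BODY = """# Real-Time temperature of MineMeld-related cities in the world.
url,country,region,city,temperature
https://ajuntament.barcelona.cat/turisme/en/,ES,Catalunya,Barcelona,12.24
http://www.turismo.comune.parma.it/en,IT,Emilia-Romagna,Parma,16.03
http://santaclaraca.gov/visitors,US,California,Santa Clara,8.98
"""

FIELDNAMES = ["indicator", "country", "region", "city", "temperature"]
# Matches every line that does NOT start with "http"; matched lines are ignored.
IGNORE_REGEX = re.compile(r"^(?!http)")


def mine_csv(body):
    # 1) Drop the lines matched by the ignore pattern (comment and header).
    lines = [line for line in body.splitlines() if not IGNORE_REGEX.match(line)]
    # 2) Parse what is left, naming the columns after our fieldnames list.
    indicators = {}
    for row in csv.DictReader(lines, fieldnames=FIELDNAMES, delimiter=","):
        indicator = row.pop("indicator")
        indicators[indicator] = row  # remaining columns become attributes
    return indicators


for indicator, attributes in mine_csv(BODY).items():
    print(indicator, attributes)
```

Renaming the first column to "indicator" is what tells the parser which value to promote; the header line of the feed itself is discarded before parsing.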

Step 2: Clone the prototype as a working node (miner) in the MineMeld engine

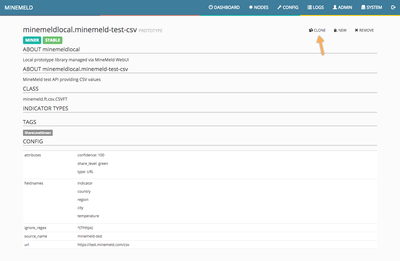

Clicking on OK will store this brand new prototype into the library and the browser will be sent to it. Just change the search field to reveal our csv prototype and then click on it.

Now it is time to clone this prototype as a working node in the MineMeld engine. Just click on the CLONE button, give the new miner node a name and commit the new configuration.

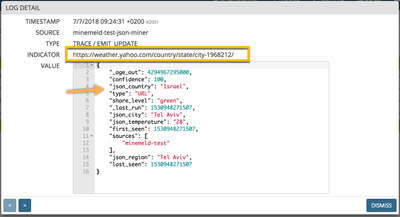

Step 3: Verify the node status.

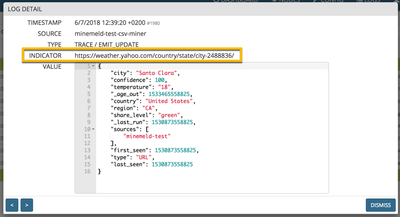

Once the engine restarts you should see a new node in your MineMeld engine with one indicator per data record in the feed. Click on it, then click on its LOG button and, finally, click on any log entry to reveal the indicator details.

As shown in the last picture, the extracted indicators are of type URL, and additional attributes like city, region, country and temperature are attached to them.

Other optional configuration parameters supported by the CSVFT class are:

- fieldnames: if it is null, the values extracted from the first parsed line are used as field names (remember that one of the fields must be named "indicator")

- delimiter, doublequote, escapechar, quotechar and skipinitialspace control the CSV parser behavior as described in the documentation of Python's csv module
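To get a feel for what the parser options do, the snippet below feeds one line to Python's own `csv` module. The sample line and URL are made up for illustration, and the sketch shows only the standard library behavior, not how CSVFT wires these options internally.

```python
import csv

# Hypothetical feed line: semicolon-delimited, a space after each delimiter,
# and a quoted value that itself contains the delimiter.
line = 'https://example.com/a; "Santa Clara; CA"; 8.98'

reader = csv.reader(
    [line],
    delimiter=";",          # field separator
    quotechar='"',          # wraps values that contain the delimiter
    skipinitialspace=True,  # ignore the blank right after each delimiter
)
row = next(reader)
print(row)
```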

Mining a HTML API

In this section you will be provided with steps needed to use the HTTPFT class to mine dynamic data exposed in the response to a HTTP request (typically text/plain or text/html). If you have not done so, please review the complete process described in the section "Mining a CSV API" to understand concepts like "creating a new prototype", "cloning a prototype as a working node", etc.

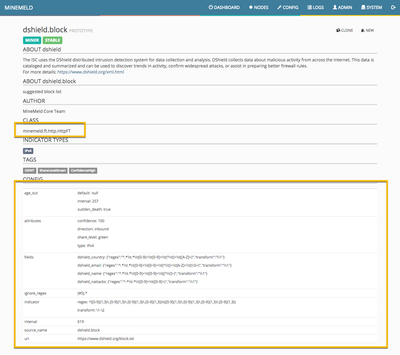

To build a new HTTPFT class we first need base prototype that already leverages this class. In this example we will use the prototype named dshield.block as the base.

Let's take a deeper look to the HTML API response to figure out how to generate a valid prototype to accomplish our mission.

Request ->

GET /html HTTP/1.1

Host: test.minemeld.com

Response Headers <-

HTTP/2.0 200 OK

content-type: text/html

content-length: 1626

Response Body <-

<!DOCTYPE html><html><head><link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.1.1/css/bootstrap.min.css" integrity="sha384-WskhaSGFgHYWDcbwN70/dfYBj47jz9qbsMId/iRN3ewGhXQFZCSftd1LZCfmhktB" crossorigin="anonymous"><title>Real-Time temperature of MineMeld-related cities in the world.</title></head><body><div class="container"><table class="table-striped">

<tr><td><code>city</code></td><td><code>country</code></td><td><code>region</code></td><td><code>temperature</code></td><td><code>url</code></td></tr>

<tr><td><code class="small">Barcelona</code></td><td><code class="small">ES</code></td><td><code class="small">Catalunya</code></td><td><code class="small">12.24</code></td><td><code class="small">https://ajuntament.barcelona.cat/turisme/en/</code></td></tr>

<tr><td><code class="small">Parma</code></td><td><code class="small">IT</code></td><td><code class="small">Emilia-Romagna</code></td><td><code class="small">16.03</code></td><td><code class="small">http://www.turismo.comune.parma.it/en</code></td></tr>

<tr><td><code class="small">Santa Clara</code></td><td><code class="small">US</code></td><td><code class="small">California</code></td><td><code class="small">8.98</code></td><td><code class="small">http://santaclaraca.gov/visitors</code></td></tr>

</table></div></body></html>

So, what do we have here? An HTML table whose rows are delivered one per line, with each value in its own table cell.

First of all we have to get rid of all lines not belonging to table rows. We can achieve this with the ignore_regex class configuration parameter.

ignore_regex: ^(?!<tr><td>)

Next, we need a regex pattern to extract and transform our values from each line. The HTTPFT class leverages Python's re module and accepts configuration parameters both for the indicator itself and any additional attribute. Any Regular Expression strategy will be valid. We will use the following one in this example:

(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)

It is a large expression with 10 capturing groups. The first capturing group (\1) extracts the first cell and the second capturing group (\2) the value inside that given cell. Group 3 extracts cell number 2 and group 4 the value inside that second cell. And so on.
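The group numbering can be checked with Python's `re` module on one row of the response shown above. This is a sketch under the assumption that a transform such as `\2` behaves like a `re` replacement template (emulated here with `match.expand`); it does not reproduce the HTTPFT code itself.

```python
import re

# One cell: outer group = whole cell, inner group = value inside the cell.
CELL = r'(<td><code class="small">([^<]+)<\/code><\/td>)'
ROW_REGEX = re.compile(CELL * 5)  # five cells -> ten capturing groups

# One table row from the HTML response shown above.
line = (
    '<tr><td><code class="small">Barcelona</code></td>'
    '<td><code class="small">ES</code></td>'
    '<td><code class="small">Catalunya</code></td>'
    '<td><code class="small">12.24</code></td>'
    '<td><code class="small">https://ajuntament.barcelona.cat/turisme/en/</code></td></tr>'
)

match = ROW_REGEX.search(line)
first_cell_value = match.expand(r"\2")   # value inside cell 1
fifth_cell_value = match.expand(r"\10")  # value inside cell 5
print(first_cell_value, fifth_cell_value)
```

Even-numbered groups hold the cell values, so the value of cell n is always group 2n. The header row never matches because its cells lack the `class="small"` attribute.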

In the response shown above the indicator (the URL) is in the fifth cell, so the corresponding configuration must be:

indicator:

regex: '(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)'

transform: \10

For the remaining attributes we can reuse the same regular expression with different transformations, following the column order of the table (city, country, region, temperature, url).

fields:

city:

regex: '(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)'

transform: \2

country:

regex: '(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)'

transform: \4

region:

regex: '(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)'

transform: \6

temperature:

regex: '(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)(<td><code class="small">([^<]+)<\/code><\/td>)'

transform: \8

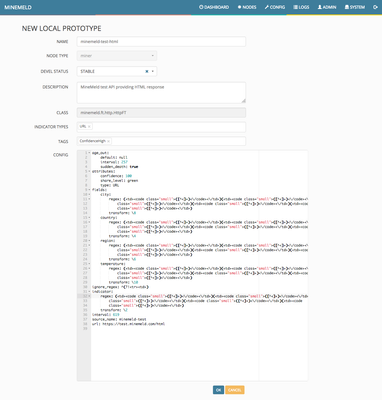

Combine all these configuration parameters into our desired HTTPFT class prototype as shown in the following picture.

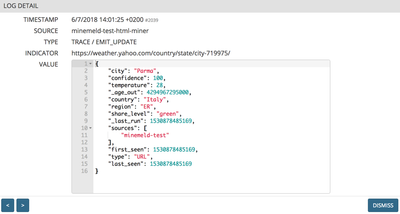

And, finally, clone this prototype as a working (miner) node into the MineMeld engine to verify it is working as expected.

Other optional configuration parameters supported by the HTTPFT class are:

- verify_cert: controls whether the SSL certificate should be verified (true, the default) or not (false)

- user_agent: sets a custom HTTP User-Agent string

- encoding: to deal with content that is not UTF-8 (the default).

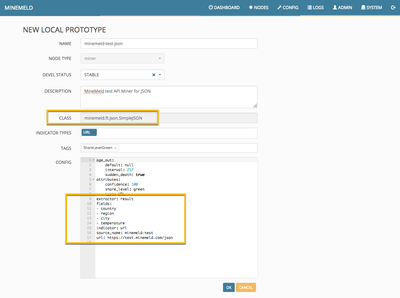

Mining a JSON API

(Please review the complete process described in the section "Mining a CSV API" to understand concepts like "creating a new prototype", "cloning a prototype as a working node", etc.)

The SimpleJSON class features a JMESPath engine to process any JSON document returned by the API call. Take your time to visit http://jmespath.org/ and follow the tutorial to be able to understand all concepts we're going to share in this section.

The following is the theory of operations for JSON miners:

- The node performs an unauthenticated (or basic-authenticated) HTTP/S request and expects a JSON document in the response.

- The document will be transformed with a JMESPath expression that MUST convert the original document into an array of simple objects (just key-value pairs).

- Finally, the indicator key will extract the main value and any additional field key will be extracted and attached as additional attributes to the indicator.

Let's take a closer look at the test JSON API we're going to mine:

Request ->

GET /json HTTP/1.1

Host: test.minemeld.com

Response Headers <-

HTTP/2.0 200 OK

content-type: application/json

content-length: 861

Response Body <-

{ "description": "Real-Time temperature of MineMeld-related cities in the world.", "result": [ { "url": "https://ajuntament.barcelona.cat/turisme/en/", "country": "ES", "region": "Catalunya", "city": "Barcelona", "temperature": 12.24 }, { "url": "http://www.turismo.comune.parma.it/en", "country": "IT", "region": "Emilia-Romagna", "city": "Parma", "temperature": 16.03 }, { "url": "http://santaclaraca.gov/visitors", "country": "US", "region": "California", "city": "Santa Clara", "temperature": 8.98 } ] }

The JSON document is quite simple and has an element (result) that already provides the array of objects we need. So, our JMESPath extractor will be:

extractor: result

You can check the expression on the JMESPath site to verify that, applied to the response above, it returns the following array of objects:

[

{

"url": "https://ajuntament.barcelona.cat/turisme/en/",

"country": "ES",

"region": "Catalunya",

"city": "Barcelona",

"temperature": 12.24

},

{

"url": "http://www.turismo.comune.parma.it/en",

"country": "IT",

"region": "Emilia-Romagna",

"city": "Parma",

"temperature": 16.03

},

{

"url": "http://santaclaraca.gov/visitors",

"country": "US",

"region": "California",

"city": "Santa Clara",

"temperature": 8.98

}

]

At this point we just need to identify the object attributes that contain 1) the indicator itself and 2) any additional attribute we want to attach to the indicator. In our case, the configuration for it will be:

indicator: url

fields:

- country

- region

- city

- temperature
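Put together, the extractor, indicator and fields settings behave roughly like the following plain-Python sketch. A dictionary lookup stands in for the JMESPath engine (it is what the expression `result` selects in this document), `mine_json` is a hypothetical name, and joining the "json" prefix to each field name with an underscore is an assumption of this sketch.

```python
import json

# Trimmed copy of the demo JSON API response shown above.
RESPONSE = """
{"description": "Real-Time temperature of MineMeld-related cities in the world.",
 "result": [
  {"url": "https://ajuntament.barcelona.cat/turisme/en/", "country": "ES",
   "region": "Catalunya", "city": "Barcelona", "temperature": 12.24},
  {"url": "http://www.turismo.comune.parma.it/en", "country": "IT",
   "region": "Emilia-Romagna", "city": "Parma", "temperature": 16.03}
 ]}
"""

INDICATOR = "url"
FIELDS = ["country", "region", "city", "temperature"]
PREFIX = "json"  # assumed attribute prefix; joined with "_" in this sketch


def mine_json(text):
    document = json.loads(text)
    items = document["result"]  # what the JMESPath expression `result` selects
    mined = {}
    for item in items:
        # Keep only the configured fields, renamed with the prefix.
        attributes = {
            f"{PREFIX}_{name}": item[name] for name in FIELDS if name in item
        }
        mined[item[INDICATOR]] = attributes
    return mined


for indicator, attributes in mine_json(RESPONSE).items():
    print(indicator, attributes)
```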

It is time to put all these configuration statements into a SimpleJSON class prototype. We can use, for example, the aws.AMAZON standard library prototype as the base.

Did you notice the "json" prefix in all extracted additional attributes? You can control that, and a few other behaviors of the class, with the following optional class configuration elements:

- prefix: the prefix added to the name of every additional attribute attached to the indicator.

- verify_cert: controls whether the SSL certificate should be verified (true, the default) or not (false).

- headers: a list that allows you to add additional headers to the HTTP request.

- client_cert_required, cert_file and key_file can be used to force an HTTP request with client certificate authentication.

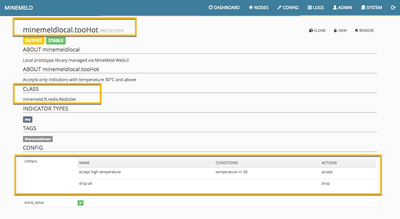

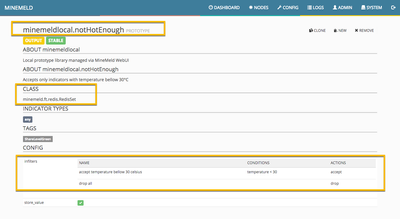

Bonus Track: using indicator attributes

Wondering why anyone would extract additional attributes from the feed and not just the indicator value?

Let's imagine we want to provide two feeds with URLs of cities:

- One of them will be called "time-to-beach" and will list URLs of cities where the temperature is over 30°C and whose population is probably getting ready to go to the beach or to outdoor swimming pools.

- The other one, called "no-beach-time-yet", will list cities with a current temperature below 30°C.

We can achieve that with the input and output filtering capabilities of the MineMeld engine nodes. Let me share with you a couple of screenshots of the prototypes that will do this job:

Clone each one of these two prototypes as working output nodes and connect their inputs to the JSON miner you created. That should build a graph like the one shown in the picture.
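The effect of the two output nodes can be pictured as a simple partition on the temperature attribute. The sketch below is plain Python, not MineMeld filter syntax; the URLs, the temperatures and the attribute name `json_temperature` are made up for illustration, and sending a value of exactly 30°C to "no-beach-time-yet" is a choice of this sketch.

```python
# Hypothetical indicators with made-up temperatures, for illustration only.
indicators = {
    "https://example.com/city-a": {"json_temperature": 31.5},
    "https://example.com/city-b": {"json_temperature": 24.0},
    "https://example.com/city-c": {"json_temperature": 30.0},
}

THRESHOLD = 30.0  # degrees Celsius


def split_by_temperature(items, threshold=THRESHOLD):
    """Return (time_to_beach, no_beach_time_yet) lists of indicators."""
    time_to_beach, no_beach_time_yet = [], []
    for indicator, attributes in items.items():
        if attributes["json_temperature"] > threshold:
            time_to_beach.append(indicator)
        else:
            no_beach_time_yet.append(indicator)
    return time_to_beach, no_beach_time_yet


hot, not_hot = split_by_temperature(indicators)
print("time-to-beach:", hot)
print("no-beach-time-yet:", not_hot)
```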

At the time of writing this article, only one of the cities in the feed is over 30°C.

GET /feeds/no-beach-time-yet HTTP/1.1

...

http://www.turismo.comune.parma.it/en

https://ajuntament.barcelona.cat/turisme/en/

----

GET /feeds/time-to-beach HTTP/1.1

...

http://santaclaraca.gov/visitors

Hey!

Just a quick question that maybe I didn't quite understand. If the HTML external list I'm going to use for my prototype is protected by a simple user/password combination how do I tell MineMeld to authenticate before extracting the info?

https://test.minemeld.com/json ->

{"message": "Internal server error"}

I don't want to open a support ticket but if anyone is monitoring this thread and they could investigate the test.minemeld.com instance

Hi @Michael_D ,

Thanks for letting us know the example was not working anymore. Yahoo discontinued its weather API. I just switched the example to another provider.

Took me a while to get this figured out, but @lachlanjholmes on the PA community Slack group had the answer, and I want to credit him.

I'm posting it here to help others who may have a similar issue.

Parsing a JSON feed that contains an array of ip addresses, like the datadog feeds:

{

"version": 40,

"modified": "2021-04-02-17-00-00",

"agents": {

"prefixes_ipv4": [

"3.228.26.0/25",

"3.228.26.128/25",

"3.228.27.0/25",

"3.233.144.0/20"

],

"prefixes_ipv6": [

"2600:1f18:24e6:b900::/56",

"2600:1f18:63f7:b900::/56"

]

}

}

you need to use the extractor:

extractor: agents.prefixes_ipv4[].{ip:@}

The extractor gets the prefixes_ipv4[] array, then the {ip:@} part formats it into an array of objects like the following:

[

{

"ip": "3.228.26.0/25"

},

{

"ip": "3.228.26.128/25"

},

{

"ip": "3.228.27.0/25"

},

{

"ip": "3.233.144.0/20"

}

]

Then it's a simple matter of using

indicator: ip

To extract each ip to the list.
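For readers who prefer to see the transformation spelled out, here is a plain-Python equivalent of what that extractor and indicator setting do to an abbreviated copy of the feed. It is a sketch that mimics the result of the JMESPath expression with a list comprehension; it does not call a JMESPath library.

```python
import json

# Abbreviated copy of the feed quoted above.
FEED = """
{"version": 40,
 "modified": "2021-04-02-17-00-00",
 "agents": {
   "prefixes_ipv4": ["3.228.26.0/25", "3.228.26.128/25"],
   "prefixes_ipv6": ["2600:1f18:24e6:b900::/56"]
 }}
"""

document = json.loads(FEED)

# Plain-Python equivalent of the JMESPath expression
#   agents.prefixes_ipv4[].{ip:@}
# i.e. wrap every string of the array in an object with a single "ip" key.
objects = [{"ip": prefix} for prefix in document["agents"]["prefixes_ipv4"]]

# "indicator: ip" then picks the "ip" key of every object.
indicators = [obj["ip"] for obj in objects]
print(indicators)
```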
