Dataplane issue

11-19-2019 09:54 AM

Hello

I have 2 Palo Alto firewalls in HA Active/Passive mode. Yesterday the active unit went down and I lost all the LACP links. I started troubleshooting to find the cause and found the logs below.

Could you tell me whether this is a bug or an interface issue, please?

18/11/2019 16:09:23 ha ha2-link-change 0 general critical All HA2 links down

18/11/2019 16:09:23 ha session-synch 0 general high HA Group 1: Ignoring session synchronization due to HA2-unavailable

18/11/2019 16:09:23 ha ha2-link-change 0 general high HA2-Backup link down

18/11/2019 16:09:23 ha ha2-link-change 0 general critical HA2 link down

18/11/2019 16:09:23 general general 0 general critical Chassis Master Alarm: HA-event

18/11/2019 16:09:23 ha state-change 0 general critical HA Group 1: Moved from state Active to state Non-Functional

18/11/2019 16:09:23 ha dataplane-down 0 general critical HA Group 1: Dataplane is down: path monitor failure

18/11/2019 16:09:23 general general 0 general high 9: dp0-path_monitor HB failures seen, triggering HA DP down

18/11/2019 16:08:42 general general 0 general critical pktlog_forwarding: Exited 4 times, must be manually recovered.

18/11/2019 16:06:39 general general 0 general high all_pktproc_4: exiting because missed too many heartbeats
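The system log above is listed newest first, which makes the order of events hard to follow. A minimal sketch that puts the entries in chronological order is shown here. The field layout in the regular expression is an assumption inferred from the pasted lines, and `parse_system_log` is a hypothetical helper, not a PAN-OS tool. It also assumes that entries sharing the same second were exported newest first.

```python
import re
from datetime import datetime

# Entries copied from the post, newest first as exported.
LOG = """\
18/11/2019 16:09:23 ha ha2-link-change 0 general critical All HA2 links down
18/11/2019 16:09:23 ha session-synch 0 general high HA Group 1: Ignoring session synchronization due to HA2-unavailable
18/11/2019 16:09:23 ha ha2-link-change 0 general high HA2-Backup link down
18/11/2019 16:09:23 ha ha2-link-change 0 general critical HA2 link down
18/11/2019 16:09:23 general general 0 general critical Chassis Master Alarm: HA-event
18/11/2019 16:09:23 ha state-change 0 general critical HA Group 1: Moved from state Active to state Non-Functional
18/11/2019 16:09:23 ha dataplane-down 0 general critical HA Group 1: Dataplane is down: path monitor failure
18/11/2019 16:09:23 general general 0 general high 9: dp0-path_monitor HB failures seen, triggering HA DP down
18/11/2019 16:08:42 general general 0 general critical pktlog_forwarding: Exited 4 times, must be manually recovered.
18/11/2019 16:06:39 general general 0 general high all_pktproc_4: exiting because missed too many heartbeats
"""

# Assumed layout: date time type event-id number module severity description
LINE_RE = re.compile(
    r"^(\d{2}/\d{2}/\d{4} \d{2}:\d{2}:\d{2}) (\S+) (\S+) \d+ \S+ (\S+) (.*)$"
)


def parse_system_log(text):
    """Return (time, type, event_id, severity, description) tuples, oldest first."""
    events = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip anything that does not fit the assumed layout
        stamp, log_type, event_id, severity, description = match.groups()
        when = datetime.strptime(stamp, "%d/%m/%Y %H:%M:%S")
        events.append((when, log_type, event_id, severity, description))
    # Reverse the newest-first export, then stable-sort by timestamp so that
    # entries sharing a second keep their reversed order.
    events.reverse()
    events.sort(key=lambda event: event[0])
    return events


events = parse_system_log(LOG)
for when, log_type, event_id, severity, description in events:
    print(when.strftime("%H:%M:%S"), severity.ljust(8), description)
```

Read oldest first, the `all_pktproc_4` heartbeat message at 16:06:39 comes before the dataplane-down and HA2 link-down entries at 16:09:23.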

Also, the logs from the firewall:

2019-11-18 16:06:40.883 +0100 Dataplane HA state transition: from 5 to 5

2019-11-18 16:06:55.079 +0100 sysd notificatioon for object sw.mgmt.runtime.ncommits

2019-11-18 16:06:55.079 +0100 Peer HA3 MAC is 00:00:00:00:00:00

2019-11-18 16:06:55.079 +0100 Peer HA2 MAC is 00:00:00:00:00:00

2019-11-18 16:06:55.079 +0100 Peer HA2 MAC is e8:98:6d:67:ec:4b

2019-11-18 16:06:55.081 +0100 Dataplane HA state transition: from 5 to 5

2019-11-18 16:07:17.862 +0100 sending periodic gratuitous arp or nd/mld messages for all interfaces

2019-11-18 16:07:17.862 +0100 Send gratuitous ARP

2019-11-18 16:08:17.863 +0100 sending periodic gratuitous arp or nd/mld messages for all interfaces

2019-11-18 16:08:17.866 +0100 Send gratuitous ARP

2019-11-18 16:09:17.872 +0100 sending periodic gratuitous arp or nd/mld messages for all interfaces

2019-11-18 16:09:17.873 +0100 Send gratuitous ARP

2019-11-18 16:09:23.490 +0100 sysd notificatioon for object ha.app.local.lib-states

2019-11-18 16:09:23.505 +0100 Peer HA3 MAC is 00:00:00:00:00:00

2019-11-18 16:09:23.505 +0100 Peer HA2 MAC is 00:00:00:00:00:00

2019-11-18 16:09:23.505 +0100 Peer HA2 MAC is e8:98:6d:67:ec:4b

2019-11-18 16:09:23.506 +0100 Dataplane HA state transition: from 5 to 2

2019-11-18 16:09:23.506 +0100 Set dataplane interface link properties

2019-11-18 16:09:23.506 +0100 Device in inactive HA state, shut down all ports

2019-11-18 16:09:23.489 +0100 debug: ha_slot_sysd_dp_down_notify_cb(src/ha_slot.c:1006): Got initial dataplane down (slot 1; reason path monitor failure)

2019-11-18 16:09:23.489 +0100 The dataplane is going down

2019-11-18 16:09:23.489 +0100 debug: ha_rts_dp_ready_update(src/ha_rts.c:1119): RTS slot 1 set to NOT ready

2019-11-18 16:09:23.489 +0100 debug: ha_rts_dp_ready(src/ha_rts.c:791): Update dp ready bitmask for slots ; changed slots 1 for local device

2019-11-18 16:09:23.490 +0100 debug: ha_peer_send_hello(src/ha_peer.c:5066): Group 1 (HA1-MAIN): Sending hello message

Hello Msg

---------

flags : 0x0 ()

state : Active (5)

priority : 100

cookie : 9557

num tlvs : 2

Printing out 2 tlvs

TLV[1]: type 67 (DP_RTS_READY); len 4; value:

00000000

TLV[2]: type 11 (SYSD_PEER_DOWN); len 4; value:

00000000

2019-11-18 16:09:23.490 +0100 Warning: ha_event_log(src/ha_event.c:47): HA Group 1: Dataplane is down: path monitor failure

2019-11-18 16:09:23.490 +0100 Going to non-functional for reason Dataplane down: path monitor failure

2019-11-18 16:09:23.490 +0100 debug: ha_state_transition(src/ha_state.c:1420): Group 1: transition to state Non-Functional

2019-11-18 16:09:23.490 +0100 debug: ha_state_start_monitor_holdup(src/ha_state.c:2642): Skipping monitor holdup for group 1

2019-11-18 16:09:23.490 +0100 debug: ha_state_monitor_holdup_callback(src/ha_state.c:2740): Going to Non-Functional state state

2019-11-18 16:09:23.490 +0100 debug: ha_state_move(src/ha_state.c:1516): Group 1: moving from state Active to Non-Functional

2019-11-18 16:09:23.490 +0100 debug: sysd_queue_wr_event_add(sysd_queue.c:915): QUEUE: queue write event already added

2019-11-18 16:09:23.490 +0100 Warning: ha_event_log(src/ha_event.c:47): HA Group 1: Moved from state Active to state Non-Functional

2019-11-18 16:09:23.490 +0100 debug: ha_sysd_dev_state_update(src/ha_sysd.c:1431): Set dev state to Non-Functional

2019-11-18 16:09:23.490 +0100 debug: ha_state_move_action(src/ha_state.c:1331): No state transition script available on current platform

2019-11-18 16:09:23.490 +0100 debug: ha_sysd_dev_alarm_update(src/ha_sysd.c:1397): Set dev alarm to on

2019-11-18 16:09:23.490 +0100 debug: ha_state_move_degraded(src/ha_state.c:1836): Group 1: Non-functional loop count updated to 1

2019-11-18 16:09:23.490 +0100 debug: ha_state_check_nonfunc_hold(src/ha_state.c:2767): No non-func hold based on product/mode/dp/sys state

2019-11-18 16:09:23.490 +0100 debug: ha_peer_send_hello(src/ha_peer.c:5066): Group 1 (HA1-MAIN): Sending hello message
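Most of the lines in the log above are repeated hellos and "from 5 to 5" entries where nothing changes. As a rough sketch, the snippet below keeps only the real state changes. The two regular expressions are assumptions based on the line formats in this post, and `real_transitions` is a hypothetical helper. The numeric dataplane states are left as numbers, since the only mapping the log itself shows is `Active (5)` in the hello message.

```python
import re

# Three lines taken from the log in the post.
SAMPLE = """\
2019-11-18 16:06:40.883 +0100 Dataplane HA state transition: from 5 to 5
2019-11-18 16:09:23.506 +0100 Dataplane HA state transition: from 5 to 2
2019-11-18 16:09:23.490 +0100 debug: ha_state_move(src/ha_state.c:1516): Group 1: moving from state Active to Non-Functional
"""

# Assumed formats, inferred from the pasted lines only.
DP_RE = re.compile(
    r"^(\S+ \S+) \S+ Dataplane HA state transition: from (\d+) to (\d+)$"
)
MOVE_RE = re.compile(
    r"^(\S+ \S+) \S+ debug: ha_state_move\(.*\): "
    r"Group (\d+): moving from state (\S+) to (\S+)$"
)


def real_transitions(text):
    """Return (timestamp, scope, old_state, new_state) for actual changes."""
    found = []
    for line in text.splitlines():
        match = DP_RE.match(line)
        if match:
            stamp, old, new = match.groups()
            if old != new:  # drop "from 5 to 5" style no-ops
                found.append((stamp, "dataplane", old, new))
            continue
        match = MOVE_RE.match(line)
        if match:
            stamp, group, old, new = match.groups()
            found.append((stamp, "group " + group, old, new))
    # Timestamps share one format, so string order is time order.
    return sorted(found)


transitions = real_transitions(SAMPLE)
for stamp, scope, old, new in transitions:
    print(stamp, scope, old, "->", new)
```

On the sample, this leaves the HA group move from Active to Non-Functional at 16:09:23.490 and the dataplane transition from 5 to 2 at 16:09:23.506.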

It would be very helpful to have a good answer from you.

Thank you

Matheus

Accepted Solutions


11-19-2019 10:44 AM

Hello there.

I am sure this will take several messages back and forth, but this is what I understand from your logs:

HA2 link down (there is no more connectivity between your HA2 links, which carry session synchronization).

HA Group 1: Dataplane is down: path monitor failure (you may have configured the FW's virtual router to send continuous ICMP pings to sites upstream of the FW, and those upstream IPs were no longer responsive. Because they stopped responding, the FW believed that your monitored path no longer existed and wanted to fail over to your backup FW).

Group 1: transition to state Non-Functional (this means there is an error in your configuration, and I am guessing it is on the HA configuration side).

To me, the FW was monitoring its links, saw that both links and the monitored path were no longer available (network is down), and so went into an error state (Non-Functional), all of which appears to be completely normal.

The questions we have are: which interfaces are you monitoring? Which paths are you monitoring?

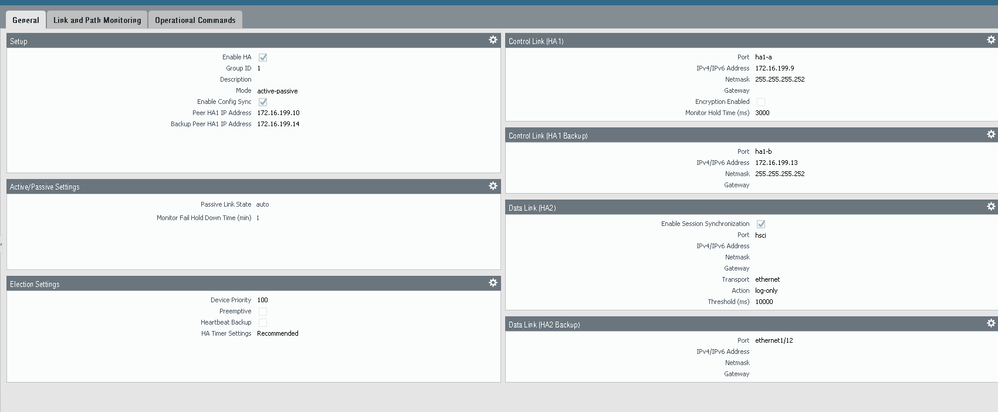

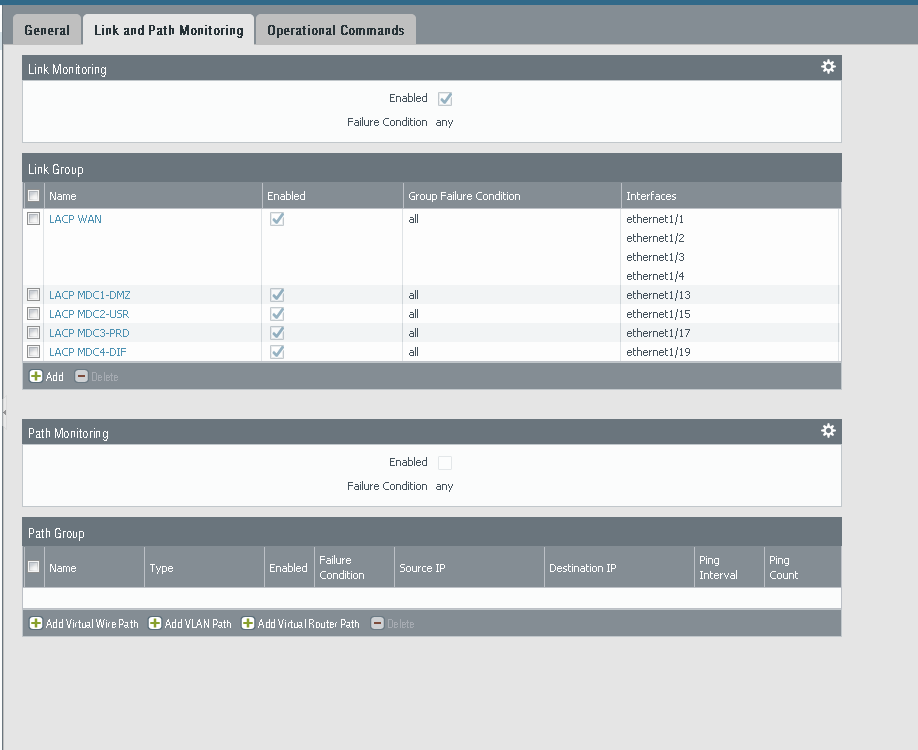

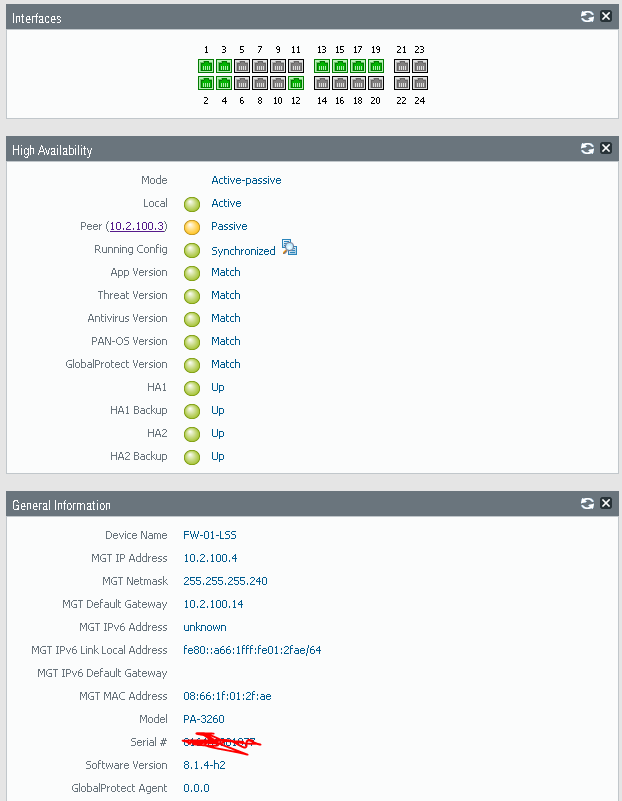

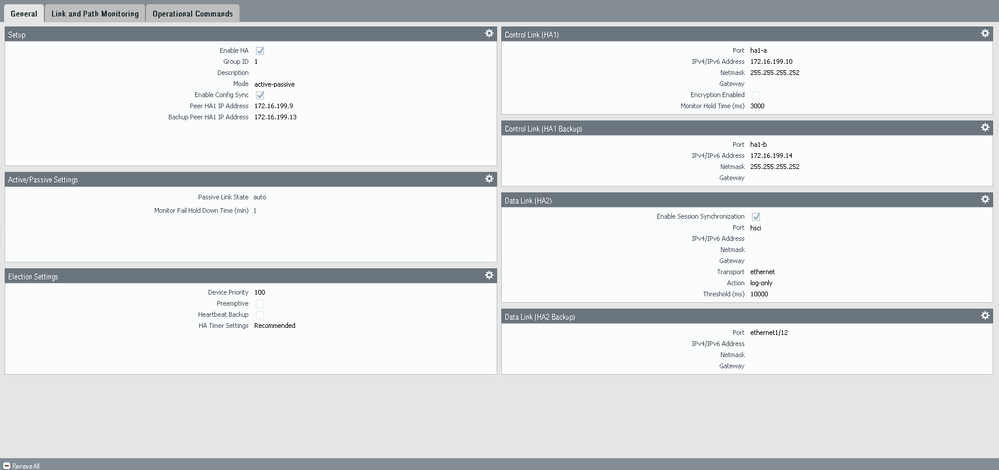

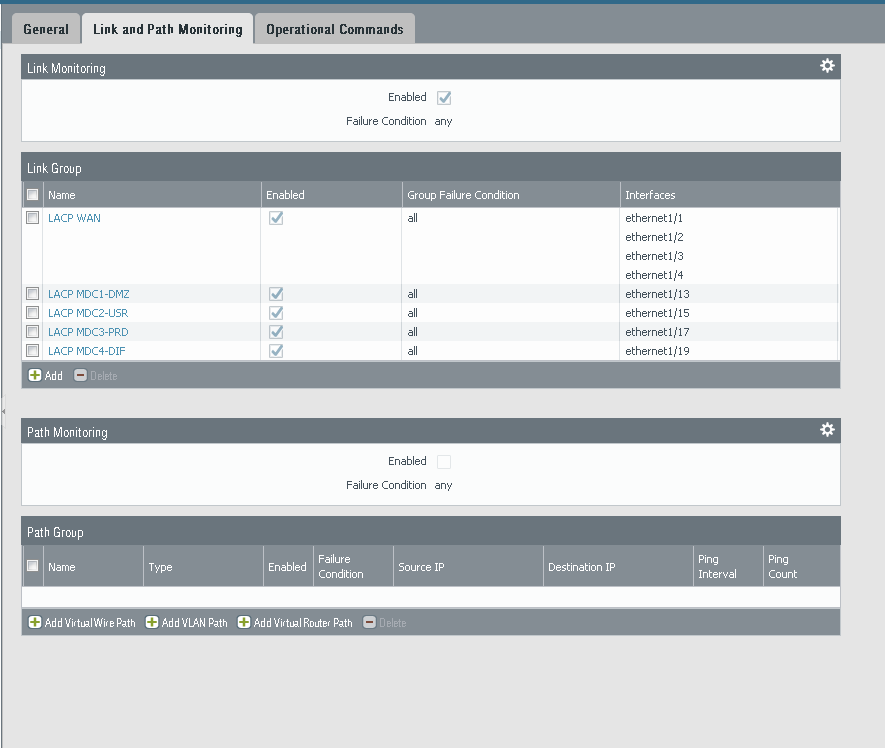

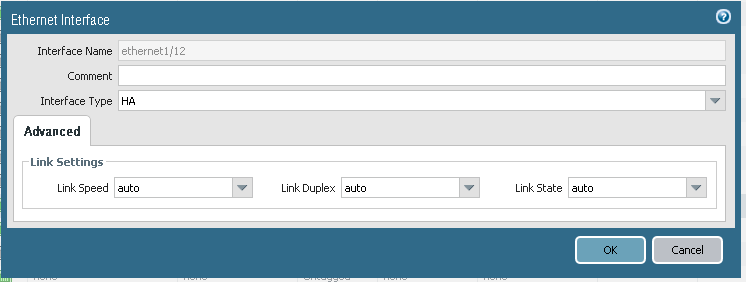

We would need to see screen captures of your HA configuration (nothing in that part of the config discloses sensitive or user information), so please upload screen captures of your HA config and your Link/Path monitoring.

What did you do to resolve your issue? Knowing that will help us as well.


11-19-2019 07:09 PM

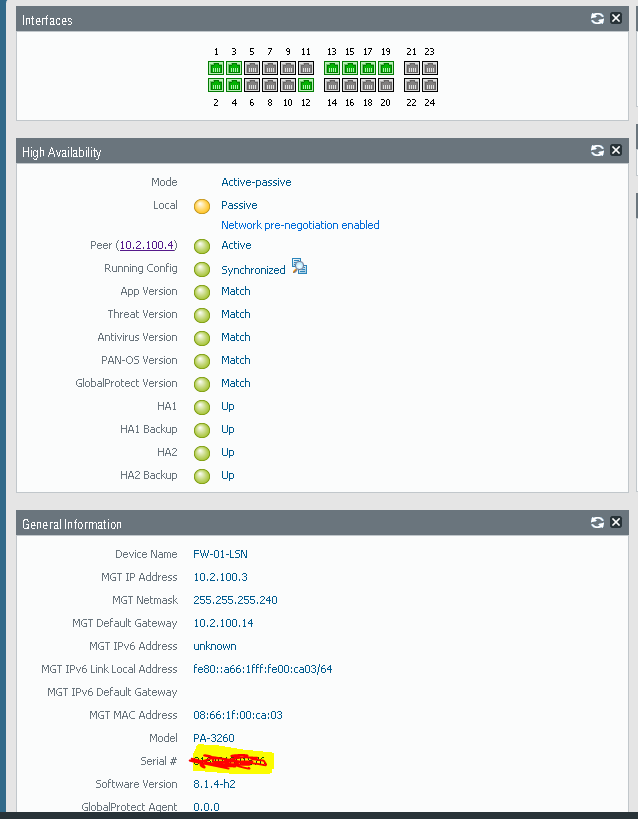

You need to start planning an upgrade on your system. You are currently running 8.1.4-h2, and it sounds like you could have run into PAN-106914 on your HA2 interfaces, which was addressed in 8.1.9. I would ignore the path-monitoring failures as a side effect of the failover; you don't have path monitoring enabled on either unit according to your screenshots, and a link monitoring event would report as such.

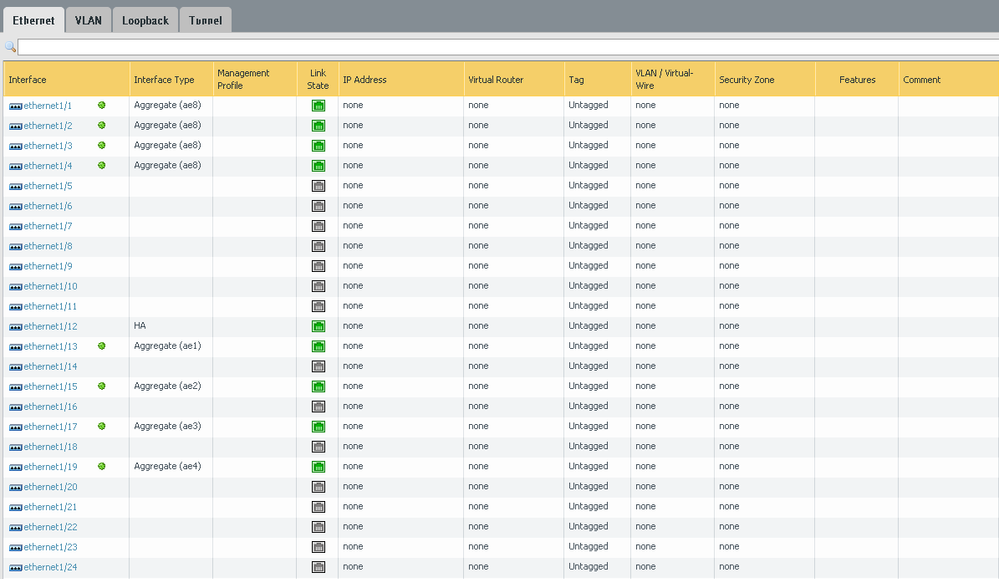

One thing that immediately seems odd in your configuration is the use of LACP and aggregate links when you are relying on a sole link. It won't cause any issues, but it's completely unnecessary from the look of things, outside of your ae8 WAN links.
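To check whether a running release already includes a fix, version strings such as 8.1.4-h2 and 8.1.9 can be compared as tuples. This is only a sketch: `parse_panos_version` and `has_fix` are hypothetical helper names, the `major.minor.maintenance[-hN]` pattern is an assumption based on the two versions mentioned in this reply, and the comparison is only meaningful within the same release train (8.1.x here).

```python
import re

# Assumed pattern: major.minor.maintenance with an optional -hN hotfix suffix.
VERSION_RE = re.compile(r"(\d+)\.(\d+)\.(\d+)(?:-h(\d+))?")


def parse_panos_version(text):
    """Turn '8.1.4-h2' into (8, 1, 4, 2); a missing hotfix counts as 0."""
    match = VERSION_RE.fullmatch(text.strip())
    if match is None:
        raise ValueError("unrecognized version string: " + repr(text))
    major, minor, maintenance, hotfix = match.groups()
    return (int(major), int(minor), int(maintenance), int(hotfix or 0))


def has_fix(running, fixed_in):
    """True if the running version is at or above the release with the fix."""
    return parse_panos_version(running) >= parse_panos_version(fixed_in)


# Versions quoted in the reply above.
print(has_fix("8.1.4-h2", "8.1.9"))
```

For the versions in this thread the check returns `False`, which matches the advice to plan an upgrade.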


11-19-2019 01:23 PM

Hi Steve

Thanks for the quick answer :)

I'm new to Palo Alto, sorry; I come from the Cisco world. I'm also not too familiar with my setup yet, as I started not long ago, so I'm still discovering things.

HA Group 1: Dataplane is down: path monitor failure (you have or maybe did not configure the FW's virtual router to do continuous icmp pings to sites upstream to the FW, and those upstream IPs were no longer responsive. Because no longer responsive, then the FW felt/believed that your monitored path was no longer existent, and wanted to fail over to your backup FW.

There are no ICMP pings from HA to HA.

To me, the FW was monitoring its links and saw that both links were no longer available and the path monitoring (network is down), so it went into error (non functional), all of which appears to be completely normal.

Monitoring which links? The LACP links that I have to the network core, or HA?

Thank you very much

Matheus


11-27-2019 06:15 AM

Hi BPRY

Thanks for your answer.

I noticed this config is very strange and bad at the same time, hehe. We will plan a PAN-OS update soon.

Thanks

Matheus


01-30-2023 12:33 AM

Perhaps the problem is a bug; you should update your system.
