
NFS Performance - really poor


04-16-2014 09:27 PM

Hello everyone,

To start, this is my first post ever, and I'll try to be as complete as I can. Yes, we are working with ETAC, who have been very helpful. However, the solution has been elusive, and I hope someone else in the community has experienced something similar. Without the PAs in the mix, NFS performance is 750-800GB/hr. There is no indication of CPU or interface overrun issues on the PAs.

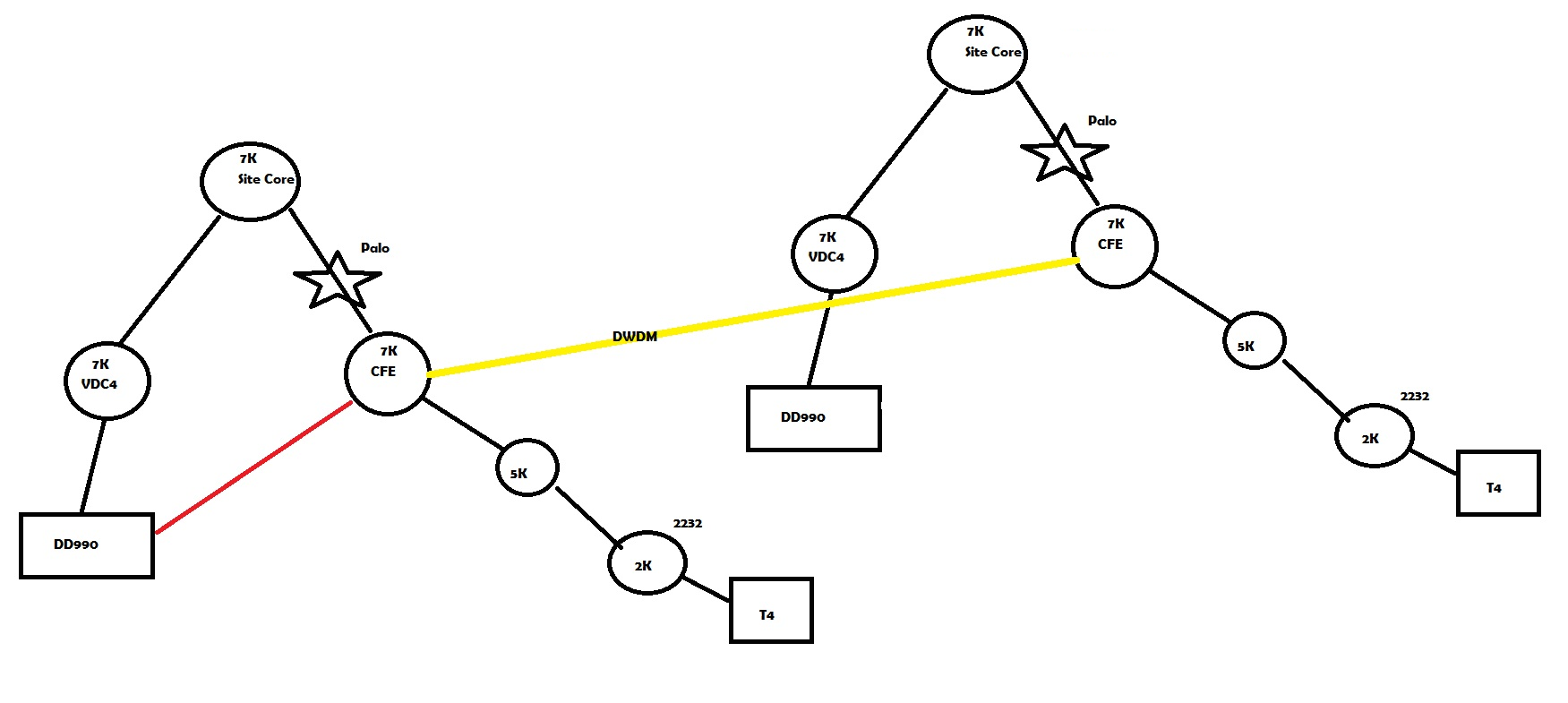

Background: We are experiencing a dramatic, severe performance drop-off when using NFS to push large volumes of data through a Palo Alto. The transfer rate starts at 1Gb/s+ (about 600GB/hr) and then suddenly drops to 20-25GB/hr. There are two cases where this does not occur: first, when the client is 1Gb-connected; second, when the target server is located at the hotsite. In both cases the traffic traverses an identically configured pair of 5060s running 5.011x code. The main difference between the two sites is that in the second case the traffic traverses a 2.5Gb DWDM link, yet the NFS transfer rate is still 400+GB/hr.
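Because the rates in this thread are quoted in GB/hr while the links are rated in Gb/s, a quick conversion helps compare them. The sketch below is a hypothetical helper, not anything from PAN-OS or the original post, and it assumes decimal (SI) units, i.e. 1 GB = 10^9 bytes.

```python
# Hypothetical helper: convert the GB/hr figures quoted in this thread into
# MB/s and Gb/s. Assumes decimal (SI) units: 1 GB = 10**9 bytes.

SECONDS_PER_HOUR = 3600


def gb_per_hr_to_mb_per_s(gb_per_hr: float) -> float:
    """Gigabytes per hour -> megabytes per second."""
    return gb_per_hr * 1000 / SECONDS_PER_HOUR


def gb_per_hr_to_gbit_per_s(gb_per_hr: float) -> float:
    """Gigabytes per hour -> gigabits per second (8 bits per byte)."""
    return gb_per_hr * 8 / SECONDS_PER_HOUR


if __name__ == "__main__":
    # Rates reported in the thread, in GB/hr.
    rates = {
        "start of transfer": 600,
        "after drop-off (high end)": 25,
        "hotsite over 2.5Gb DWDM": 400,
        "without the PAs (high end)": 800,
    }
    for label, rate in rates.items():
        print(f"{label:28s} {rate:5d} GB/hr = "
              f"{gb_per_hr_to_mb_per_s(rate):7.1f} MB/s = "
              f"{gb_per_hr_to_gbit_per_s(rate):5.2f} Gb/s")
```

On these assumptions, 600GB/hr works out to roughly 167MB/s (about 1.33Gb/s), while 20-25GB/hr is under 0.06Gb/s, a drop of more than an order of magnitude.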

Topology: (vWire interface on 10Gb SFP+, multimode fiber)

A pair of Palo Alto 5060s is configured as Active/Active with HA3 packet forwarding disabled (i.e., no load balancing); each star in the drawing is a cluster. For the 10Gb-connected hosts and the PAs, the switch infrastructure is Nexus 7000, 5000, and 2200 series switches. The 1Gb-connected hosts, which have no problems, are connected to C7000, C6500, and 4948 switches. The policy is currently permit-any.

To date we have tried several approaches to resolving the issue:

- Isolated network communications to a single PA, which removed A/A from the mix. This made no difference in performance.

- Set asymmetric bypass to enabled.

- Added a specific policy rule for the client/server pair with threat protection removed.

- Our network engineers report no errors incrementing on the switches.

- One other point of confusion: when running the same test at the hotsite (right side of the drawing), performance is ~450GB/hr.

We are considering:

- Bypassing the PAs while leaving all other network components in place.

- On the left side of the drawing, moving the T4 link off the 2200 series FEX and connecting it directly to the C7000.

Thank you in advance.

04-17-2014 08:02 AM

Maybe try configuring an App Override for NFS? In testing I have done with various PA platforms in our lab (PA-500, PA-2000s, PA-5000s), I have seen performance improve with App Overrides in place. Just something to try.

04-26-2014 06:38 AM

@ericgearhart: we have considered App Override; it would be a workaround at best, not a fix.

04-26-2014 06:51 AM

Update: A Palo Alto fly/fix engineer spent time with us this past week and helped uncover some interesting information. First, our *nix engineers had tuned the TCP MSS to 1M on the T4 systems, which could be why the PAs are dealing with a significant amount of packet reassembly. Second, performance differs depending on whether the client is pushing or pulling a file via NFS. When uploading a file to the DD990, performance is roughly 170GB/hr; when downloading a file, it is roughly 300GB/hr. Interestingly, when downloading a file there is no packet fragmentation at all.

Both results are focusing our attention on client-side tuning and its impact. We are also reviewing the network topology and configuration to see whether either is contributing to the problem.
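To get a feel for how much reassembly work oversized payloads can create, the sketch below counts the IPv4 fragments needed for a given payload and MTU. It is a hypothetical illustration, not the PA's or the T4's actual behavior: it assumes IPv4 with a 20-byte header and no options, and relies only on two RFC 791 rules, that every fragment except the last carries a multiple of 8 payload bytes and that a single datagram is capped at 65,535 bytes by its 16-bit Total Length field. The payload sizes and MTUs used are illustrative assumptions.

```python
import math

IPV4_HEADER = 20             # bytes, assuming no IP options
IPV4_MAX_TOTAL = 65535       # 16-bit Total Length field (RFC 791)


def ipv4_fragment_count(payload_bytes: int, mtu: int = 1500) -> int:
    """Number of IPv4 fragments needed to carry one datagram's payload.

    Every fragment except the last must carry a multiple of 8 payload
    bytes, so the usable payload per fragment is rounded down to 8.
    """
    if payload_bytes > IPV4_MAX_TOTAL - IPV4_HEADER:
        raise ValueError("payload does not fit in a single IPv4 datagram")
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return max(1, math.ceil(payload_bytes / per_fragment))


if __name__ == "__main__":
    # Illustrative payload sizes (assumptions, not values from the thread).
    for payload in (1480, 8192, 32768, 65000):
        for mtu in (1500, 9000):
            print(f"payload={payload:6d} mtu={mtu:5d} -> "
                  f"{ipv4_fragment_count(payload, mtu)} fragment(s)")
```

Under these assumptions, a 32KB payload on a standard 1500-byte MTU path becomes 23 fragments that a downstream device must hold and reassemble before it can inspect the datagram, versus 4 on a 9000-byte jumbo path.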

04-29-2014 12:58 PM

coldstone1, right, I completely agree; I was more or less suggesting it as a data point. If App Override suddenly has a huge positive impact on performance, then at least the problem is narrowed down a bit.
