receive incoming errors / 'rcv_fifo_overrun'

12-05-2017 06:05 AM

Hello everyone.

I have a question for the community about an interesting situation:

- PAN-PA-3050 / PAN OS 7.1.10

- Drop Counter increases on two aggregated interfaces (ae3 - interfaces 1/3 & 1/4)

- connected via Cisco vPC tech with a Nexus FEX switch

- new patch cable

- only req. VLANs are on the trunk / CDP is deactivated

- no obvious layer 2 errors visible during packet capture

Counter details:

REDACTED (active)> show interface ethernet1/3

…

Hardware interface counters read from CPU:

--------------------------------------------------------------------------------

bytes received 2198604878

bytes transmitted 67193368

packets received 16782405

packets transmitted 541882

receive incoming errors 1817713

receive discarded 0

receive errors 0

packets dropped 0

--------------------------------------------------------------------------------

REDACTED (active)> show interface ethernet1/4

…

Hardware interface counters read from CPU:

--------------------------------------------------------------------------------

bytes received 2198281424

bytes transmitted 67192748

packets received 16779807

packets transmitted 541877

receive incoming errors 1682781

receive discarded 1

receive errors 0

packets dropped 0

REDACTED (active)> show system state filter sys.s1.p3.detail

sys.s1.p3.detail: { 'pkts1024tomax_octets': 0x73619d2bd, 'pkts128to255_octets': 0x7d82ed54, 'pkts256to511_octets': 0x2f804d10, 'pkts512to1023_octets': 0x4dd41a61, 'pkts64_octets': 0x21a793bf, 'pkts65to127_octets': 0x2a3d76b7d, 'rcv_fifo_overrun': 0x1bbc71, }

REDACTED (active)> show system state filter sys.s1.p4.detail

sys.s1.p4.detail: { 'bad_crc': 0x1, 'pkts1024tomax_octets': 0x769a235ec, 'pkts128to255_octets': 0xe38e6000, 'pkts256to511_octets': 0x3106dc4a, 'pkts512to1023_octets': 0x4d595c47, 'pkts64_octets': 0x19c1a2b5, 'pkts65to127_octets': 0x284dd797b, 'rcv_fifo_overrun': 0x19ad5d, }
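The system state values are hexadecimal, so it can help to convert them before comparing them with the decimal interface counters. The following is a minimal Python sketch, not a Palo Alto tool: the helper name `parse_state_counters` is hypothetical, and the two input strings are shortened copies of the output lines above.

```python
import re

def parse_state_counters(line: str) -> dict[str, int]:
    """Extract 'name': 0xHEX pairs from a 'show system state' detail line."""
    pairs = re.findall(r"'(\w+)':\s*(0x[0-9a-fA-F]+)", line)
    return {name: int(value, 16) for name, value in pairs}

# Shortened copies of the two detail lines from the post (assumption: only
# the fields needed for the comparison are kept).
p3 = "sys.s1.p3.detail: { 'pkts64_octets': 0x21a793bf, 'rcv_fifo_overrun': 0x1bbc71, }"
p4 = "sys.s1.p4.detail: { 'bad_crc': 0x1, 'rcv_fifo_overrun': 0x19ad5d, }"

for label, line in (("ethernet1/3", p3), ("ethernet1/4", p4)):
    counters = parse_state_counters(line)
    print(label, counters["rcv_fifo_overrun"])

# Prints:
# ethernet1/3 1817713
# ethernet1/4 1682781
```

Both decimal values equal the "receive incoming errors" figures shown for ethernet1/3 and ethernet1/4, which suggests that in this capture the incoming errors are entirely rcv_fifo_overrun events.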

REDACTED (active)> show counter global filter delta yes severity drop

Global counters:

Elapsed time since last sampling: 12.214 seconds

name value rate severity category aspect description

--------------------------------------------------------------------------------

flow_rcv_dot1q_tag_err 55 4 drop flow parse Packets dropped: 802.1q tag not configured

flow_no_interface 55 4 drop flow parse Packets dropped: invalid interface

flow_ipv6_disabled 14 1 drop flow parse Packets dropped: IPv6 disabled on interface

flow_policy_deny 1684 137 drop flow session Session setup: denied by policy

flow_tcp_non_syn_drop 20 1 drop flow session Packets dropped: non-SYN TCP without session match

flow_fwd_l3_bcast_drop 1 0 drop flow forward Packets dropped: unhandled IP broadcast

flow_fwd_l3_mcast_drop 206 16 drop flow forward Packets dropped: no route for IP multicast

flow_fwd_notopology 3 0 drop flow forward Packets dropped: no forwarding configured on interface

--------------------------------------------------------------------------------

Total counters shown: 8

We are not seeing any issues in the network, but this is pretty confusing. Is this a bug or normal behaviour?

I might be mistaken, but does 'rcv_fifo_overrun' mean that the firewall cannot process the incoming traffic? That seems very unlikely, since we do not experience connection issues. Could this be flood protection? Or am I missing something?

Any input would be appreciated.

12-12-2018 08:57 AM

Hi vmilan,

Can I ask which device model you are having the problem with?

12-12-2018 10:47 AM - edited 12-12-2018 10:49 AM

I have two different cases with different customers.

One is on a PA-850 and the other is on a PA-3060.

In Salesforce I found a case where enabling flow control on both sides of the link resolved this issue. I tried that in one of my cases, but it did not work.

12-12-2018 10:49 AM

Overruns occur when the interface's buffer is full but frames are still arriving; the port has exceeded its buffer. This is usually due to bursty traffic more than anything else.
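To illustrate why bursts matter more than average load, here is a toy Python simulation of a fixed-size receive FIFO. It is only a sketch: the capacity, drain rate, and traffic patterns are made-up numbers, not the real buffer sizes of any PA platform.

```python
def simulate_fifo(arrivals, drain_per_tick, capacity):
    """Return how many frames are dropped because the FIFO was full."""
    queued = 0
    dropped = 0
    for incoming in arrivals:
        accepted = min(incoming, capacity - queued)  # only what fits
        dropped += incoming - accepted               # the rest overruns
        queued += accepted
        queued -= min(queued, drain_per_tick)        # forward what we can
    return dropped

# Two hypothetical patterns with the same total (1000 frames in 100 ticks).
smooth = [10] * 100
bursty = ([100] + [0] * 9) * 10

print("smooth:", simulate_fifo(smooth, drain_per_tick=12, capacity=50))
print("bursty:", simulate_fifo(bursty, drain_per_tick=12, capacity=50))

# Prints:
# smooth: 0
# bursty: 500
```

The average rate is identical in both runs, and the drain rate is above that average, yet the bursty pattern still overruns the buffer on every burst.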

12-12-2018 10:52 AM

The customer doesn't want these counters to increase.

Is there any way to reduce them?

12-12-2018 10:57 AM

Depends on the platform and what port we're talking about. If you can upgrade the interface to a faster SFP module, then yes; if not, or if this is an Ethernet interface, then no. Essentially you need to figure out whether bursty traffic is expected on the interface in question; if not, look into why it's present. If bursty traffic is expected, then there isn't much you'll be able to do.

12-12-2018 11:03 AM

Hi BPry,

Yes, I believe this is what I wanted to know, and it will help to convince the customer.

And hopefully it will resolve this issue.

Thank you very much for your words @BPry @EliSz @Martin-Kowalski

If I need any further help, I will let you know.

Regards,

Milan Variya

12-12-2018 11:11 AM

Thanks for such valuable info.

There is a lot to learn from your posts.

Help the community: Like helpful comments and mark solutions.

01-08-2019 02:57 AM - edited 02-20-2020 04:05 AM

Hello,

We had a similar case on three devices: the rcv_fifo_overrun counter was increasing on the external interface directly connected to the ISP router.

The devices were PA-820s running PAN-OS 8.1.5 and 8.0.12.

At first we thought the problem might be a high packet burst that the device could not handle properly, so it simply dropped the packets. We also suspected the PAN-104116 leak, or that the device was simply too busy and dropped the packets it could not handle.

The leak was fixed in 8.1.5, so we tried the upgrade path, which did not help in the end.

Then, when we noticed that two more devices (same model, PA-820) had the same problem, we logged a case with PA TAC.

During troubleshooting, the engineer provided some more insight based on past experience:

A data receive buffer overrun is only an indication of ingress traffic bursts on the Marvell switch ports (the firewall's DP ports). rcv_fifo_overrun errors suggest that the Marvell (the PA's switch backplane) was experiencing a buffer shortage: a switch port on the Marvell fabric is receiving more frames than it can manage, which can be related to a bursty traffic pattern.

These errors are only found on the Marvell's external ports (PHY ports, RJ45), not between the internal chipsets (Marvell ---> dataplane processor). The buffer size cannot be changed or adjusted.

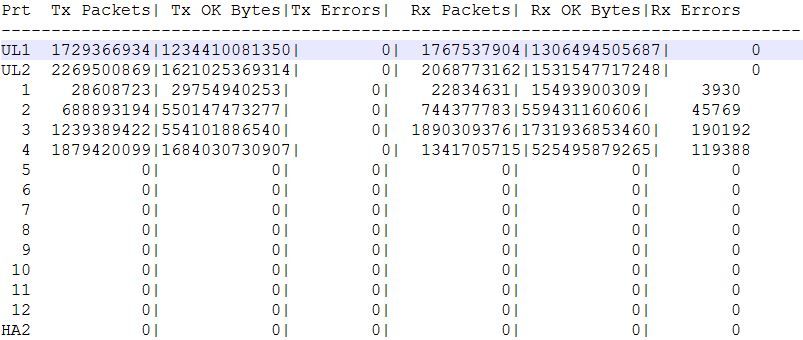

> debug dataplane internal pdt marvell stats

What we are interested in are UL1 and UL2 (the uplinks to the Octeon processor). They appear to be good; all of them indicate that the Marvell is not sending pause frames and show no receive errors caused by hardware.

Also, the traffic rate in my case, for that particular device, was above 20K pps. rcv_fifo_overrun is expected/normal during a bursty traffic pattern and is not an issue on the firewall.

It is only a problem when the traffic rate is low: if the counter increases even with a reasonable amount of traffic, then it needs investigation.
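That rule of thumb can be sketched as a small Python triage helper that compares two counter readings. Everything here is an assumption for illustration: the `Sample` type, the `triage` function, and the sample numbers are hypothetical, and the 20,000 pps default is simply the rate mentioned above, not an official threshold.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    seconds: float          # time of the reading
    packets_received: int   # "packets received" interface counter
    rcv_fifo_overrun: int   # decimal value of 'rcv_fifo_overrun'

def triage(first: Sample, second: Sample, busy_pps: float = 20_000) -> str:
    """Classify an overrun increase between two readings of one port."""
    elapsed = second.seconds - first.seconds
    if elapsed <= 0:
        raise ValueError("second sample must be later than the first")
    pps = (second.packets_received - first.packets_received) / elapsed
    new_overruns = second.rcv_fifo_overrun - first.rcv_fifo_overrun
    if new_overruns == 0:
        return "no new overruns"
    if pps >= busy_pps:
        return "expected under bursty load"
    return "investigate"

# Hypothetical readings taken 10 seconds apart.
print(triage(Sample(0, 0, 0), Sample(10, 300_000, 500)))  # 30,000 pps
print(triage(Sample(0, 0, 0), Sample(10, 50_000, 500)))   # 5,000 pps
```

An average packet rate hides short bursts, so a result of "investigate" is only a prompt to look closer, not proof of a fault.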

Also, this has no relevance to packet-descriptor depletion or a leak, as I originally thought with reference to PAN-104116, because the PD is a DP component and "rcv_fifo_overrun" is a drop stage in the Marvell, before the packet even makes it to the DP.

In my case from TAC perspective there is not much we can do.

Kind Regards,

Eli

01-08-2019 07:05 AM

Thanks for sharing this info @EliSz

12-17-2024 02:15 AM

Thank you for the detailed answer.

12-17-2024 01:12 PM

Many Thanks for posting this information.

It will help the community.

Regards

Help the community: Like helpful comments and mark solutions.
