
Expedition csv logs stuck in pending

06-26-2018 07:43 AM - edited 06-27-2018 07:55 AM

Hi everyone,

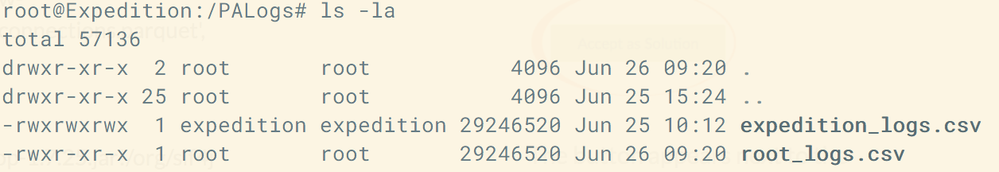

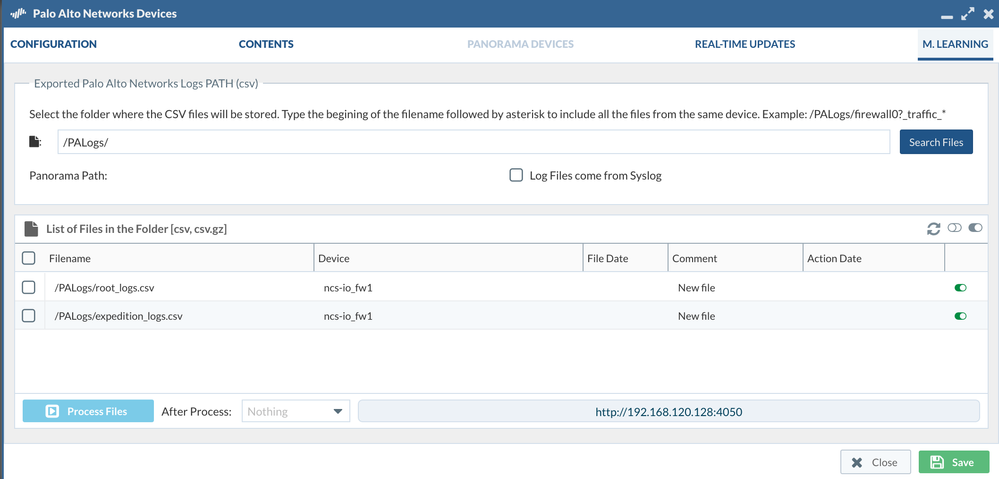

I have added firewall logs from our Palo Alto 5000 series to the Expedition VM in /PALogs. I made a copy of the original .csv owned by root and left the original owned by expedition. Both files appear in Devices > M.LEARNING. When I run Process Files, the job remains in pending and nothing happens. Any ideas what the issue may be?

05-15-2019 01:34 PM

05-15-2019 01:40 PM

Unfortunately, I've had this issue as well, and I had to revert to a snapshot. After reverting and running through a few upgrades, I have hit the issue again.

05-17-2019 12:25 AM

Could you contact us at fwmigrate@paloaltonetworks.com to do a remote session?

07-15-2019 08:12 AM

Hi,

Did anyone find a workaround for this? We have the same issue with version 1.1.28.

Thanks,

07-15-2019 08:29 AM

I've only run into this when my disk was filled with logs.
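
To rule out a full disk, you can check usage on the filesystems Expedition writes to. The following is a minimal Python sketch, assuming the relevant paths are `/PALogs` (from the original post) plus `/datastore` and `/home/expedition/ML` (both from the LogCoCo command quoted later in this thread); the 90% threshold is an arbitrary, hypothetical value.

```python
import shutil

# Paths seen in this thread; adjust them to your own layout.
PATHS = ["/datastore", "/home/expedition/ML", "/PALogs"]
THRESHOLD = 90.0  # hypothetical warning level, not an Expedition setting


def percent_used(path: str) -> float:
    """Return how full the filesystem holding `path` is, as a percentage."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


if __name__ == "__main__":
    for p in PATHS:
        try:
            pct = percent_used(p)
        except OSError as exc:  # e.g. the path does not exist on this VM
            print(f"{p}: skipped ({exc.strerror})")
            continue
        flag = "  <-- nearly full" if pct >= THRESHOLD else ""
        print(f"{p}: {pct:.1f}% used{flag}")
```

Run it with `python3` on the Expedition VM. The figure may differ slightly from `df`, which computes Use% against used plus available space and therefore excludes blocks reserved for root.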

07-15-2019 08:36 AM

Hi Sec101,

All checks are green and disk space is only 30% used. I checked the folder permissions, and both expedition and www-data can access it. So I'm a bit lost, as there isn't much information in the error logs.

Any idea?
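
Because both the expedition and www-data accounts need access, it can help to evaluate the permission bits from a specific user's point of view, including the search (x) bit on every parent directory, which is easy to overlook. This is a hypothetical Python helper (`can_read` is not an Expedition tool); it only models classic owner/group/other bits and ignores ACLs, AppArmor and similar layers.

```python
import grp
import os
import pwd
import stat
import sys


def user_ids(user: str) -> tuple[int, set[int]]:
    """Return (uid, gids) for `user`, including supplementary groups from /etc/group."""
    pw = pwd.getpwnam(user)
    gids = {pw.pw_gid}
    gids.update(g.gr_gid for g in grp.getgrall() if user in g.gr_mem)
    return pw.pw_uid, gids


def has_bit(st: os.stat_result, uid: int, gids: set[int],
            owner_bit: int, group_bit: int, other_bit: int) -> bool:
    """Apply the owner -> group -> other precedence of classic Unix permissions."""
    if uid == 0:
        return True  # simplification: root bypasses read/search checks
    if st.st_uid == uid:
        return bool(st.st_mode & owner_bit)
    if st.st_gid in gids:
        return bool(st.st_mode & group_bit)
    return bool(st.st_mode & other_bit)


def can_read(user: str, path: str) -> bool:
    """Approximate whether `user` can read `path` (ignores ACLs, AppArmor, etc.)."""
    uid, gids = user_ids(user)
    path = os.path.abspath(path)
    directory = os.path.dirname(path)
    while True:  # every ancestor directory needs search (x) permission
        if not has_bit(os.stat(directory), uid, gids,
                       stat.S_IXUSR, stat.S_IXGRP, stat.S_IXOTH):
            return False
        parent = os.path.dirname(directory)
        if parent == directory:  # reached "/"
            break
        directory = parent
    return has_bit(os.stat(path), uid, gids,
                   stat.S_IRUSR, stat.S_IRGRP, stat.S_IROTH)


if __name__ == "__main__" and len(sys.argv) >= 3:
    who = sys.argv[1]
    for target in sys.argv[2:]:
        verdict = "readable" if can_read(who, target) else "NOT readable"
        print(f"{target}: {verdict} by {who}")
```

For example, `sudo python3 check_perms.py www-data /PALogs/<file>.csv` (the script name and file path are placeholders). Also note that group membership added with `usermod` only applies to processes started afterwards, so services running as www-data may need a restart before a new group grants access.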

07-24-2019 11:59 AM

Did you manage to resolve the issue?

If a CSV process is hung, try clearing all the tasks with the "Remove All" button on the Dashboard to see whether processing starts again.

Also, please share any errors shown in /tmp/error_logCoCo, as it may contain helpful information.

Also, update Expedition to 1.1.30-h2: in 1.1.30 we changed the scheduled processing of CSV logs to provide more information when it fails (reported in /home/userSpace/panReadOrders.log).
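
When `/tmp/error_logCoCo` is long, the most useful lines are usually the Java `Caused by:` entries; the last one printed in a stack trace is the innermost cause. A minimal Python sketch to pull them out, assuming only that the file contains standard Java stack-trace text (`root_causes` is a hypothetical helper name):

```python
import os
import re
from typing import List

# Java prints each nested exception in the chain on a line starting "Caused by:".
CAUSED_BY = re.compile(r"^[ \t]*Caused by:[ \t]*(.+)$", re.MULTILINE)


def root_causes(log_text: str) -> List[str]:
    """Return every 'Caused by:' message in order; the last is the innermost cause."""
    return CAUSED_BY.findall(log_text)


if __name__ == "__main__":
    path = "/tmp/error_logCoCo"  # log file written by the LogCoCo job in this thread
    if os.path.exists(path):
        with open(path, encoding="utf-8", errors="replace") as fh:
            causes = root_causes(fh.read())
        print(causes[-1] if causes else "no 'Caused by:' lines found")
    else:
        print(f"{path} not found")
```

Against the log posted in the following reply, the last match would be `java.net.UnknownHostException: spanmig: Temporary failure in name resolution`.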

07-25-2019 05:52 AM

Hey,

Still having the issue after updating to 1.1.30-h2 and removing all the pending tasks. All I can see in error_logCoCo is the list of the CSV files:

```
(/opt/Spark/spark/bin/spark-submit --class com.paloaltonetworks.tbd.LogCollectorCompacter --master localhost --deploy-mode client --driver-memory 3146m --supervise /var/www/html/OS/spark/packages/LogCoCo-2.1.1.0-SNAPSHOT.jar MLServer='X.X.X.X', master='local[1]', debug='false', taskID='106', user='admin', dbUser='root', dbPass='*******', dbServer='X.X.X.X:3306', timeZone='Europe/Helsinki', mode='Expedition', input=0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_15_last_calendar_day.csv:2,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_19_last_calendar_day.csv:10,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_24_last_calendar_day.csv:4,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_21_last_calendar_day.csv:2,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_18_last_calendar_day.csv:4,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_22_last_calendar_day.csv:1,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_20_last_calendar_day.csv:4,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_23_last_calendar_day.csv:1,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_16_last_calendar_day.csv:4,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_14_last_calendar_day.csv:3,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_17_last_calendar_day.csv:3,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_12_last_calendar_day.csv:2,0018010******:8.1.0:/home/expedition/ML/PA-3020-LAB_traffic_2019_07_13_last_calendar_day.csv:3, output='/home/expedition/ML/connections.parquet', tempFolder='/datastore'; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_15_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_19_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_24_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_21_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_18_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_22_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_20_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_23_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_16_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_14_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_17_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_12_last_calendar_day.csv; echo /home/expedition/ML/PA-3020-LAB_traffic_2019_07_13_last_calendar_day.csv; )>> "/tmp/error_logCoCo" 2>>/tmp/error_logCoCo &
```

```
---- CREATING SPARK Session:
warehouseLocation:/datastore/spark-warehouse
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.1.1-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]
Exception in thread "main" java.lang.ExceptionInInitializerError
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:397)
    at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2320)
    at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:868)
    at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:860)
    at scala.Option.getOrElse(Option.scala:121)
    at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:860)
    at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:262)
    at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:743)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.net.UnknownHostException: spanmig: spanmig: Temporary failure in name resolution
    at java.net.InetAddress.getLocalHost(InetAddress.java:1506)
    at org.apache.spark.util.Utils$.findLocalInetAddress(Utils.scala:870)
    at org.apache.spark.util.Utils$.org$apache$spark$util$Utils$$localIpAddress$lzycompute(Utils.scala:863)
    at org.apache.spark.util.Utils$.org$apache$spark$util$Utils$$localIpAddress(Utils.scala:863)
    at org.apache.spark.util.Utils$$anonfun$localHostName$1.apply(Utils.scala:920)
    at org.apache.spark.util.Utils$$anonfun$localHostName$1.apply(Utils.scala:920)
    at scala.Option.getOrElse(Option.scala:121)
    at org.apache.spark.util.Utils$.localHostName(Utils.scala:920)
    at org.apache.spark.internal.config.package$.<init>(package.scala:189)
    at org.apache.spark.internal.config.package$.<clinit>(package.scala)
    ... 17 more
Caused by: java.net.UnknownHostException: spanmig: Temporary failure in name resolution
    at java.net.Inet6AddressImpl.lookupAllHostAddr(Native Method)
    at java.net.InetAddress$2.lookupAllHostAddr(InetAddress.java:929)
    at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1324)
    at java.net.InetAddress.getLocalHost(InetAddress.java:1501)
    ... 26 more
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_15_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_19_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_24_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_21_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_18_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_22_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_20_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_23_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_16_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_14_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_17_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_12_last_calendar_day.csv
/home/expedition/ML/PA-3020-LAB_traffic_2019_07_13_last_calendar_day.csv
```

In /home/userSpace/panReadOrders.log, I have the following entries:

```
------------------------------------------------------------
Wed Jul 24 15:13:34 EST 2019 : Starting readOrders.php
------------------------------------------------------------
------------------------------------------------------------
Wed Jul 24 15:15:41 EST 2019 : Starting readOrders.php
------------------------------------------------------------
Checking: PeriodicLogCollectorCompacter
Thu, 25 Jul 2019 01:00:50 -0400 Start Task
Thu, 25 Jul 2019 01:00:50 -0400 End Task
```

Thanks,

07-25-2019 06:04 AM

The log shows that the server's own name cannot be resolved:

```
java.net.UnknownHostException: spanmig: Temporary failure in name resolution
```

Your log shows X.X.X.X as the IP; I assume you masked it so that we do not see this information. In any case, verify that the settings in the ML section are valid, and even so, re-enter them and save to make sure the other settings are propagated.
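
The failing call in the stack trace is `java.net.InetAddress.getLocalHost()`, which has to resolve the machine's own hostname. You can test the same kind of lookup outside Spark with a short Python sketch. It assumes the system resolver (`getaddrinfo`, which consults `/etc/hosts` and DNS according to `/etc/nsswitch.conf`) behaves the same way for the JVM; that is a reasonable approximation rather than a guarantee, and `resolve` is a hypothetical helper name.

```python
import socket
from typing import List


def resolve(name: str) -> List[str]:
    """Return the sorted, de-duplicated addresses `name` resolves to, or [] on failure."""
    try:
        infos = socket.getaddrinfo(name, None)
    except socket.gaierror:
        # e.g. "[Errno -3] Temporary failure in name resolution"
        # or   "[Errno -2] Name or service not known"
        return []
    return sorted({info[4][0] for info in infos})  # sockaddr[0] is the address


if __name__ == "__main__":
    host = socket.gethostname()  # kernel hostname, normally set from /etc/hostname
    addrs = resolve(host)
    if addrs:
        print(f"{host} -> {', '.join(addrs)}")
    else:
        print(f"{host} does not resolve; InetAddress.getLocalHost() is likely to fail too")
```

If the script reports that the hostname does not resolve, fixing the hostname mapping or DNS, as the original poster did in the next reply, is likely to let the Spark job start as well.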

07-25-2019 10:28 AM

Thanks @dgildelaig!

After reconfiguring the hostname, and the nameserver in /etc/resolv.conf, I was able to resolve my issue.

Thanks for your help!
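
To confirm which DNS servers the resolver will use after editing `/etc/resolv.conf`, a small parser is enough. This is a hypothetical Python sketch that only reads `nameserver` lines; on Ubuntu systems where the file is managed by systemd-resolved or resolvconf, manual edits may be overwritten, so check how the file is generated before relying on them.

```python
from typing import List


def nameservers(resolv_conf: str) -> List[str]:
    """Return the addresses on 'nameserver' lines of resolv.conf text, in order."""
    servers: List[str] = []
    for raw in resolv_conf.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue  # blank line or comment line ('#' or ';')
        fields = line.split()
        if fields[0] == "nameserver" and len(fields) >= 2:
            servers.append(fields[1])
    return servers


if __name__ == "__main__":
    try:
        with open("/etc/resolv.conf", encoding="utf-8") as fh:
            found = nameservers(fh.read())
    except OSError as exc:
        print(f"cannot read /etc/resolv.conf: {exc}")
    else:
        print("nameservers:", ", ".join(found) if found else "none configured")
```

The glibc resolver uses at most the first three `nameserver` entries, so order matters if you list more.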

09-08-2019 10:34 PM

I spent a long time stuck on "No supported files to process" until I read this post. I had added Panorama as a device and retrieved all the managed devices. Even though the tool recognised the log format and the source showed the name of the firewall I wanted, I had to delete the Panorama entry and add the firewall itself under Devices > Imported Devices. It's a shame it doesn't just work the other way around.

09-09-2019 01:55 AM

You should still be able to process the logs from within the devices that were connected through a Panorama.

I will make a note so that we disable the button within Panorama and explain how to process the logs.

01-29-2020 05:58 AM

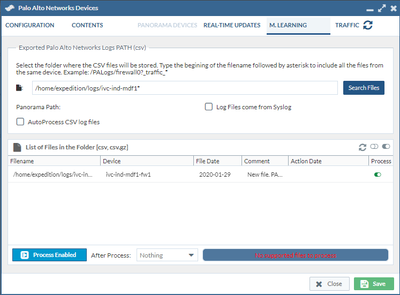

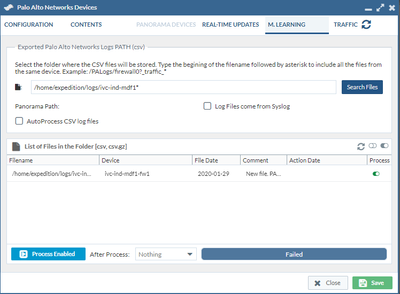

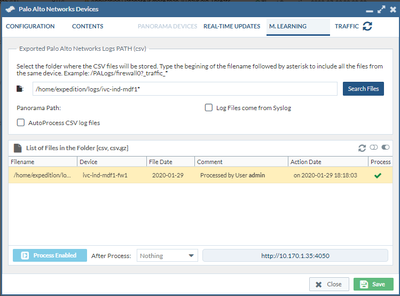

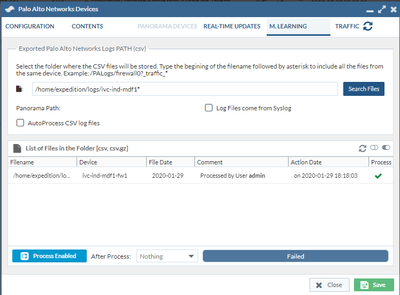

I'm having the same problem and cannot figure out why Expedition will not process the CSV files. www-data is in the expedition group and has permission to access the CSV files. Please help!

```
ubuntu@ip-10-170-1-35:/$ tail -f /tmp/error_logCoCo
    at org.apache.spark.util.Utils$.localCanonicalHostName(Utils.scala:996)
    at org.apache.spark.internal.config.package$.<init>(package.scala:302)
    at org.apache.spark.internal.config.package$.<clinit>(package.scala)
    ... 17 more
Caused by: java.net.UnknownHostException: ip-10-170-1-35: Name or service not known
    at java.net.Inet6AddressImpl.lookupAllHostAddr(Native Method)
    at java.net.InetAddress$2.lookupAllHostAddr(InetAddress.java:929)
    at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1324)
    at java.net.InetAddress.getLocalHost(InetAddress.java:1501)
    ... 26 more
```

01-29-2020 06:36 AM

I have the feeling that in your case the issue is related to name resolution for your Expedition instance.

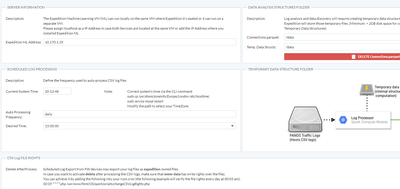

Check that /etc/hostname is valid, and also check in the Expedition web UI that the ML Settings correctly point to your Expedition IP address.
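
For the /etc/hostname part of this check, a short script can confirm that the name is a syntactically valid hostname (RFC 1123 label rules) and that /etc/hosts maps it to an address; an unmapped hostname with no DNS record is one possible cause of the `UnknownHostException` shown above. The helpers below are a hypothetical Python sketch, not part of Expedition.

```python
import re
from typing import Dict, List

# RFC 1123 label: letters, digits and hyphens, 1-63 chars, no leading/trailing hyphen.
LABEL = re.compile(r"(?!-)[A-Za-z0-9-]{1,63}(?<!-)")


def valid_hostname(name: str) -> bool:
    """Return True if `name` is a syntactically valid hostname."""
    name = name.strip()
    if not name or len(name) > 253:
        return False
    return all(LABEL.fullmatch(label) for label in name.rstrip(".").split("."))


def hosts_map(hosts_text: str) -> Dict[str, List[str]]:
    """Map each (lower-cased) name in /etc/hosts text to the addresses listed for it."""
    mapping: Dict[str, List[str]] = {}
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # '#' starts a comment
        if len(fields) < 2:
            continue
        addr, names = fields[0], fields[1:]
        for n in names:
            mapping.setdefault(n.lower(), []).append(addr)
    return mapping


if __name__ == "__main__":
    try:
        with open("/etc/hostname", encoding="utf-8") as fh:
            host = fh.read().strip()
        with open("/etc/hosts", encoding="utf-8") as fh:
            hosts = hosts_map(fh.read())
    except OSError as exc:
        print(f"cannot read file: {exc}")
    else:
        print(f"/etc/hostname: {host!r} valid={valid_hostname(host)}")
        print(f"/etc/hosts entry: {hosts.get(host.lower(), 'MISSING')}")
```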

01-29-2020 12:13 PM

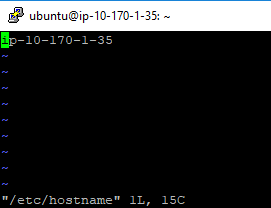

/etc/hostname shows ip-10-170-1-35

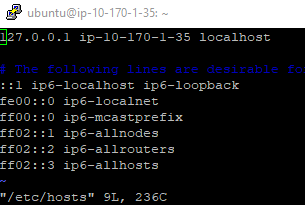

/etc/hosts: changed from "127.0.0.1 localhost" to "127.0.0.1 ip-10-170-1-35 localhost"

Now I get a weird message mentioning http://10.170.1.135:4050, and then the process fails again.

My ML settings look fine.

Any idea what else is causing this?
