
Multiple unexpected failovers - need help understanding FW behavior


08-04-2022 09:55 PM

Hi all,

We have two PA-3220 firewalls configured as an Active/Passive HA pair (no preemption), running PAN-OS 10.1.2. In the last three weeks we have had three failover incidents that we are investigating (no device reboots, though). We've opened a support case, but I'm posting here to check whether the explanation we've been given is correct, since it does not match our understanding of how things should work. I understand that getting to the bottom of the issue would require more information; for now I just want to know whether anyone agrees with support that our firewall behaved as expected with the settings shown below.

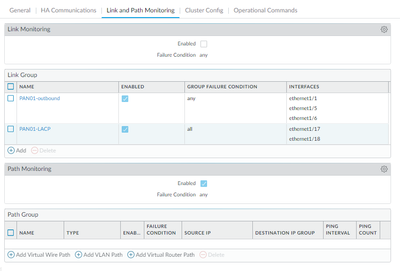

Here is a screenshot of our Link and Path Monitoring settings under High Availability. Note that Link Monitoring is not enabled, although two Link Groups are configured, and that Path Monitoring is enabled, but no Path Groups are defined.

Here are the system log entries around the time one of the failovers occurred (newest first):

2022-07-18T05:23:37.477+00:00 SYSTEM nego-fail ethernet1/18 critical LACP interface ethernet1/18 moved out of AE-group ae8. Selection state Selected

2022-07-18T05:17:35.104+00:00 SYSTEM lacp-up ethernet1/17 critical LACP interface ethernet1/17 moved into AE-group ae8.

2022-07-18T05:16:13.612+00:00 SYSTEM lacp-up ethernet1/18 critical LACP interface ethernet1/18 moved into AE-group ae8.

2022-07-18T05:15:24.282+00:00 SYSTEM nego-fail ethernet1/17 critical LACP interface ethernet1/17 moved out of AE-group ae8. Selection state Selected

2022-07-18T05:15:13.056+00:00 SYSTEM nego-fail ethernet1/18 critical LACP interface ethernet1/18 moved out of AE-group ae8. Selection state Selected

2022-07-18T05:15:13.019+00:00 SYSTEM general high "9: dp0-path_monitor HB failures seen, triggering HA DP down"

2022-07-18T05:15:12.661+00:00 SYSTEM dataplane-down critical HA Group 1: Dataplane is down: path monitor failure

2022-07-18T05:15:12.660+00:00 SYSTEM state-change critical HA Group 1: Moved from state Active to state Non-Functional

2022-07-18T05:15:07.657+00:00 SYSTEM ha2-link-change critical HA2 link down

2022-07-18T05:15:05.065+00:00 SYSTEM general critical Chassis Master Alarm: HA-event

2022-07-18T05:15:05.062+00:00 SYSTEM rasmgr-ha-full-sync-abort informational RASMGR daemon sync all user info to HA peer no longer needed.

2022-07-18T05:15:05.029+00:00 SYSTEM ha2-link-change high HA2-Backup link down

2022-07-18T05:15:03.410+00:00 SYSTEM session-synch high HA Group 1: Ignoring session synchronization due to HA2-unavailable

2022-07-18T05:15:01.602+00:00 SYSTEM ha2-link-change critical All HA2 links down

2022-07-18T05:15:01.598+00:00 SYSTEM keymgr-ha-full-sync-abort informational KEYMGR sync all IPSec SA to HA peer no longer needed.

2022-07-18T05:15:01.586+00:00 SYSTEM routed-fib-sync-self-master informational FIB HA sync started when local device becomes master.

2022-07-18T05:15:01.584+00:00 SYSTEM satd-ha-full-sync-abort informational SATD daemon sync all gateway infos to HA peer no longer needed.

2022-07-18T05:15:01.579+00:00 SYSTEM general informational API key sent by peer is successfully set

2022-07-18T05:15:01.575+00:00 SYSTEM link-change ethernet1/1 informational Port ethernet1/1: MAC Down

2022-07-18T05:15:01.567+00:00 SYSTEM ha1-link-change high HA1-Backup link down

2022-07-18T05:15:01.561+00:00 SYSTEM connect-change high HA Group 1: HA1-Backup connection down

2022-07-18T05:15:01.548+00:00 SYSTEM link-change ethernet1/5 informational Port ethernet1/5: MAC Down

2022-07-18T05:15:01.541+00:00 SYSTEM link-change ethernet1/6 informational Port ethernet1/6: MAC Down

2022-07-18T05:15:01.536+00:00 SYSTEM link-change ethernet1/1 informational Port ethernet1/1: Up 1Gb/s-full duplex

2022-07-18T05:15:01.533+00:00 SYSTEM link-change ethernet1/9 informational Port ethernet1/9: MAC Down

2022-07-18T05:15:01.089+00:00 SYSTEM link-change ethernet1/17 informational Port ethernet1/17: MAC Down

2022-07-18T05:15:00.627+00:00 SYSTEM link-change ethernet1/17 informational Port ethernet1/17: Up 10Gb/s-full duplex

2022-07-18T05:15:00.116+00:00 SYSTEM link-change ethernet1/18 informational Port ethernet1/18: MAC Down

2022-07-18T05:14:59.661+00:00 SYSTEM link-change ethernet1/18 informational Port ethernet1/18: Up 10Gb/s-full duplex

2022-07-18T05:14:59.178+00:00 SYSTEM link-change ethernet1/17 informational Port ethernet1/17: Up 10Gb/s-full duplex

2022-07-18T05:14:58.729+00:00 SYSTEM link-change ethernet1/18 informational Port ethernet1/18: Up 10Gb/s-full duplex

2022-07-18T05:14:58.247+00:00 SYSTEM link-change ethernet1/9 informational Port ethernet1/9: Up 1Gb/s-full duplex

2022-07-18T05:14:57.771+00:00 SYSTEM link-change ethernet1/6 informational Port ethernet1/6: Up 1Gb/s-full duplex

2022-07-18T05:14:57.713+00:00 SYSTEM link-change ethernet1/5 informational Port ethernet1/5: Up 1Gb/s-full duplex

2022-07-18T05:14:57.297+00:00 SYSTEM general critical "all_pktproc_4: Exited 4 times, must be manually recovered."

2022-07-18T05:14:57.159+00:00 SYSTEM general critical "pktlog_forwarding: Exited 4 times, must be manually recovered."

2022-07-18T05:14:57.138+00:00 SYSTEM general critical "all_pktproc_3: Exited 4 times, must be manually recovered."

2022-07-18T05:14:57.103+00:00 SYSTEM general critical "all_pktproc_7: Exited 4 times, must be manually recovered."

2022-07-18T05:14:56.827+00:00 SYSTEM general critical "all_pktproc_8: Exited 4 times, must be manually recovered."

2022-07-18T05:14:56.375+00:00 SYSTEM general critical "all_pktproc_5: Exited 4 times, must be manually recovered."

2022-07-18T05:14:55.917+00:00 SYSTEM general critical "all_pktproc_6: Exited 4 times, must be manually recovered."

2022-07-18T05:14:54.923+00:00 SYSTEM general critical "tasks: Exited 1 times, must be manually recovered."

2022-07-18T05:14:54.903+00:00 SYSTEM ha2-link-change high HA2-Backup peer link down

2022-07-18T05:14:53.703+00:00 SYSTEM ha2-link-change high HA2 peer link down

2022-07-18T05:14:53.586+00:00 SYSTEM general critical "Internal packet path monitoring failure, restarting dataplane"

2022-07-18T05:14:53.584+00:00 SYSTEM general high dp0-path_monitor: exiting because service missed too many heartbeats

2022-07-18T05:14:22.654+00:00 SYSTEM general critical "dp0-path_monitor: Exited 1 times, must be manually recovered."

2022-07-18T05:14:18.148+00:00 SYSTEM general critical "internal_monitor: Exited 1 times, must be manually recovered."

2022-07-18T05:14:18.037+00:00 SYSTEM general critical The dataplane is restarting.

2022-07-18T05:14:18.016+00:00 SYSTEM ha1-link-change high HA1-Backup peer link down

2022-07-18T05:14:00.911+00:00 SYSTEM slot-starting informational Slot 1 (PA-3220) is starting.

2022-07-18T05:14:00.456+00:00 SYSTEM slot-starting informational Slot 1 (PA-3220) is starting.

2022-07-18T05:13:57.494+00:00 SYSTEM slot-starting informational Slot 1 (PA-3220) is starting.

2022-07-18T05:13:57.489+00:00 SYSTEM slot-starting informational Slot 1 (PA-3220) is starting.

2022-07-18T05:13:57.183+00:00 SYSTEM slot-starting informational Slot 1 (PA-3220) is starting.

2022-07-18T05:13:56.682+00:00 SYSTEM link-change ethernet1/2 informational Port ethernet1/2: Down 1Gb/s-full duplex

2022-07-18T05:13:56.660+00:00 SYSTEM link-change ethernet1/3 informational Port ethernet1/3: Down 1Gb/s-full duplex

2022-07-18T05:13:56.650+00:00 SYSTEM link-change ethernet1/4 informational Port ethernet1/4: Down 1Gb/s-full duplex
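Because the entries above are listed newest-first, the sequence is easier to follow once reversed. Below is a minimal Python sketch that parses lines in the format shown here and sorts them oldest-first. The field layout (timestamp, `SYSTEM`, event type, optional object, severity, description) is inferred from these lines only, not from any PAN-OS specification, and `parse_line` and `timeline` are hypothetical helpers.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional

# Layout inferred from the lines in this post (an assumption, not a PAN-OS spec):
# <timestamp> SYSTEM <event> [<object>] <severity> <description>
LINE_RE = re.compile(
    r"^(?P<ts>\S+) SYSTEM (?P<event>\S+) "
    r"(?:(?P<obj>\S+) )?"
    r"(?P<sev>informational|low|medium|high|critical) "
    r"(?P<desc>.*)$"
)


@dataclass
class SysLogEntry:
    ts: datetime
    event: str
    obj: Optional[str]  # e.g. "ethernet1/18"; None for "general" entries
    severity: str
    desc: str


def parse_line(line: str) -> Optional[SysLogEntry]:
    """Parse one system log line; return None if it does not match the layout."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return SysLogEntry(
        ts=datetime.fromisoformat(m["ts"]),
        event=m["event"],
        obj=m["obj"],
        severity=m["sev"],
        desc=m["desc"].strip('"'),
    )


def timeline(lines: Iterable[str]) -> List[SysLogEntry]:
    """Return the parseable entries sorted oldest-first."""
    entries = [e for e in map(parse_line, lines) if e is not None]
    return sorted(entries, key=lambda e: e.ts)


if __name__ == "__main__":
    sample = [
        "2022-07-18T05:15:12.660+00:00 SYSTEM state-change critical HA Group 1: Moved from state Active to state Non-Functional",
        "2022-07-18T05:14:53.584+00:00 SYSTEM general high dp0-path_monitor: exiting because service missed too many heartbeats",
        "2022-07-18T05:14:18.037+00:00 SYSTEM general critical The dataplane is restarting.",
    ]
    for e in timeline(sample):
        print(e.ts.time(), e.severity, e.desc)
```

Sorted this way, the entries in this post show the dataplane restart (05:14:18) and the internal path monitor messages (05:14:53) appearing before the first LACP "moved out of AE-group" entry (05:15:13).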

Rather than paraphrase what support are saying, here is their analysis:

LACP stops receiving packets on both ports 17 & 18 and so the channel goes down.

LACP going down triggers a path monitor failure, which forces the dataplane to go down. Somebody might have configured the FW's virtual router to continuously ping (ICMP) sites upstream of the FW, and those upstream IPs were no longer responsive. Because they were no longer responsive, the FW believed that your monitored path no longer existed and wanted to fail over to your backup FW. So it forced the DP down (actually, it transitioned it to the Non-Functional state) in order to force a failover.

The second FW becomes active.

This happened in both cases.

Could you please check, under the virtual router, whether any of the monitored paths would go across that LACP channel? If so, a further step would be either removing that monitor or, more likely, looking further into the LACP issue. Considering that it happened on both FWs, both stopped receiving LACP packets/traffic and acted as configured, so it might be worth investigating the sources of that LACP traffic or problems along the path from the source to the firewalls.

So support claim that the failover occurred due to a path monitor failure, and specifically that it was the path monitors configured for static routes in the virtual router (we do have multiple static route path monitors configured). Our understanding, however, is that a static route path monitor failure cannot cause an HA failover, and that we have no active link or path monitors configured under High Availability that could have caused it. So even though there was an LACP problem (the entries at the top of the log snippet above), the firewall should not have failed over, as no automatic failover condition is currently configured.
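The poster's reasoning can be written as a small decision model. This is a hypothetical simplification for discussion, not PAN-OS code: the class and function names are invented, link and path groups are reduced to lists of placeholder names, and static route path monitoring is deliberately absent because, on the poster's reading, it only affects routing, not HA state. The last branch, a device going Non-Functional on its own, is the case the replies below end up being about.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HAMonitoringConfig:
    """Hypothetical mirror of the HA Link and Path Monitoring settings."""
    link_monitoring_enabled: bool
    link_groups: List[str] = field(default_factory=list)
    path_monitoring_enabled: bool = False
    path_groups: List[str] = field(default_factory=list)


def failover_reasons(
    cfg: HAMonitoringConfig,
    link_group_failed: bool,
    path_group_failed: bool,
    device_non_functional: bool,
) -> List[str]:
    """Simplified model: which conditions would move the active peer out of Active.

    Static route path monitors in the virtual router are not an input:
    in this model they only withdraw routes and never change HA state.
    """
    reasons = []
    # Link monitoring only counts if it is enabled AND has groups to evaluate.
    if cfg.link_monitoring_enabled and cfg.link_groups and link_group_failed:
        reasons.append("HA link monitoring")
    # Same for path monitoring: enabled with zero path groups monitors nothing.
    if cfg.path_monitoring_enabled and cfg.path_groups and path_group_failed:
        reasons.append("HA path monitoring")
    # A local failure (e.g. dataplane down) makes the device Non-Functional
    # regardless of any configured monitors.
    if device_non_functional:
        reasons.append("device Non-Functional")
    return reasons


# The settings described in the screenshot: two link groups but link
# monitoring disabled; path monitoring enabled but no path groups.
# Group names are placeholders.
screenshot_cfg = HAMonitoringConfig(
    link_monitoring_enabled=False,
    link_groups=["link-group-1", "link-group-2"],
    path_monitoring_enabled=True,
    path_groups=[],
)

print(failover_reasons(screenshot_cfg, True, True, False))  # []
print(failover_reasons(screenshot_cfg, True, True, True))   # ['device Non-Functional']
```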

Please share your thoughts on this. I just want to hear someone else's opinion, as I know I might have tunnel vision and be focusing on the wrong thing instead of looking at it from another perspective. We want to get to the bottom of this and know what path to take to prevent it in the future; however, I have not been able to convince the support engineer that their theory is wrong, and at the same time I cannot accept their explanation myself, since I interpret things differently in this case.

Thanks in advance; I appreciate any help.


08-08-2022 04:25 AM

Hi @Nielsen

I agree with you that the TAC explanation doesn't make a lot of sense.

But at the same time, I am still puzzled by the log message "Dataplane is down: path monitor failure".

You could be facing something similar to what is explained here - https://knowledgebase.paloaltonetworks.com/KCSArticleDetail?id=kA14u000000HCcXCAW&lang=en_US%E2%80%A...

Although you don't see repetitive logs, it could still explain these entries:

2022-07-18T05:14:53.586+00:00 SYSTEM general critical "Internal packet path monitoring failure, restarting dataplane"

2022-07-18T05:14:53.584+00:00 SYSTEM general high dp0-path_monitor: exiting because service missed too many heartbeats

2022-07-18T05:14:22.654+00:00 SYSTEM general critical "dp0-path_monitor: Exited 1 times, must be manually recovered."

2022-07-18T05:14:18.148+00:00 SYSTEM general critical "internal_monitor: Exited 1 times, must be manually recovered."

That could explain the rest of the logs: restarting the dataplane restarts the dataplane interfaces. And if you are using dataplane interfaces for HA1 and HA2 (instead of the dedicated HA ports), this could also explain why all the HA links went down as well.
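To see at a glance which processes the firewall gave up on, the "Exited N times, must be manually recovered" entries can be pulled out of an exported log. A minimal Python sketch; the message pattern is copied from the entries in this thread, and `process_exits` is a hypothetical helper.

```python
import re
from typing import Dict, Iterable

# Pattern taken from the entries quoted in this thread.
EXIT_RE = re.compile(r"([\w-]+): Exited (\d+) times, must be manually recovered")


def process_exits(lines: Iterable[str]) -> Dict[str, int]:
    """Map each process name to the highest exit count reported for it."""
    exits: Dict[str, int] = {}
    for line in lines:
        m = EXIT_RE.search(line)
        if m:
            name, count = m.group(1), int(m.group(2))
            exits[name] = max(exits.get(name, 0), count)
    return exits


log = [
    '2022-07-18T05:14:22.654+00:00 SYSTEM general critical "dp0-path_monitor: Exited 1 times, must be manually recovered."',
    '2022-07-18T05:14:18.148+00:00 SYSTEM general critical "internal_monitor: Exited 1 times, must be manually recovered."',
    '2022-07-18T05:14:57.297+00:00 SYSTEM general critical "all_pktproc_4: Exited 4 times, must be manually recovered."',
]
print(process_exits(log))
# {'dp0-path_monitor': 1, 'internal_monitor': 1, 'all_pktproc_4': 4}
```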

Searching for "Internal packet path monitoring" turns up this thread, which suggests hardware issues: https://live.paloaltonetworks.com/t5/general-topics/pa-failover/td-p/107059 (TAC should be able to confirm this).

I would suggest pushing your TAC engineer to explain those logs and dig deeper in this direction.


08-08-2022 05:17 PM

Thanks @aleksandar.astardzhiev. Support eventually conceded that they had mixed things up, assuming that the path monitor failure log entry referred to the static route path monitor. It was in fact an internal path monitor that failed around the time the LACP group went down.
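The mix-up hinged on reading "Dataplane is down: path monitor failure" as a static route monitor event. One way to avoid that when reviewing logs is to check whether an internal path monitor message appears shortly before it. Below is a heuristic Python sketch: the marker strings are copied from the log in this thread, the 60-second window is an arbitrary assumption, and anything not matched is labelled "unclassified" rather than "static route", since the thread does not show what a static route monitor failure looks like in the system log.

```python
from datetime import datetime, timedelta
from typing import List, Sequence, Tuple

# Marker strings copied from the log in this thread.
INTERNAL_MARKERS = (
    "dp0-path_monitor",
    "Internal packet path monitoring failure",
)
DP_DOWN = "Dataplane is down: path monitor failure"


def _timestamp(line: str) -> datetime:
    # Each line starts with an ISO 8601 timestamp, e.g. 2022-07-18T05:15:12.661+00:00
    return datetime.fromisoformat(line.split(" ", 1)[0])


def classify_dp_down(
    lines: Sequence[str], window: timedelta = timedelta(seconds=60)
) -> List[Tuple[datetime, str]]:
    """Label each 'Dataplane is down: path monitor failure' entry.

    'internal' if an internal path monitor message occurred within `window`
    before it, otherwise 'unclassified'. Heuristic only.
    """
    internal_times = [
        _timestamp(l) for l in lines if any(m in l for m in INTERNAL_MARKERS)
    ]
    results = []
    for line in lines:
        if DP_DOWN in line:
            t = _timestamp(line)
            near = any(timedelta(0) <= t - it <= window for it in internal_times)
            results.append((t, "internal" if near else "unclassified"))
    return results
```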

Once this was cleared up, I was given a link to the 10.1.6 Addressed Issues page, which includes the following item:

PAN-181245 Fixed an internal path monitoring failure issue that caused the dataplane to go down.

This makes much more sense, because it explains how the failover occurred after the internal path monitor failed. We have since upgraded from 10.1.2 to 10.1.6-h3 and are monitoring the situation; hopefully this addresses the issue we've been having. It doesn't explain why LACP failed, though, so if the failover ever happens again I'll update this post.
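For checking other HA pairs on the 10.1 line, a small sketch can compare a version string against the release the poster was pointed to for PAN-181245 (10.1.6). Assumptions: versions look like `10.1.6` or `10.1.6-h3`, a hotfix build sorts after its base release, and the check only answers for the 10.1 train (it returns `None` otherwise, since this thread says nothing about other trains). `parse_panos_version` and `includes_pan_181245_fix` are hypothetical names.

```python
import re
from typing import Optional, Tuple

# Accepts "10.1.6" or "10.1.6-h3" (assumed format).
VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:-h(\d+))?$")

# First 10.1 release whose Addressed Issues list PAN-181245, per this thread.
FIXED_IN_10_1 = (10, 1, 6, 0)


def parse_panos_version(v: str) -> Tuple[int, int, int, int]:
    """Return (major, minor, patch, hotfix); hotfix is 0 when absent."""
    m = VERSION_RE.match(v.strip())
    if m is None:
        raise ValueError(f"unrecognised version string: {v!r}")
    major, minor, patch, hotfix = m.groups()
    return int(major), int(minor), int(patch), int(hotfix or 0)


def includes_pan_181245_fix(v: str) -> Optional[bool]:
    """True/False within the 10.1 train; None for any other train."""
    parsed = parse_panos_version(v)
    if parsed[:2] != FIXED_IN_10_1[:2]:
        return None
    return parsed >= FIXED_IN_10_1


for v in ("10.1.2", "10.1.6-h3"):
    print(v, includes_pan_181245_fix(v))
```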
