Troubleshooting GlobalProtect MTU Issues

02-17-2021 08:30 AM - edited 06-12-2025 02:53 AM

Overview

MTU (Maximum Transmission Unit) usually refers to the maximum amount of data (in bytes) that can be placed as a payload into an L2 frame. In most cases, we are talking about Ethernet on Layer 2 and IP on Layer 3, where the previous statement translates to the maximum IP packet size that can be carried by an Ethernet frame. Formally, each OSI (Open Systems Interconnection) model layer has its own PDU (Protocol Data Unit), which encapsulates data called the SDU (Service Data Unit) as its payload. The SDU received on layer n is the PDU of layer n+1.

Note: Although PDUs at different layers are often all called "packets", the usual naming convention is:

| Layer | PDU name |
|---|---|
| L2 / Data-Link Layer | Ethernet Frame |
| L3 / Network Layer | IP Packet |
| L4 / Transport Layer | TCP Segment or UDP Datagram |

- Why do we need to consider MTU size when we are talking about VPN solutions, such as GlobalProtect?

All VPN solutions add a certain amount of overhead to the original data, effectively reducing the MTU we are able to use (if we are to avoid fragmentation). Additionally, the MTU can be different (lower) at some point along the connection path, which can introduce issues.

- What is fragmentation and why do we want to avoid it?

Fragmentation is the process of splitting an IP packet into multiple fragments (which are IP packets themselves) when the data to be carried exceeds the egress interface MTU. Some of the downsides of fragmentation are:

(a) lower throughput (performance) caused by additional overhead;

(b) additional (CPU) processing time used to handle fragmentation (regardless of whether we are fragmenting the data or reassembling received fragments);

(c) if a single fragment is missing on the receiving end, the upper-layer (such as TCP) retransmission mechanism will cause all the fragments to be re-sent (there is no retransmission or flow control mechanism at the IP layer);

(d) IP fragmentation is used as an evasion technique, making it harder for security devices (such as firewalls) to detect a malicious payload (overlapping fragments, depleting or exceeding buffers, etc.).

Note: This article is focused on IPv4 MTU issues as IPv6 is quite different in handling fragmentation.

Overhead

IPSec Tunnel

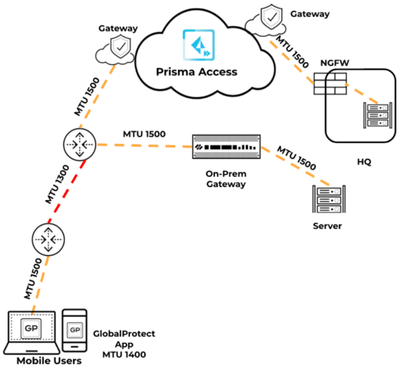

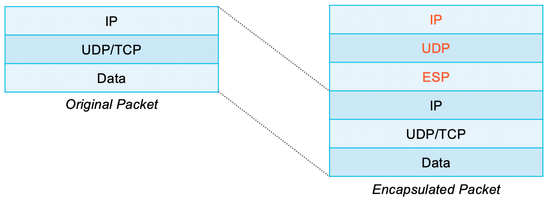

Looking at the overhead added in case of GlobalProtect IPSec tunnel, we have the following:

- additional IP header used to deliver the packet between tunnel endpoints (external tunnel IPs)

- UDP encapsulation used for NAT traversal (port 4501)

- ESP encapsulation

Exact overhead size depends on the cipher used and pad length (which varies based on the input data size).

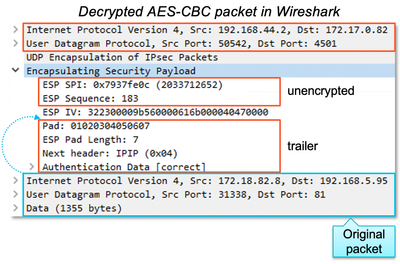

- For AES-CBC (Cipher Block Chaining) cipher, we have the following overhead size:

| Header / Trailer | Size (Bytes) |
|---|---|
| IP | 20 |
| UDP | 8 |
| ESP SPI | 4 |
| ESP Seq | 4 |
| IV (Initialization Vector) | 16 |
| Padding | 0 - 15 |
| Pad Length | 1 |
| Next Header | 1 |
| Authentication Hash | 12 |
| TOTAL | 66 - 81 |

Example of decrypted packet in Wireshark:

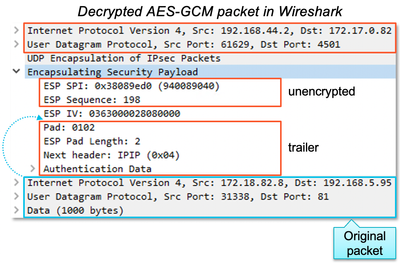

- For AES-GCM (Galois/Counter Mode) cipher:

| Header / Trailer | Size (Bytes) |
|---|---|
| IP | 20 |
| UDP | 8 |
| ESP SPI | 4 |
| ESP Seq | 4 |
| IV (Initialization Vector) | 8 |
| Padding | 0 - 15 |
| Pad Length | 1 |
| Next Header | 1 |
| Authentication Hash | 16 |
| TOTAL | 62 - 77 |
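
The totals in the two IPSec tables can be reproduced by summing the fixed fields and adding the padding range. The following is a minimal Python sketch of that arithmetic only. The dictionary and the function name `overhead_range` are illustrative and not part of any Palo Alto Networks tooling; the byte values are copied from the tables above.

```python
# Fixed per-packet overhead fields in bytes, copied from the tables above.
ESP_FIXED_OVERHEAD = {
    "AES-CBC": {"IP": 20, "UDP": 8, "ESP SPI": 4, "ESP Seq": 4, "IV": 16,
                "Pad Length": 1, "Next Header": 1, "Authentication Hash": 12},
    "AES-GCM": {"IP": 20, "UDP": 8, "ESP SPI": 4, "ESP Seq": 4, "IV": 8,
                "Pad Length": 1, "Next Header": 1, "Authentication Hash": 16},
}
MAX_PADDING = 15  # the tables list padding as 0 - 15 bytes


def overhead_range(cipher):
    """Return (min, max) tunnel overhead in bytes for the given cipher."""
    fixed = sum(ESP_FIXED_OVERHEAD[cipher].values())
    return fixed, fixed + MAX_PADDING


for cipher in ESP_FIXED_OVERHEAD:
    low, high = overhead_range(cipher)
    print(f"{cipher}: {low} - {high} bytes")
```

The difference between the two ciphers comes from the IV (16B vs 8B) and the authentication hash (12B vs 16B), which nets out to 4B less overhead for AES-GCM.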

Example of decrypted packet in Wireshark:

SSL Tunnel

GlobalProtect can use SSL-based tunnel as well, which adds its own overhead.

Note: IPSec tunnel is preferred from a performance perspective. This is not just because SSL tunnels are adding a bit more overhead. The main reason is that the outer SSL tunnel is TCP-based and has flow control (unlike UDP encapsulated IPSec tunnel). This is especially visible for inner tunnel TCP based transfers (HTTP, HTTPS, FTP, SMB, etc.), as we have separate, out-of-sync flow controls for inner and outer tunnel flows.

Added overhead depends on the cipher suite used and padding.

| Cipher Suite | Max Overhead (Bytes) |
|---|---|
| AES_128_CBC_SHA | 113 |
| AES_128_CBC_SHA256 | 125 |
| AES_256_CBC_SHA256 | 125 |
| AES_128_GCM_SHA256 | 85 |
| AES_256_GCM_SHA384 | 85 |

- For example, when using AES_256_GCM_SHA384, overhead consists of:

| Header / Trailer | Size (Bytes) |
|---|---|
| IP | 20 |
| TCP | 20 |
| SSL Content Type | 1 |
| SSL Version | 2 |
| SSL Length | 2 |
| Authentication | 24 |
| Custom Meta-Data | 16 |
| TOTAL | 85 |

Tunnel Interface MTU

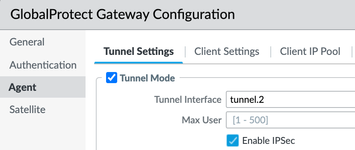

To accommodate the additional overhead, the tunnel interface attached to the GlobalProtect Gateway configuration automatically adjusts its MTU value based on the tunnel type (IPSec vs SSL) and the cipher used. This in turn affects the MSS (Maximum Segment Size) TCP option advertised across the tunnel.

Note that the show interface tunnel.<id> CLI command shows either:

- default platform MTU value or

> show interface tunnel.2

--------------------------------------------------------------------------------

Name: tunnel.2, ID: 259

...

Interface MTU 1500

> show system setting jumbo-frame

Device Jumbo-Frame mode: off

Maximum of frame size excluding Ethernet header: 1500

Current device mtu size: 1500

- explicit MTU value configured on the Tunnel Interface (if configured).

> show interface tunnel.2

--------------------------------------------------------------------------------

Interface MTU 1444

We can see the auto-adjusted MTU value after GlobalProtect tunnel establishes by looking at the output of show global-protect-gateway flow tunnel-id <id> CLI command. Note that the auto-adjustment happens only if we don't have explicit MTU value configured on the tunnel interface (if that is the case, explicit value is used for each tunnel, regardless of negotiated tunnel type/cipher).

Assuming the default system MTU is 1500 and no explicit value is configured on the tunnel interface, we can see different auto-adjusted values for the tunnels depending on the cipher used (in this case IPSec with AES-CBC and with AES-GCM):

> show interface tunnel.2

Interface MTU 1500

> show global-protect-gateway flow tunnel-id 2

assigned-ip remote-ip MTU encapsulation

-----------------------------------------------------------------------------

172.18.82.8 192.168.44.2 1420 IPSec SPI 29053335 (context 24)

or

assigned-ip remote-ip MTU encapsulation

-----------------------------------------------------------------------------

172.18.82.8 192.168.44.2 1424 IPSec SPI 53DBCFFE (context 25)

Note: we can control cipher chosen for IPSec using GlobalProtect IPSec Crypto profiles attached to the GlobalProtect Gateway configuration.

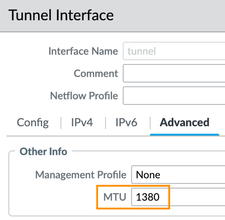

In case we've explicitly configured tunnel MTU value, the same is shown in both CLI command outputs:

> show interface tunnel.2

Interface MTU 1380

> show global-protect-gateway flow tunnel-id 2

assigned-ip remote-ip MTU encapsulation

-----------------------------------------------------------------------------

172.18.82.8 192.168.44.2 1380 IPSec SPI 29F7C1F9 (context 26)

Finally, the auto-adjusted value does take into account the MTU of the interface the GlobalProtect Gateway is tied to. For example, if the Gateway is configured on a loopback interface set with a 1450B MTU, this will be the starting value we deduct from to calculate the final MTU for a particular GlobalProtect tunnel (in this case 1450 - 80 = 1370).

> show interface tunnel.2

Interface MTU 1500

> show interface loopback.2

Interface MTU 1450

> show global-protect-gateway flow tunnel-id 2

...

inner interface: tunnel.2 outer interface: loopback.2

assigned-ip remote-ip MTU encapsulation

-----------------------------------------------------------------------------

172.18.82.8 192.168.44.2 1370 IPSec SPI 5F3753B8 (context 28)

The table below shows the auto-adjustment deduction depending on the tunnel type and cipher, in case we didn't specify explicit value on the tunnel interface.

| Tunnel / Cipher | Auto-adjustment deduction (Bytes) |
|---|---|
| IPSec / AES-CBC | 81 |
| IPSec / AES-GCM | 77 |
| SSL / AES_128_CBC_SHA | 114 |
| SSL / AES_128_CBC_SHA256 | 126 |
| SSL / AES_256_CBC_SHA256 | 126 |
| SSL / AES_128_GCM_SHA256 | 85 / 86 |
| SSL / AES_256_GCM_SHA384 | 85 / 86 |

MSS - Maximum Segment Size

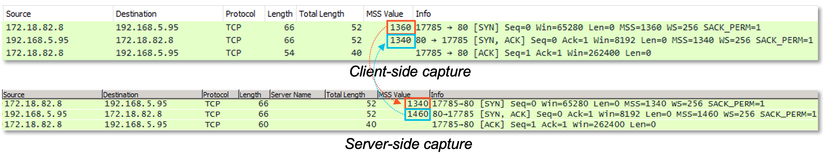

The (auto-)configured tunnel MTU value impacts MSS adjustment during TCP three-way handshakes. The firewall automatically adjusts the MSS for inner tunnel connections based on the MTU value shown in the output of the show global-protect-gateway flow tunnel-id <id> command. In both directions, the MSS is lowered to the tunnel MTU minus 40B (20B for the IP header and 20B for the TCP header) whenever the advertised value is higher. Note that there is no "Adjust TCP MSS" setting for tunnel interfaces (as there is for physical interfaces).

Example:

- tunnel MTU value (as seen in show global-protect-gateway flow tunnel-id <id>) is 1380B

- GlobalProtect client is sending TCP SYN reaching the firewall with the MSS of 1360B (default PAN Virtual Interface MTU is 1400, thus expected MSS size is 1400 - 40 = 1360)

- the value is adjusted to 1340B since 1380 - 40 is lower than 1360 advertised by the client

- server side responds with SYN ACK and the MSS of 1460 (server on LAN network with default NIC MTU of 1500 and expected MSS of 1500 - 40 = 1460)

- the value is adjusted, again, to 1340B since 1340 is lower than 1460
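
The adjustment in the example above amounts to taking the lower of the advertised MSS and the tunnel MTU minus 40B. The following is a minimal Python sketch of that rule. The function name `clamp_mss` is illustrative, and the code only models the arithmetic described here, not the firewall's implementation.

```python
IP_HEADER = 20   # bytes, IPv4 header without options
TCP_HEADER = 20  # bytes, TCP header without options


def clamp_mss(advertised_mss, tunnel_mtu):
    """Return the MSS after adjustment: the advertised value, capped at
    the tunnel MTU minus the IP and TCP header sizes."""
    return min(advertised_mss, tunnel_mtu - IP_HEADER - TCP_HEADER)


tunnel_mtu = 1380
print(clamp_mss(1360, tunnel_mtu))  # MSS in the client SYN
print(clamp_mss(1460, tunnel_mtu))  # MSS in the server SYN ACK
```

Both calls return 1340, matching the example. An advertised MSS that is already below the cap is left unchanged.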

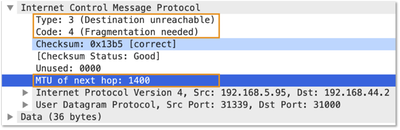

Path MTU Discovery (PMTUD)

Path MTU Discovery is a technique used to determine MTU for a given destination. In general, hosts would make an assumption on the MTU size based on the configuration of their NIC (Network Interface Card), which doesn't mean the same MTU can be applied to the whole network path between the source and the destination. The client can "probe" the path by sending the message to the final destination using the max-allowed MTU (based on the interface settings) and setting the DF (Don't Fragment) bit in the IP header. If there is a place in the network where fragmentation is needed (packet size exceeding egress MTU), a network device (usually a router or a firewall) should send back ICMP Type 3 Code 4 message (Destination Unreachable, Fragmentation Needed and DF set) to the sender, alongside the next hop (egress) MTU. This would allow for the sender to dynamically adjust MTU size when sending packets to the particular destination. Note that ICMP message is not sent if DF bit is not set (even if fragmentation needs to occur).

PMTUD is often crippled as network devices (mostly firewalls) are commonly configured to filter out (drop) ICMP messages due to security concerns.

As a result, traffic may be black holed: packets exceeding MTU can't be fragmented, due to DF bit set, while no return information is reaching the sender. In case DF bit isn't set, traffic exceeding MTU should be fragmented on egress. Still there is no guarantee that fragmentation isn't disabled on a network device and some devices may be set to drop fragments instead of forwarding them.
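
The PMTUD behavior and the black-hole case can be illustrated with a small simulation. This is a sketch in Python under simplifying assumptions: the path is modeled as a plain list of egress MTUs, every hop honors the DF bit, and ICMP filtering is a single on/off switch. The function names `send_with_df` and `discover_path_mtu` are hypothetical.

```python
def send_with_df(packet_size, hop_mtus, icmp_filtered=False):
    """Simulate one DF-marked packet crossing the hops in order.

    Returns ("delivered", None), ("frag_needed", next_hop_mtu) when an
    ICMP Type 3 Code 4 reply reaches the sender, or ("black_holed", None)
    when the packet is dropped and the ICMP reply is filtered.
    """
    for mtu in hop_mtus:
        if packet_size > mtu:
            if icmp_filtered:
                return "black_holed", None
            return "frag_needed", mtu
    return "delivered", None


def discover_path_mtu(local_mtu, hop_mtus, icmp_filtered=False):
    """Lower the packet size until it is delivered; None if black holed."""
    size = local_mtu
    while True:
        status, next_hop_mtu = send_with_df(size, hop_mtus, icmp_filtered)
        if status == "delivered":
            return size
        if status == "black_holed":
            return None
        size = next_hop_mtu  # always smaller than size, so the loop ends


print(discover_path_mtu(1500, [1500, 1400, 1200, 1500]))
print(discover_path_mtu(1500, [1500, 1400, 1200, 1500], icmp_filtered=True))
```

With ICMP allowed, the sender converges on 1200 after two "Fragmentation Needed" replies. With ICMP filtered, the function returns None because the sender never learns why its packets are lost.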

We can check whether a host dynamically adjusted MTU for a destination using netsh interface ipv4 show destinationcache on Windows or ip route get and tracepath -n on Linux. For example, two destinations listed below both have the same next hop address, but different MTU, as the network path leading to them is different.

> netsh interface ipv4 show destinationcache

Interface 12: LAN-Trust

PMTU Destination Address Next Hop Address

---- --------------------------------------------- -------------------------

1500 192.168.5.95 192.168.5.80

1400 192.168.44.2 192.168.5.80

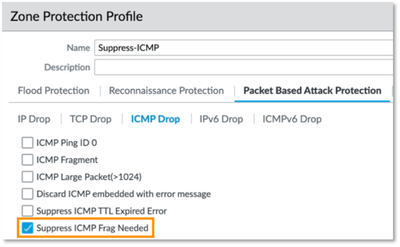

Palo Alto Networks firewall can send ICMP Type 3 Code 4 message if the following conditions are met:

- DF bit is set for the packet,

- Egress interface MTU is lower than the packet size,

- Suppression of "ICMP Frag Needed" messages is not configured in Zone Protection profile attached to the packet's ingress zone.

In this case, following global counters would increment:

flow_fwd_ip_df_drop Packets dropped: exceeded MTU but DF bit present

flow_dos_icmp_replyneedfrag Packets dropped: Unsuprressed ICMP Need Fragmentation

If we did set the suppression of "ICMP Frag Needed" messages, we'll see the following global counters:

flow_fwd_ip_df_drop Packets dropped: exceeded MTU but DF bit present

flow_dos_pf_noreplyneedfrag Packets dropped: Zone protection option 'suppress-icmp-needfrag'

Note that if the DF bit is not set, the ICMP message is not sent (regardless of whether or not fragmentation is needed).

Palo Alto Networks firewall does not copy the inner GlobalProtect tunnel traffic DF bit value to outer tunnel IP header. Outer tunnel encapsulation does not have the DF bit set!

This implies that the outer tunnel traffic can always be fragmented by intermediate devices, unless these devices explicitly don't perform fragmentation (due to configuration or some other limitation).

The GlobalProtect client, on the other hand, doesn't set the DF bit for IPSec traffic, but does set it for SSL tunnel.

Scenarios

Let's have a look at some sample scenarios illustrating different behaviors and potential issues.

Scenario 1

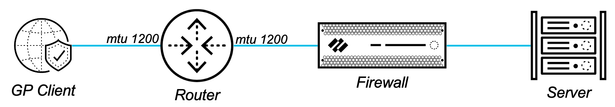

Router in the network path between GlobalProtect client and GlobalProtect gateway has lower MTU.

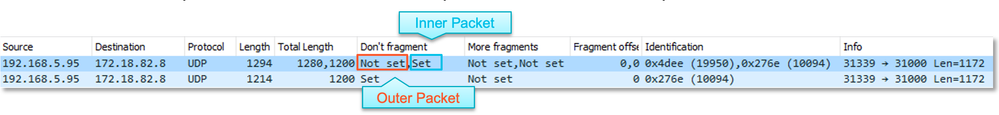

- Encapsulated (tunneled) packets sent from GlobalProtect client and the firewall don't have DF bit set (IPSec tunnel)

- This means that the packets should be fragmented by the router on the path if 1200 MTU is smaller than the actual packet size

- Problem may arise if the router on the path doesn't perform fragmentation

- In that case, traffic would be black holed (dropped), and manually adjusting (lowering) MTU on GlobalProtect client/server end may be required

- For SSL (TCP) based tunnel, the issue can be mitigated if the router is able to adjust (lower) MSS in transit (during three-way handshake)

Scenario 2

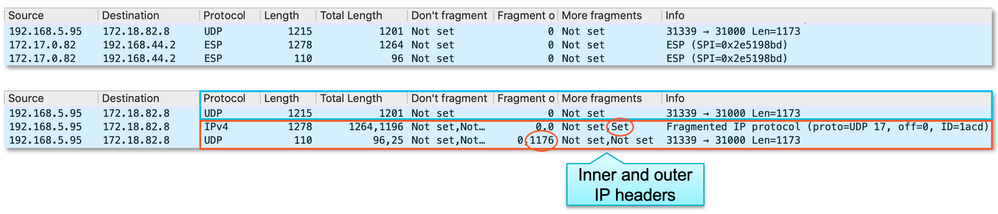

Server sending packets larger than MTU used on the GlobalProtect gateway tunnel interface.

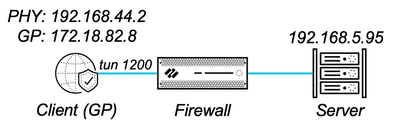

- Server sends a packet which is 1B above the tunnel limit - 1201B, without DF bit set

- Firewall is able to fragment the data and encapsulates the fragments

- Global counters will show fragmentation: packet received exceeding MTU and two fragments sent (packet divided into 2 parts)

flow_fwd_mtu_exceeded 1 info flow forward Packets lengths exceeded MTU

flow_ipfrag_frag 2 info flow ipfrag IP fragments transmitted

- Debug logs will show the same:

IP: 192.168.5.95->172.18.82.8, protocol 17

version 4, ihl 5, tos 0x00, len 1201,

...

Resolving tunnel 2

Packet exceeded MTU 1200, perform IP fragmentation prior to encap or original packet contains fragmentation

Packet entered tunnel (2) encapsulation

...

version 4, ihl 5, tos 0x00, len 1196,

...

version 4, ihl 5, tos 0x00, len 25,

- Finally, PanGPS logs on GlobalProtect client side will show fragmented data received

Debug(2039): Received a tunnel packet with fragment 0x2000

Debug(2039): Received a tunnel packet with fragment 0x93

- Note that 0x2000 and 0x93 represent the IP header fragmentation flags (3 bits: Reserved, DF - Don't Fragment, MF - More Fragments) and the fragment offset field (13 bits representing the offset in 8B blocks)

- 0x2000 translates to the MF (More Fragments) flag set and 0 offset (first fragment)

- 0x93 translates to 147 decimal, which multiplied by 8 (for 8B blocks) equals an offset of 1176 with no flags set (which can be seen in the Wireshark capture above made on the firewall)
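
The fragment sizes and the 0x2000 / 0x93 values in this scenario follow from standard IPv4 fragmentation arithmetic. The following is a minimal Python sketch of it, assuming a 20B IP header without options. The function names `fragment` and `decode_frag_field` are illustrative and do not correspond to any firewall or PanGPS function.

```python
IP_HEADER = 20    # ihl 5 in the debug log means a 20-byte header
DF_FLAG = 0x4000  # Don't Fragment
MF_FLAG = 0x2000  # More Fragments


def fragment(total_len, mtu):
    """Split an IPv4 packet of total_len bytes for the given egress MTU.

    Returns a list of (fragment_total_length, frag_field) tuples, where
    frag_field is the 16-bit flags + fragment offset value.
    """
    payload = total_len - IP_HEADER
    # Every fragment except the last must carry a multiple of 8 data bytes.
    chunk = (mtu - IP_HEADER) // 8 * 8
    fragments = []
    offset = 0
    while offset < payload:
        size = min(chunk, payload - offset)
        more = offset + size < payload
        field = (MF_FLAG if more else 0) | (offset // 8)
        fragments.append((size + IP_HEADER, field))
        offset += size
    return fragments


def decode_frag_field(field):
    """Return (df, mf, byte_offset) from the 16-bit flags/offset field."""
    return bool(field & DF_FLAG), bool(field & MF_FLAG), (field & 0x1FFF) * 8


for length, field in fragment(1201, 1200):
    print(length, hex(field), decode_frag_field(field))
```

For the 1201B packet and 1200B MTU used here, the sketch yields fragments of 1196B and 25B with field values 0x2000 and 0x93, which matches the debug log and the PanGPS messages.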

Scenario 3

Looking at the same setup as in Scenario 2, what happens if the firewall receives 1300B packet from the GlobalProtect client on a tunnel interface (without fragmentation) with 1200B MTU?

- Firewall will accept the packet

- There is no MTU check on ingress; MTU is only considered when a packet is egressing the interface

- Logs are showing packet with the size of 1300B is received on an interface (id 259, matching tunnel.2) with 1200B MTU set

Packet received at ingress stage, tag 0, type ATOMIC

Packet info: len 1314 port 4 interface 259 vsys 1

IP: 172.18.82.8->192.168.5.95, protocol 17

version 4, ihl 5, tos 0x00, len 1300,

...

> show interface all

tunnel.2 259 1 GP-zone vr:default

> show interface tunnel.2

Interface MTU 1200

MTU vs MRU

- There is a difference between MTU and MRU (Maximum Receive Unit)

- MRU is usually larger than MTU and can be checked via sdb (for non-VM platforms); In the example below, MRU is 10048B for jumbo-frame enabled interfaces with 9192 MTU:

> show system state filter-pretty sw.dev.runtime.ifmon.port-states

sw.dev.runtime.ifmon.port-states: {

ethernet1/1: {

farloop: False,

link: Up,

mode: Autoneg,

mru: 10048,

nearloop: False,

pause-frames: True,

setting: 1Gb/s-full,

type: RJ45,

> show system state filter-pretty sys.s1.p*.cfg

> show system state filter-pretty sw.dev.interface.config

ethernet1/1: {

hwaddr: b4:0c:25:cc:bb:aa,

mtu: 9192,

},

Scenario 4

Again, looking at the same setup as in Scenario 2, what happens if the server sends a packet larger than the MTU (1201B vs 1200B MTU) with the DF bit set?

- Firewall will drop the packet, as it can't be fragmented (note 0x4000 for fragmentation related fields in the IP header)

Packet received at ingress stage, tag 0, type ORDERED

Packet decoded dump:

IP: 192.168.5.95->172.18.82.8, protocol 17

version 4, ihl 5, tos 0x00, len 1201,

id 56862, frag_off 0x4000, ttl 64, checksum 64403(0x93fb)

...

Route found, interface tunnel.2, zone 4, nexthop 172.18.82.0

Packet dropped, Need fragment for intf 259 but DF bit present

Notify ICMP error flow 163534 type 3 code 4

- Counters will show that the packet has been dropped and that an ICMP message has been sent to the server (in case suppression hasn't been configured)

flow_fwd_ip_df_drop 1 drop flow forward Packets dropped: exceeded MTU but DF bit present

flow_dos_icmp_replyneedfrag 1 warn flow dos Packets dropped: Unsuprressed ICMP Need Fragmentation

Ignore DF bit

- In PAN-OS 10.0.0 / 9.1.3 / 9.0.9 we've added the feature to ignore (clear) DF bit

- This is a global command (affects all the traffic)

- It can be activated via CLI command debug dataplane set ip4-ignore-df yes, as seen here:

> debug dataplane set ip4-ignore-df yes

> show system state filter-pretty sw.comm.s1.dp0.flow-data

...

ip4-ignore-df: True,

- If the packet is fragmented while ignoring DF bit, global counters will show:

flow_fwd_mtu_exceeded 1 info flow forward Packets lengths exceeded MTU

flow_fwd_ip_df_ignore 1 info flow forward Packets overwrite DF bit off

flow_ipfrag_frag 2 info flow ipfrag IP fragments transmitted

- debug logs will show:

Overwrite DF bit off on intf 259
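
The egress behavior described in Scenarios 2 and 4 and in this section can be summarized as a small decision function. The Python sketch below is a simplified model and not the firewall's actual logic: the function name `egress_decision` and its parameters are hypothetical, and the Zone Protection setting of the ingress zone is reduced to a single boolean. The counter names are the ones quoted in this article.

```python
def egress_decision(packet_len, df_set, egress_mtu,
                    suppress_icmp_needfrag=False, ignore_df=False):
    """Return (action, counters) for a packet about to leave an interface.

    action is one of "forward", "fragment", "drop" or
    "drop_and_send_icmp"; counters lists the global counters that the
    article shows incrementing in that case.
    """
    if packet_len <= egress_mtu:
        return "forward", []
    if not df_set:
        return "fragment", ["flow_fwd_mtu_exceeded", "flow_ipfrag_frag"]
    if ignore_df:
        # DF is cleared globally (debug dataplane set ip4-ignore-df yes).
        return "fragment", ["flow_fwd_mtu_exceeded",
                            "flow_fwd_ip_df_ignore",
                            "flow_ipfrag_frag"]
    if suppress_icmp_needfrag:
        return "drop", ["flow_fwd_ip_df_drop", "flow_dos_pf_noreplyneedfrag"]
    return "drop_and_send_icmp", ["flow_fwd_ip_df_drop",
                                  "flow_dos_icmp_replyneedfrag"]


print(egress_decision(1201, False, 1200))                 # Scenario 2
print(egress_decision(1201, True, 1200))                  # Scenario 4
print(egress_decision(1201, True, 1200, ignore_df=True))  # Ignore DF bit
```

Reading the counters in reverse is the practical use: seeing flow_fwd_ip_df_drop together with flow_dos_pf_noreplyneedfrag, for example, suggests oversized DF-marked packets are being dropped without the sender being notified.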

Fragmentation Detection

The easiest way to detect fragmentation happening on the firewall (GlobalProtect gateway) side is looking over the global counters:

flow_fwd_mtu_exceeded info flow forward Packets lengths exceeded MTU

flow_ipfrag_frag info flow ipfrag IP fragments transmitted

flow_ipfrag_recv info flow ipfrag IP fragments received

flow_ipfrag_merge info flow ipfrag IP defragmentation completed

Note: Global counters flow_fwd_mtu_exceeded and flow_ipfrag_frag may increment, indicating fragmentation activity, even when there is no actual fragmentation happening on the wire. This can happen only if firewall-side packet captures are enabled at the same time! The reason is that the DP (Data Plane) needs to encapsulate packets received on the wire with an additional header and meta-data when forwarding them to the MP (Management Plane) varrcvr process, which stores the packets in the configured capture file(s). If the captured packets are close to the MTU limit, the additional overhead will likely push them above the DP configured MTU, thus triggering fragmentation towards the MP. When using global counters to confirm whether fragmentation is happening, make sure firewall-side packet captures are turned off.

- Also note that it's easy for fragmented packets to go unnoticed when viewing the .pcaps in Wireshark with filters applied. For example, if we are filtering for UDP or TCP traffic, Wireshark will show only one fragment/packet, as only one contains an L4 header, while the rest of the fragments will not be shown. Again, fragmentation is done at L3 (IP) and everything above is considered "data" (L4 and other upper-layer headers and data).

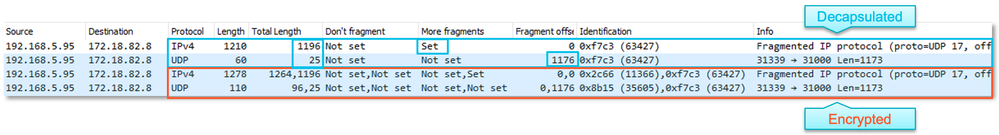

- Firewall-side packet captures will show encrypted data in the transmit stage. In order to see whether the inner payload has been fragmented, we would need to decrypt the data (assuming we have the session keys). The images below show the encrypted and decrypted Tx (Transmit) stage for packets that were fragmented (exceeding tunnel MTU) and then encapsulated. Wireshark was set to present fragmentation-related IP fields as columns, and for decrypted data we can see both inner and outer packet flags.

- Firewall captures will show fragmented traffic (without decrypting the data) if the fragmentation was done on the opposite end of the tunnel (GlobalProtect client), since we'll see the packets in the Rx (Receive) stage both as ESP and then again after decryption (decapsulation).

- Firewall captures would also show fragmentation if intermediate devices would fragment external tunnel traffic (no DF bit set, eligible for fragmentation).

- On the GlobalProtect client side we can use PanGPS logs which will show when fragmented packets are received from the tunnel (virtual) interface

Debug(2039): Received a tunnel packet with fragment 0x2000

Debug(2039): Received a tunnel packet with fragment 0x93

- As mentioned before, 0x2000 and 0x93 represent the IP header fragmentation flags (3 bits: Reserved, DF - Don't Fragment, MF - More Fragments) and the fragment offset field (13 bits representing the offset in 8B blocks), where 0x2000 translates to the MF (More Fragments) flag set and 0 offset (first fragment), and 0x93 translates to 147 decimal, which multiplied by 8 (for 8B blocks) equals an offset of 1176 with no flags set

- If the client is receiving fragmented data locally for the tunnel, log messages will show:

Debug(1327): Received a virtual interface packet with fragment 0x2000

Debug(1327): Received a virtual interface packet with fragment 0xAC

Configuration Options

GlobalProtect Gateway Side

In case the auto-adjusted MTU value for GlobalProtect gateway side tunnels is not low enough and we are seeing fragmentation-related issues or performance impact, we can set the preferred value statically on the tunnel interface (see Tunnel Interface MTU section). One benefit of auto-adjusted values is that a different MTU can be applied to individual tunnels based on the protocol/cipher selection. If we configure the value explicitly, we should assume the worst-case scenario in terms of protocol/cipher selection. Additionally, we should account for the specific environment:

- whether there is extra encapsulation added (not directly related to GlobalProtect remote access solution),

- what are the ISP MTU limitations,

- what are the network path limitations (though it's hard to know this upfront, if clients can access GlobalProtect gateway from any place/network).
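
One way to approach the worst-case calculation is to subtract the largest applicable deduction, plus any extra encapsulation, from the lowest MTU expected on the path. The following Python sketch shows that arithmetic under stated assumptions: the deductions are copied from the table in the Tunnel Interface MTU section (using the larger value where two are listed), while the function name `explicit_tunnel_mtu`, the path MTU, and the extra encapsulation size are hypothetical inputs that must come from your own environment.

```python
# Auto-adjustment deductions in bytes, copied from the table in the
# Tunnel Interface MTU section (larger value used where two are listed).
DEDUCTION = {
    "IPSec / AES-CBC": 81,
    "IPSec / AES-GCM": 77,
    "SSL / AES_128_CBC_SHA": 114,
    "SSL / AES_128_CBC_SHA256": 126,
    "SSL / AES_256_CBC_SHA256": 126,
    "SSL / AES_128_GCM_SHA256": 86,
    "SSL / AES_256_GCM_SHA384": 86,
}


def explicit_tunnel_mtu(path_mtu, allowed, extra_encapsulation=0):
    """Return a conservative tunnel MTU for the allowed tunnel/cipher
    combinations, given the lowest expected path MTU and the size of any
    additional encapsulation (both supplied by the caller)."""
    worst_case = max(DEDUCTION[name] for name in allowed)
    return path_mtu - extra_encapsulation - worst_case


# Example: IPSec with SSL fallback over a path assumed to carry 1500B.
print(explicit_tunnel_mtu(1500, ["IPSec / AES-GCM",
                                 "SSL / AES_256_GCM_SHA384"]))
```

The result is only a starting point. The actual value should be validated against observed fragmentation counters and client behavior.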

GlobalProtect Client Side

The default MTU on the GlobalProtect client side is 1400B. Most of the time, this value is good enough: it doesn't break any functionality, and it isn't so low that it noticeably reduces performance/throughput. There are cases where the value isn't low enough, usually due to additional (unaccounted for) encapsulation being added on the client side (such as GRE encapsulation added by third-party software) or ISP-related limitations.

It is possible to change the MTU value manually using commands such as:

//Windows

> netsh int ipv4 set subinterface "Ethernet 4" mtu=1300

PS > Set-NetIPInterface -InterfaceIndex 12 -NlMtuBytes 1300

//macOS

sudo ifconfig utun2 mtu 1300

or push the settings via GPO or other enterprise tools.

The preferred method is changing the value via GlobalProtect Portal configuration, which is possible starting with GlobalProtect client version 5.2.4 and content release 8346.

As of Globalprotect application/agent version 5.2 the mtu is configurable from the Globalprotect portal with the option "Configurable Maximum Transmission Unit for GlobalProtect Connections" 🙂

Hello, what would be the lowest possible value, that is safe for performance. We have tens of thousands of clients and most of the time there is no issue, however for some clients there is keep-alive timeout issues all the time. We currently have 1360 value for MTU. What could be the risk if we make it too small - for example 1200?
