Double NAT return packet dropping in firewall

06-29-2022 10:49 AM - edited 06-29-2022 10:50 AM

Can anyone help point out whether I am missing something obvious here? I have a new vendor over an Amazon AWS VPN for whom I have to double NAT inbound traffic (because they are using IP ranges that clash with our existing network and with best practices, i.e. using 10.0.0.0/24 and public IPs in their private AWS network).

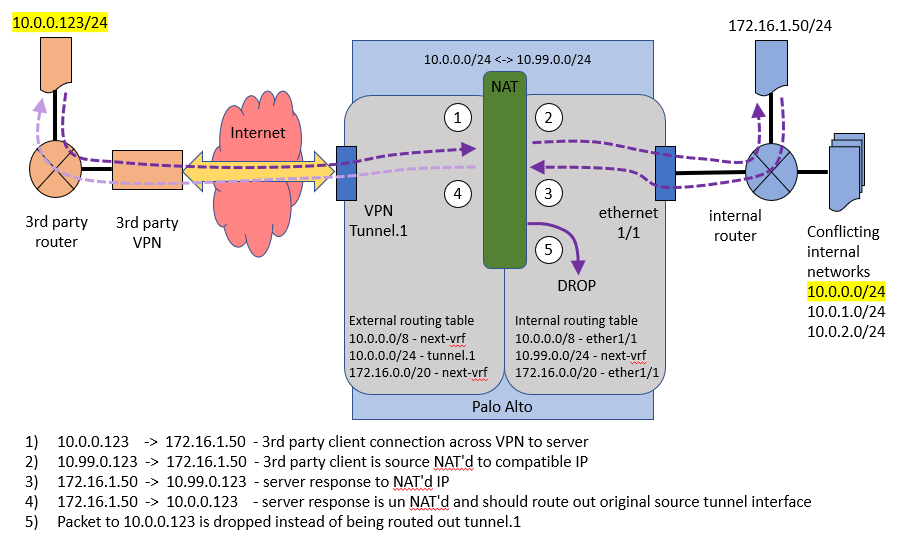

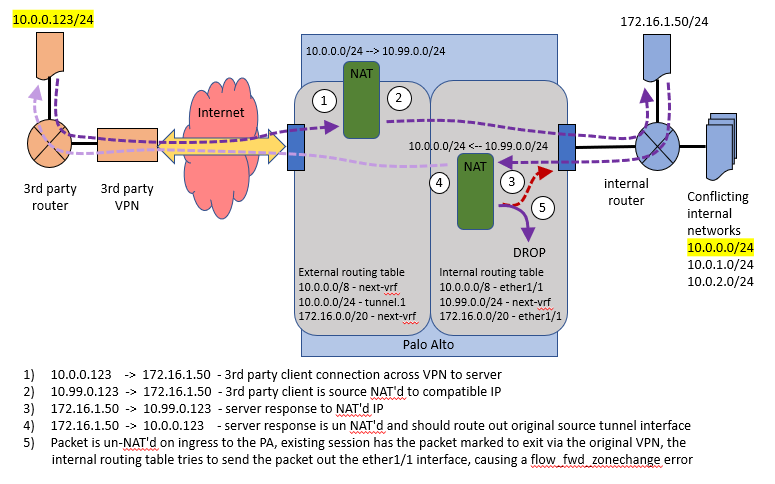

The VPN comes in as a tunnel on a VPN security zone and our external routing table. I have a double NAT to convert their source and destination packet addresses from their network ranges to our network ranges. The newly NAT'd packet then goes to our internal routing table, the server responds, the response packet comes back, is un-NAT'd, and is then dropped... The Monitor Traffic logs say the packet is allowed and NAT'd correctly. I have the NAT'd source network in my internal routing table, pointing to the external routing table. I have the vendor's destination network in my external routing table, pointing over the VPN. I cannot put it in my internal routing table because it conflicts with existing internal routes. What am I doing wrong?

Vendor sends packet from their source to our destination across VPN:

10.0.0.57 -> 10.240.7.3 *packet in receive capture

We receive and double NAT traffic:

10.0.0.57 -> 10.240.7.3 becomes 10.79.7.57 -> 10.20.0.102

Packet routed to internal network and response from server:

10.79.7.57 -> 10.20.0.102 *packet in transmit capture

10.20.0.102 -> 10.79.7.57 *packet in receive capture

Packet is un-NAT'd to go back out VPN tunnel:

10.20.0.102 -> 10.79.7.57 un-NATs to 10.240.7.3 -> 10.0.0.57

Packet should be sent out VPN tunnel, but is instead dropped:

10.240.7.3 -> 10.0.0.57 *packet in drop capture
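For anyone following the address rewriting, here is a minimal Python sketch of the translation steps listed above. It only models the two one-to-one mappings from the example; the `Flow` type and the function names are made up for illustration and say nothing about how PAN-OS implements NAT internally.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    dst: str


# One-to-one mappings taken from the example addresses in this post.
SRC_NAT = {"10.0.0.57": "10.79.7.57"}    # vendor source -> our NAT'd source
DST_NAT = {"10.240.7.3": "10.20.0.102"}  # vendor-facing destination -> real server


def nat_inbound(flow: Flow) -> Flow:
    """Apply source and destination NAT to a packet arriving from the VPN."""
    return Flow(SRC_NAT[flow.src], DST_NAT[flow.dst])


def unnat_return(flow: Flow) -> Flow:
    """Reverse both translations on the server's reply."""
    rev_src = {v: k for k, v in DST_NAT.items()}  # server -> vendor-facing address
    rev_dst = {v: k for k, v in SRC_NAT.items()}  # NAT'd source -> vendor source
    return Flow(rev_src[flow.src], rev_dst[flow.dst])


inbound = Flow("10.0.0.57", "10.240.7.3")
translated = nat_inbound(inbound)             # what the internal server sees
reply = Flow(translated.dst, translated.src)  # server answers the NAT'd source
restored = unnat_return(reply)                # what should leave via the VPN

print(translated)
print(restored)
```

The restored reply is addressed to 10.0.0.57, which is exactly the address that has no usable route in the internal routing table.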

External routing table where VPN is attached:

10.0.0.0/8 -> next-vr internal

10.0.0.0/24 -> tunnel.8000 (VPN)

10.240.7.0/24 -> tunnel.8000 (VPN)

Internal routing table:

10.0.0.0/8 -> core router

10.79.7.0/24 -> next-vr external

* 10.0.0.0/24 can not exist here, pointing to the VPN/external table, because it conflicts with internal networks
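To make the routing conflict concrete, here is a small Python sketch of a longest-prefix-match lookup over the two tables above. The table contents are copied from this post; the `lookup` helper is a hypothetical illustration of ordinary longest-prefix matching, not of PAN-OS internals.

```python
import ipaddress

# Routes copied from the two routing tables listed above.
EXTERNAL_VR = {
    "10.0.0.0/8": "next-vr internal",
    "10.0.0.0/24": "tunnel.8000",
    "10.240.7.0/24": "tunnel.8000",
}
INTERNAL_VR = {
    "10.0.0.0/8": "core router",
    "10.79.7.0/24": "next-vr external",
}


def lookup(table, address):
    """Return the next hop of the most specific matching route, or None."""
    ip = ipaddress.ip_address(address)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in table.items()
        if ip in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(lookup(INTERNAL_VR, "10.79.7.57"))  # reply destination before un-NAT -> next-vr external
print(lookup(INTERNAL_VR, "10.0.0.57"))   # reply destination after un-NAT  -> core router
print(lookup(EXTERNAL_VR, "10.0.0.57"))   # same address in the external VR -> tunnel.8000
```

If the lookup for the reply is done in the internal table after un-NAT, 10.0.0.57 only matches 10.0.0.0/8 and heads for the core router instead of the tunnel.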

06-30-2022 03:11 AM

Did you check the global counters to work out why these packets are being discarded?

show counter global filter delta yes packet-filter yes

It could help to set up a policy-based forwarding rule with symmetric return enabled, to ensure packets go back to your external router no matter what the routing table dictates.

Are they at all capable of performing source NAT on the AWS side, so you receive connections from a different subnet entirely?

PANgurus - Strata & Prisma Access specialist

06-30-2022 05:33 AM

Hi @Adrian_Jensen ,

I agree with @reaper that the global counters could help identify the main reason the packet is being dropped. Zone protection with spoof protection and/or strict IP check could be one possible reason.

Unfortunately, I am almost certain that the problem is the missing route in the internal VR... I am also not sure that symmetric return in a PBF rule will help, because this feature relies on the ARP table and MAC addresses - https://docs.paloaltonetworks.com/pan-os/9-1/pan-os-admin/policy/policy-based-forwarding/pbf/egress-... There is no ARP involved for tunnel interfaces or for inter-VR traffic...

Definitely check the counters and rule out any zone protection first, as I could be wrong, but to me the only solution is to have the traffic source NAT-ed on the AWS side before it is received by the PAN FW. Unfortunately, it seems this is not an easy task either - https://aws.amazon.com/premiumsupport/knowledge-center/configure-nat-for-vpn-traffic/

06-30-2022 11:37 AM - edited 06-30-2022 12:29 PM

So I've spent a couple of hours playing with PBFs and trying not to break all our other routing. The only ways I could make a PBF that affected traffic were to either 1) have a PBF that took inbound VPN traffic and routed it to the internal interface, but that broke NAT'ing, or 2) create a PBF from the internal IP to the un-NAT'd vendor network IP and route it out the VPN. With option 2 the packet no longer shows up in the drop capture, but it also doesn't show up in the transmit capture. Where the vendor's subnet conflicts with the internal subnet, this just breaks things anyway.

According to the global counters, it looks like the packets are being dropped for zone policies:

flow_policy_deny 21 0 drop flow session Session setup: denied by policy

flow_fwd_zonechange 283 1 drop flow forward Packets dropped: forwarded to different zone

But I already have wide-open security policies allowing VPN zone to internal and internal to VPN zone. Using the test policy match, everything comes up allowed with the expected rules. Zone protection is not enabled on either the internal Trust zone or the site-to-site-VPN zone where the VPN tunnel terminates.
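If you want to sort through a longer counter dump, a short Python sketch like the following can pull out the drop counters. It assumes each line has seven whitespace-separated columns (name, value, rate, severity, category, aspect, description), which is inferred from the two lines quoted above; the `Counter` type and `parse_counter` helper are hypothetical names, not part of any Palo Alto tool.

```python
from typing import NamedTuple


class Counter(NamedTuple):
    name: str
    value: int
    rate: int
    severity: str
    category: str
    aspect: str
    description: str


def parse_counter(line: str) -> Counter:
    """Split one counter line into its assumed seven columns."""
    name, value, rate, severity, category, aspect, description = line.split(None, 6)
    return Counter(name, int(value), int(rate), severity, category, aspect, description)


# The two counter lines quoted in this post.
OUTPUT = """\
flow_policy_deny 21 0 drop flow session Session setup: denied by policy
flow_fwd_zonechange 283 1 drop flow forward Packets dropped: forwarded to different zone
"""

counters = [parse_counter(line) for line in OUTPUT.splitlines() if line.strip()]

# Keep only drop-severity counters, largest first.
drops = sorted(
    (c for c in counters if c.severity == "drop"),
    key=lambda c: c.value,
    reverse=True,
)
for c in drops:
    print(f"{c.name}: {c.value} ({c.description})")
```

Sorted this way, flow_fwd_zonechange comes out on top, well ahead of flow_policy_deny.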

07-05-2022 03:22 PM - edited 07-05-2022 03:23 PM

So I am extremely frustrated with this. It appears that Palo Alto can not do double NAT. More specifically, PA can not do the first half, source NAT of incoming traffic on an interface and properly return that traffic (once un-NAT'd) out the same interface. Source NAT'ing outbound traffic works, source NAT'ing inbound traffic doesn't work.

Being able to source NAT inbound traffic is important where you have a third party connection sending you traffic (such as over a VPN) from a source range that conflicts with your internal networks and the third party is unable or unwilling to source NAT outbound traffic on their side.

Example traffic flow:

I can place an internal route, or a PBF, to 10.0.0.0/24 in the PA and force traffic out the VPN, but that breaks all other traffic actually destined for that block on the internal network and negates the whole purpose of source NAT'ing.

07-06-2022 07:35 AM - edited 07-06-2022 07:37 AM

Hi @Adrian_Jensen ,

I would argue that the problem is not PAN-specific... You would have the same issue with any other network vendor in this situation: you need to have a route for the network after the NAT.

You may want to try Packet Tracing - https://beacon.paloaltonetworks.com/student/path/1028945 but, as suggested in the training, it should be performed during a maintenance window.

"Packets dropped: forwarded to different zone" is usually related to a routing change during an active session. Is this constant traffic, like a constant ping or UDP or something else that is continuously trying to send traffic, or is it initiated on demand? It may be worth clearing any related sessions and checking whether you still see "forwarded to different zone".

My guess is that the reply is un-NATed first and the route lookup is performed afterwards. That lookup takes the route in the internal VR, which points to a different zone, so the reply no longer matches the existing session (that is, the traffic is forwarded to a different zone from the one the original packet was received on).
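A toy Python model of that hypothesis, purely to illustrate the reasoning: the zone names are the ones mentioned earlier in this thread, while the `Session` type, the next-hop-to-zone mapping and the `forward_reply` check are assumptions made up for this sketch and are not confirmed PAN-OS behaviour.

```python
from typing import NamedTuple


class Session(NamedTuple):
    ingress_zone: str  # zone where the first packet of the session arrived
    egress_zone: str   # zone where the first packet left


# Assumed mapping from a route's next hop to the zone of its egress interface.
ZONE_OF_NEXT_HOP = {
    "tunnel.8000": "site-to-site-VPN",
    "core router": "Trust",
}


def forward_reply(session: Session, next_hop: str) -> str:
    """Accept the reply only if its route leads back to the session's ingress zone."""
    reply_zone = ZONE_OF_NEXT_HOP[next_hop]
    if reply_zone != session.ingress_zone:
        return "drop: forwarded to different zone"
    return "forward via " + next_hop


session = Session(ingress_zone="site-to-site-VPN", egress_zone="Trust")

# Route lookup in the internal VR after un-NAT: 10.0.0.57 matches 10.0.0.0/8.
print(forward_reply(session, "core router"))
# Route lookup that would be needed for the reply to leave via the VPN.
print(forward_reply(session, "tunnel.8000"))
```

Under these assumptions the first lookup produces the zone-change drop and the second forwards normally, which matches the counter seen above.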

It would be interesting to see the output from the packet trace if you have a chance to perform it.

P.S. I would suggest using mentions ("@<name>"), which help the mentioned person follow the topic when there is a new post 🙂

07-06-2022 12:01 PM - edited 07-06-2022 12:03 PM

@aleksandar.astardzhiev Yeah... just venting more than anything in the previous post. PA support hasn't been any help and doesn't seem to understand the problem. I used to do complicated NATing like this on Mikrotik devices all the time. And I haven't been able to find any good PaloAlto packet flow diagrams (there are 2 versions of one diagram, but they only show destination NAT and not source NAT or, more importantly, where un-NATing takes place).

I think the root of the problem is that both NATing and un-NATing take place on the ingress interface, instead of un-NATing taking place on the egress interface. Therefore, when you try to do inbound source NAT'ing (from an external routing table), the return packet always gets un-NATd before reaching the outbound interface (in the internal routing table). The session for the original incoming packet is marked as arriving from an external interface (/VPN). But the return packet for that session is un-NATd and then tries to route back to the internal interface, causing a zone forwarding error. As in the following:

https://knowledgebase.paloaltonetworks.com/KCSArticleDetail?id=kA10g000000Cm1GCAS

I think that explains the "flow_fwd_zonechange" error in the global counters. And what is really happening in packet flow diagram is this:

07-07-2022 01:58 AM

Hi @Adrian_Jensen ,

That is my assumption as well for the "flow_fwd_zonechange" error.

On the other hand, I have a similar setup that is working. It is a little different from your case, but I think the concept is the same:

- I have a FW with two virtual routers (VRs), each connected to a different ISP as primary and backup. In each VR there is a default route pointing to the relevant ISP.

- I have a server in a network associated with the primary VR, which needs to be publicly reachable over both the primary and the backup ISP. So I configured two destination NAT rules translating the public IP from each ISP to the server. For the primary VR this works fine, because the server is in the primary VR and both directions work.

- Traffic from the backup ISP is also NAT-ed, routed to the primary VR and forwarded to the server, but the return traffic is dropped, because it tries to follow the default route from the primary VR.

- For that reason I have configured twice NAT for the NAT rule from the backup ISP:

  - translating the destination public IP to the server's private IP

  - translating the source public IP to the FW IP assigned to the backup outbound interface (just to be sure the server will follow its default route to the FW)

- In the backup VR I have a route for the server network with next-vr primary.

- In the primary VR I have a route for the FW IP assigned to the backup outbound interface with next-vr backup.

And this is working. In my case there is monitoring that is constantly pinging the two public server IPs. Initially the ping probes over the backup ISP were dropped with the same error, "flow_fwd_zonechange". After that I realized that the return traffic was taking the primary default route (because the primary VR had not failed over to backup), so for the monitoring to work I had to do the source NAT. At first I still had blocked traffic with the same error, but I think it was solved once I cleared all sessions.

So as you can see I have done exactly what you are trying to do - NATing the source and routing it between VRs.

For that reason I asked if this is constant traffic, which you may need to clear in order for the source nat and the next-vr routes to work.

Another question: what FW version are you running? My solution is working on 9.1, so I wonder whether you might be hitting a bug if you are running 10.1...

I still believe it will be very valuable to consider the packet tracing. Do you have a chance to perform it?

07-07-2022 06:25 PM

@aleksandar.astardzhiev I think I understand your setup, but I need to ponder it a bit to apply your fix to mine... Like you, I have 2 ISPs, 3 VRs (one for each ISP and an internal VR), 2 DMZs (one for third-party physical VPN routers, one for internet facing routers), 2 GlobalProtect portals/gateways, 17 security zones, and 70+ IPSec VPNs... so it all gets a bit confusing...

At one point I did try reversing the methodology and source NAT outbound with bidirectional turned on, but I couldn't have bidirectional source NAT and destination NAT at the same time (interface error'd, refused to commit). This particular traffic will all be inbound anyways. For now I have had to give up and install PBFs to force the return traffic from the internal VR out the VPN tunnels. We had been trying to get the double-NAT working for 2 weeks without it and couldn't. Had to get something working so the vendors could start testing across the VPN to internal servers.

We are currently running 9.1.13-h3. There was both intermittent and constant traffic (multiple pings a second) across the VPNs while I was testing and making changes. So I deliberately disabled the IPSec/IKE for a couple of minutes to terminate any existing sessions in the PA and on the vendor side. I haven't been able to do a packet trace as of yet, been buried in other issues...
