Log Processing / DB connectivity problem


05-31-2018 12:31 PM

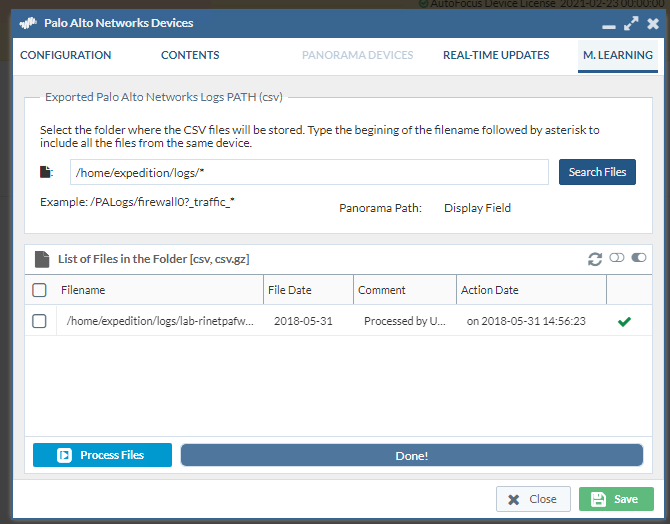

New issue: I am able to "see" the Panorama and the firewalls that it controls, and the log export from those firewalls is working. However, when I try to process those logs via the "Process Files" button under the M.Learning tab of the device, I get the following output in the /tmp/error_logCoCo file.

root@Expedition:~# cat /tmp/error_logCoCo

/opt/Spark/spark/bin/spark-submit --class com.paloaltonetworks.tbd.LogCollectorCompacter --deploy-mode client --supervise /var/www/html/OS/spark/packages/LogCoCo-1.2.4-SNAPSHOT.jar MLServer='198.18.2.73', master='local[1]', debug='false', taskID='62', user='admin', dbUser='root', dbPass='paloalto', dbServer='198.18.2.73:3306', timeZone='Europe/Helsinki', mode='Expedition', input='010108000490:8.0.3:/home/expedition/logs/lab-rinetpafw-1_traffic_2018_05_31_last_calendar_day.csv', output='/datastore/connections.parquet', tempFolder='/datastore'

---- CREATING SPARK Session:

warehouseLocation:/PALogs/spark-warehouse

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/Spark/extraLibraries/slf4j-nop-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/Spark/spark-2.1.1-bin-hadoop2.7/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.helpers.NOPLoggerFactory]

+--------------------+------------+--------+--------------------+
|             rowLine|    fwSerial|panosver|             csvpath|
+--------------------+------------+--------+--------------------+
|010108000490:8.0....|010108000490|   8.0.3|/home/expedition/...|
+--------------------+------------+--------+--------------------+

8.0.0:/home/expedition/logs/lab-rinetpafw-1_traffic_2018_05_31_last_calendar_day.csv

LogCollector&Compacter called with the following parameters:

Parameters for execution

Master[processes]:............ local[1]

User:......................... admin

debug:........................ false

Parameters for Job Connections

Task ID:...................... 62

My IP:........................ 198.18.2.73

Expedition IP:................ 198.18.2.73:3306

Time Zone:.................... Europe/Helsinki

dbUser (dbPassword):.......... root (************)

projectName:.................. demo

Parameters for Data Sources

App Categories (source):........ (Expedition)

CSV Files Path:.................010108000490:8.0.3:/home/expedition/logs/lab-rinetpafw-1_traffic_2018_05_31_last_calendar_day.csv

Parquet output path:.......... file:///datastore/connections.parquet

Temporary folder:............. /datastore

Exception in thread "main" com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at com.mysql.cj.jdbc.exceptions.SQLError.createCommunicationsException(SQLError.java:590)

at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:57)

at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:1606)

at com.mysql.cj.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:633)

at com.mysql.cj.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:347)

at com.mysql.cj.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:219)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$createConnectionFactory$1.apply(JdbcUtils.scala:59)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$createConnectionFactory$1.apply(JdbcUtils.scala:50)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:59)

at org.apache.spark.sql.execution.datasources.DataSource.write(DataSource.scala:518)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:215)

at org.apache.spark.sql.DataFrameWriter.jdbc(DataFrameWriter.scala:446)

at com.paloaltonetworks.tbd.TaskReporter.Print(TaskReporter.scala:72)

at com.paloaltonetworks.tbd.LogCollectorCompacter$.main(LogCollectorCompacter.scala:385)

at com.paloaltonetworks.tbd.LogCollectorCompacter.main(LogCollectorCompacter.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:743)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: com.mysql.cj.core.exceptions.CJCommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.cj.core.exceptions.ExceptionFactory.createException(ExceptionFactory.java:54)

at com.mysql.cj.core.exceptions.ExceptionFactory.createException(ExceptionFactory.java:93)

at com.mysql.cj.core.exceptions.ExceptionFactory.createException(ExceptionFactory.java:133)

at com.mysql.cj.core.exceptions.ExceptionFactory.createCommunicationsException(ExceptionFactory.java:149)

at com.mysql.cj.mysqla.io.MysqlaSocketConnection.connect(MysqlaSocketConnection.java:83)

at com.mysql.cj.mysqla.MysqlaSession.connect(MysqlaSession.java:122)

at com.mysql.cj.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:1726)

at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:1596)

... 21 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.cj.core.io.StandardSocketFactory.connect(StandardSocketFactory.java:202)

at com.mysql.cj.mysqla.io.MysqlaSocketConnection.connect(MysqlaSocketConnection.java:57)

... 24 more

...connecting to the DB port (3306) fails when going to the IP tied to the network interface. I *can*, however, get a response from the same port (3306) on the loopback. Assuming that this is the same thing as the "Expedition ML Address", trying to change it is like trying to change your wife's mind about something... I put in "loopback", click save, and it seems to take until you reload the page. Is there a process I can stop, change the address, and restart, so that the change actually takes effect?
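For anyone wanting to reproduce the check described above, here is a minimal sketch in Python that tries a plain TCP connection to port 3306 on both addresses. The helper name `port_open` and the 2-second timeout are my own choices for illustration, not part of Expedition; the two addresses are the ones from the post.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        # create_connection raises OSError on refusal, timeout, or no route.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Interface IP and loopback, as described in the post.
    for host in ("198.18.2.73", "127.0.0.1"):
        state = "open" if port_open(host, 3306) else "refused or unreachable"
        print(f"{host}:3306 -> {state}")
```

If the loopback reports open while the interface IP does not, that would be consistent with MySQL listening only on 127.0.0.1, which matches the "Connection refused" at the bottom of the stack trace.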


05-31-2018 12:58 PM - edited 06-01-2018 11:33 AM

I went and changed the /etc/mysql/my.cnf file to allow the DB to bind to all interfaces, as an interim fix, and it seems to have worked.

# Instead of skip-networking the default is now to listen only on

# localhost which is more compatible and is not less secure.

bind-address = 0.0.0.0

#changed from 127.0.0.1

I am seeing the Process Files complete as expected.


06-01-2018 02:50 AM

The next release (version 1.0.86) will override the my.cnf file with that change. For custom values, we will suggest creating a file at /etc/mysql/conf.d/override.cnf, so that they are not overwritten by the next upgrade.
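As a rough sketch of what such an override file might contain: the path comes from the reply above, and the `bind-address` value is the one from the interim my.cnf edit. Placing it under the `[mysqld]` section, and my.cnf actually including the /etc/mysql/conf.d/ directory, are assumptions to verify on your own install.

```ini
# /etc/mysql/conf.d/override.cnf
# Assumes /etc/mysql/my.cnf includes this directory (check for an !includedir line).
[mysqld]
# Listen on all interfaces instead of only 127.0.0.1.
bind-address = 0.0.0.0
```

MySQL reads `bind-address` only at startup, so the service has to be restarted before the change takes effect.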
