- Access exclusive content

- Connect with peers

- Share your expertise

- Find support resources

Click Preferences to customize your cookie settings.

Unlock your full community experience!

Best Practices for scripting RQL queries

- LIVEcommunity

- Products

- Cloud Native Application Protection

- Prisma Cloud

- Prisma Cloud Articles

- Best Practices for scripting RQL queries

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Printer Friendly Page

- Mark as New

- Subscribe to RSS Feed

- Permalink

on 12-26-2023 10:09 AM - edited on 11-07-2024 11:16 AM by RPrasadi

By Paul Burega, CSE Team Lead

and

Adam Hamilton-Sutherland, Customer Success Engineer

Introduction

Prisma Cloud collects data about cloud resources in your cloud accounts and allows extracting information about those cloud resources such that answers to common security questions can be answered, such as show me ec2 volumes that are not encrypted. These queries are written in Resource Query Language (RQL), and can be debugged and run on the Investigate page in Prisma Cloud.

-

Allows interactively running a query and manually examining or downloading the results.

-

The user interface currently shows the first 100 results, a “load more” button will retrieve the next 100 results.

-

The download option is limited to a maximum of 100,000 results.

While a great environment for debugging and validating queries with a small number of results, this environment does not easily lend itself to batch operation and integration with other security tools in the enterprise. A larger tenant with many cloud accounts and cloud resources may have tens of thousands of results or more and may require more than 100,000 results.

This LIVEcommunity article demonstrates the use of several Python libraries that are provided by Prisma Cloud to overcome the above two limitations. This requires the use of a command line interpreter in your environment (Linux, MacOS, or Windows with Windows Subsystem for Linux).

The first library is designed to create a CSV format file just like the download results, but allowing more results to be downloaded. The second library is designed to give you back the JSON formatted data for the query results, allowing you to pass this information on to other applications.

1.0 The PCPI Python Package

Palo Alto Networks provides a GitHub library: Prisma Cloud Python Integration - PCPI, which contains our Python3 toolkit for Prisma Cloud APIs and returns a CSV formatted file.

1.1 Python Best Practices: Create Virtual Environments

As part of Python's best practices, we create a virtual environment to install all our libraries. This allows us to keep installed libraries separate for each project we are working on. Within your command prompt, enter the command: python3 -m venv env

This command creates a virtual environment named “env”. We now need to enter that virtual environment. To do this, enter the command: source env/bin/activate

1.2 Install Python Libraries

To install PCPI for the first time:

$ python3 -m pip install pcpi

Collecting pcpi

Downloading pcpi-0.1.0-py3-none-any.whl (15 kB)

…

Installing collected packages: pyyaml, charset-normalizer, certifi, idna, urllib3, requests, pcpi

Successfully installed certifi-2022.9.24 charset-normalizer-2.1.1 idna-3.4 pcpi-0.1.0 pyyaml-5.4.1 requests-2.28.1 urllib3-1.26.12

To update to the latest release of PCPI:

$ python3 -m pip install pcpi —-upgrade

1.3 Obtain a Prisma Cloud Access Key

Before using the Prisma Cloud User Interface, you must log in with your userid and password. Your organization may require you to use a single sign-on such as Okta or Azure AD.

In order for your script to authenticate against the Prisma Cloud API, you will need to obtain an Access Key ID and a Secret Access Key from the Prisma Cloud console. You cannot use single sign-on with a script and the API.

You will need to get authorization from your Prisma Cloud Administrator to have the ability to create an Access Key. Please follow the best practices for security listed in:

Scripting and Automation Best Practices with Prisma Cloud CSPM JWT

Security best practices from your organization should be followed with password expiration of your access key. Do not create Access Keys without expiry dates, as these are a security exposure. You are allowed two access keys per permission level at any time: active key and next active key. Please ensure that access keys are rotated frequently.

2.0 Generate a Prisma Cloud CSV file from RQL

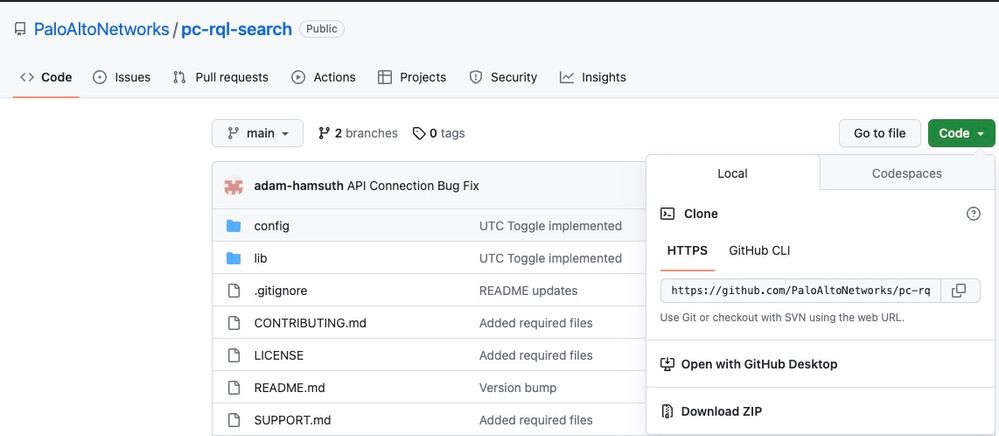

For performing an RQL query where you are looking for a CSV file identical to the one you can download from the Prisma Cloud User Interface, we have the library: PaloAltoNetworks / pc-rql-search. This script contains a README that details how to install the script's dependencies and how to set up the configuration file. You can download the contents of the script from github by selecting the green ‘Code’ button and ‘Download ZIP’ entry’.

Figure 1: PC-RQL-Search_palo-alto-networks

This library uses a configuration file, configs.yml in the config directory:

prisma_cloud:

username: "01234567-0123-4567-89ab-9876543210"

password: "my-secret-key-password"

customer_name: "MyCompany"

api_base: "api3.prismacloud.io"

filename: "Xout.csv"

rql: "config from cloud.resource where resource.status = Active and api.name = 'aws-ec2-describe-instances' "

utc: TRUE

The following variables are used:

username: the Access Key ID

password: the Secret Key

customer_name: a name used in the output file

api_base: specify the Prisma Cloud Stack that your account is on such as app2 or app.eu

filename: name of the CSV output file

rql: provide the RQL from the investigate page that you want to run and generate a CSV file

utc: TRUE provides the Date field in UTC .z time, FALSE gives time in Timezone format. The time zone will be based on the timezone on the machine running the script.

Once you have filled out the configuration file, the script will be ready to run. This script is designed to give you results that mimic the CSV download from the Prisma Cloud user interface, while not having a limit to the number of records returned.

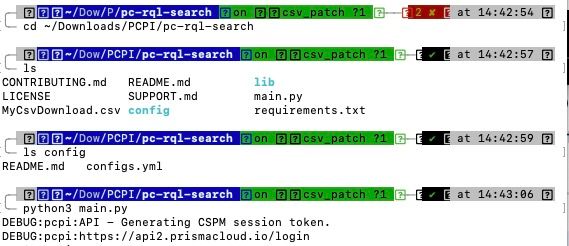

To run the script the first time, ensure that section 1.0 was followed to install PCPI. Open a command prompt and ensure you are in the same folder/directory that the script from section 2.0 is in. Create config.yml before your first run. Then run the following command: python3 main.py

Figure 2: Script_palo-alto-networks

The script will collect the results of the RQL query and create a CSV file containing the default fields. You can add additional fields by using the ‘addcolumn’ function in RQL. You do not have to make changes to the script as it is capable of processing extra fields being returned.

Once the script is done, a file with the name you specified in the configuration will be created. This CSV file contains the complete results of your RQL query.

2.1 100,000 DB Record Limitation

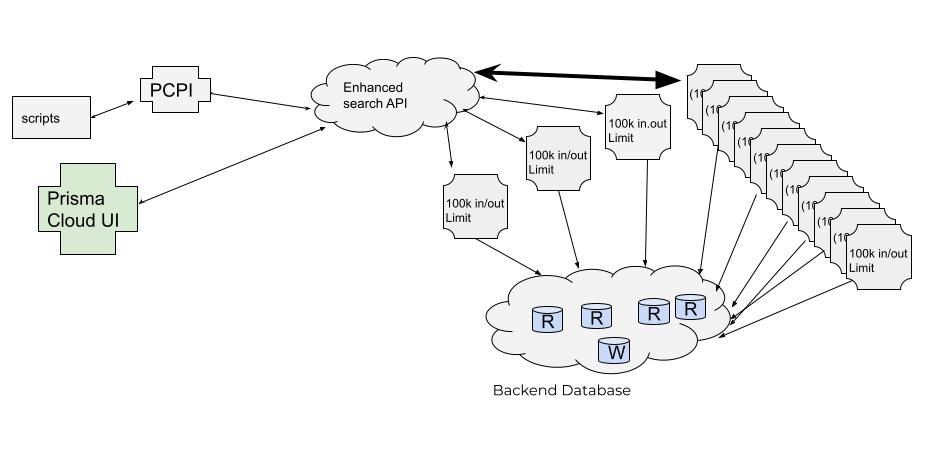

This script uses the paginated API, which queries up to 10 cloud accounts and returns the results from those cloud accounts. The paginated API is designed to work with a large number of Database records spread across a large number of cloud accounts, as opposed to a small number of cloud accounts with a large number of records in each.

Figure 3: Paginated API_palo-alto-networks

Note that there still is a limit of 100,000 records retrieved per 10 cloud accounts. If you use a json.rule, this will filter from the maximum of 100,000 records retrieved from the 10 cloud accounts. This means you cannot retrieve 100,000+ records per 10 accounts and then return 100,000 records passing the json.rule through the API.

If you have 35 cloud accounts, then the maximum number of Database records that can be retrieved is 35/10 * 100,000 =400,000 records. The backend searches a random 10 accounts at a time, and each time you run the query, the random 10 accounts will be different

|

Cloud Accounts 1-10 |

Up to 100,000 results |

|

Cloud Accounts 11-20 |

Up to 100,000 results |

|

Cloud Accounts 21-30 |

Up to 100,000 results |

|

Cloud Accounts 31-35 |

Up to 100,000 results |

|

Total for Cloud Accounts 1-35 |

Up to 400,000 results |

Figure 4: Random Account Query_palo-alto-networks

2.2 Large Cloud Accounts

A large account is one in which a particular api you wish to query has >9500 values in it.

Breaking a single query into two queries can help when you have several large accounts. The first query would run against 3-6 large accounts, and the second query would run against the remaining accounts.

This can be accomplished by using the “cloud.account IN (x,y,z)” followed by the second run with “cloud.account NOT IN (x,y,z)”.

2.3 Fixing Errors

The file configs.yml has a bad format if you get:

File "/Users/abc/Downloads/PCPI/pc-rql-search-main/lib/config_helper.py", line 7, in __init__

config = self.read_yml('configs')

^^^^^^^^^^^^^^^^^^^^^^^^

Ensure you have valid input data, the quote marks are correct and the last line in configs.yml should be:

utc: TRUE

3.0 Python Script to return JSON data

There are times where the limited data fields of the above CSV file are not sufficient for what is required of the data. Cloud objects can contain a lot of nested data, and this is best presented using JSON.

For a use case that requires the complete API JSON data, this section presents another Prisma Cloud script that will return the raw results for each object in JSON format. A user program can then examine the JSON data to find the exact information about the resulting cloud objects. .

An example use case would be to correlate the Prisma Cloud data with an external data source and enhance the data with additional fields that your organization could use to determine ownership of the actions on the data.

As an example, you may have an internal database that contains project and owner information, and you need to retrieve Prisma Cloud data filtered or sorted by that external data source.

Please note that the 100,000 record limit described in 2.1 above applies in this script as well. In this script, you have the capability to run 2 or more queries and collect all the results in one run should you choose to handle some really large accounts.

3.1 Conventions Used in Python Script

Comments are lines starting with “#”

#This is a comment to tell us what the script is doing here

This prints the raw json returned by the API. It is not very readable in most cases, but is very compact:

print(res.json())

This will print all the json return by the API, but will format the print, and indent the json:

print(json.dumps(res.json(), indent=4))

This will print only the first json object returned by the API, formatting and indenting the json:

print(json.dumps(res.json()[0], indent=4))

For purposes of the demo script, we are writing the JSON data to a file that can be viewed at a later date and will persist after you close your terminal. This is done at the end of the script where we open a file, then use the json.dump() function to output our JSON on data to a file.

3.2 Sample Script which runs RQL and returns a file containing JSON results

Using your favorite code editor, such as VS Code, create a file named prisma_cloud_search.py (the “.py” extension is what makes this a script, “.py.docx” is not an executable script) with the following Python content:

from pcpi import session_loader

import json

#Author: Adam Hamilton-Sutherland - Palo Alto Networks

#Title: Prisma Cloud RQL Search

#Description: Runs a Prisma Cloud RQL search via API calls

# and collects all results through pagination.

#When the script is done, results are saved to a json file.

#

#The contents of the script are stored in memeory before being written to a

# file. If your RQL query is exceptionally large, your machine may run out of

# memeory and the script could crash before the output file is created.

# You can also run out of disk space for the output file.

#SETUP PCPI Session

session_manager = session_loader.load_config('prisma_creds.json')[0]

session = session_manager.create_cspm_session()

#Create API Payload for the config search endpoint

# 2000 objects per API call is considered the best practice for this REST API.

#There is no limit to how many total results this API will return.

#Eg, in a large Tenant, you may receive millions of results.

limit = 2000

#PRISMA CLOUD RQL CALL

rql = "config from cloud.resource where resource.status = Active \ AND api.name = 'aws-ec2-describe-instances'"

payload = {

"query": rql,

"limit": limit,

"timeRange": {

"relativeTimeType": "BACKWARD",

"type": "relative",

"value": {

"amount": 24,

"unit": "hour"

}

},

"withResourceJson": True,

"heuristicSearch": True

}

#Make initial API request to the search endpoint to kick off the RQL

# query

response = session.request('POST', f'/search/config', json=payload)

#To get all the results from the RQL query, you must paginate

# through the results.

# A few variables are needed for the pagination logic and for

# processing the results

total_rows = 0

complete_res_list = []

complete_res_dict = response.json()

#res_data var used for while loop

res_data = response.json()['data']

#Add the first API call's response to the complete list of responses

complete_res_list.extend(res_data.get('items'))

#Update variables used for pagination

retrievedRows = res_data.get('totalRows')

total_rows += retrievedRows

counter = 0

while 'nextPageToken' in res_data:

#update payload

payload.update({'pageToken': res_data.get('nextPageToken')})

#call page endpoint

response = session.request('POST',

f'/search/config/page',

json=payload)

counter += 1

#update res_data with the paginated response

res_data = response.json()

#Add results from each page API call

complete_res_list.extend(res_data.get('items'))

retrievedRows = res_data.get('totalRows')

total_rows+= retrievedRows

#Update response dict to be the some format as the

# 'data' object in a typical RQL res

complete_res_dict['data'].update(

{'totalRows':total_rows, 'items': complete_res_list}

)

with open('rql_json_response.json', 'w') as outfile:

json.dump(complete_res_dict,outfile, indent=4)

3.3 Running the Python Script

When the script is run for the first time, answer the following prompts:

- Prisma Cloud URL

- your Access Key

- your Secret key

- name of your Prisma Cloud tenant

- enable/disable certificate verification.

The last two questions are both optional and can be left blank. Simply hit the enter key to skip the question.

If you have closed your command prompt after installing PCPI, you may need to re-enter your virtual environment. The command is: source env/bin/activate

Open up your terminal and type the following command:

$ python3 prisma_cloud_search.py

No credentials file found. Generating...

Enter credentials for the tenant

Enter tenant name or any preferred identifier:

log-my-first-script

Enter tenant url. (ex: https://app.ca.prismacloud.io)

Adjusted URL:

Enter tenant access key:

00000000-0000-0000-0000-000000000000

Enter tenant secret key:

0000000000000000000000000

API - Validating credentials

SUCCESS

…

After setup, the script will make API calls to the Prisma Cloud RQL Search endpoint. A number of APIs calls will be made as this endpoint is paginated, as per current Rest API best practices for return payload size and response time. Having a paginated API allows the query to return a very large number of results, much larger than is supported in the Prisma Cloud UI. (see RESTful web API design)

DEBUG:pcpi:API - Generating CSPM session token.

DEBUG:pcpi:https://api2.prismacloud.io/login

INFO:pcpi:SUCCESS - 0.779 seconds

DEBUG:pcpi:https://api2.prismacloud.io/search/config

INFO:pcpi:SUCCESS - 1.443 seconds

DEBUG:pcpi:https://api2.prismacloud.io/search/config/page

INFO:pcpi:SUCCESS - 0.457 seconds

DEBUG:pcpi:https://api2.prismacloud.io/search/config/page

INFO:pcpi:SUCCESS - 0.473 seconds

DEBUG:pcpi:https://api2.prismacloud.io/search/config/page

INFO:pcpi:SUCCESS - 0.367 seconds

DEBUG:pcpi:https://api2.prismacloud.io/search/config/page

INFO:pcpi:SUCCESS - 0.357 seconds

$

At this point, the json results of your RQL are stored in the file: rql_json_response.json. This file contains all data that was returned from the Prisma Cloud API.

As the entire script is included, users may decide to process the data returned in the script and change which data is included in the output.

4.0 Conclusion

You now have experience using the Python programming language to consume Prisma Cloud APIs and to process the resulting data. Literacy in scripting languages such as Python unlocks a whole new set of capabilities for automating workflows and generating customized reports for your most important use cases.

References

- Prisma Cloud API documentation

- Prisma Cloud Python Integration - PCPI

- Scripting and Automation Best Practices with Prisma Cloud CSPM JWT

About the Authors

Paul Burega and Adam Hamilton-Sutherland are cloud security engineers specializing in Cloud Security Posture Management. Paul and Adam utilize collaborative approaches to break down complex problems into solutions for global enterprise customers and leverage their multi-industry knowledge to inspire success.

- 6091 Views

- 0 comments

- 2 Likes

-

Access Control

1 -

Access Key

1 -

Active Directory Groups

1 -

Agentless

2 -

Agentless VM Scanning

1 -

AI

1 -

AISPM

1 -

Amazon ECS

1 -

Amazon Eventbridge

1 -

API

1 -

Application Programming Interface

1 -

ApplicationSecurity

1 -

Approach

1 -

Asset inventory

1 -

Asset Inventory Dashboard

1 -

Asset Inventory. AWS EKS

1 -

Assets

1 -

Attack path vectors

1 -

Audit

1 -

audit stream

1 -

Authentication

1 -

Authorization

1 -

Auto Map

1 -

auto-remediation

2 -

AWS

1 -

AWS Account Onboarding

1 -

AWS Cloud Watch

1 -

AWS EC2

1 -

AWS Secrets Manager

1 -

Azure

4 -

Azure Cloud Accounts

1 -

Azure RBAC

1 -

Azure Sentinel

1 -

Azure Single Sign On (SSO)

1 -

Azure VM Image Scanning

1 -

Beginner to Intermediate Level

5 -

CICD

1 -

CLI command

2 -

cloud assets

1 -

Cloud Security

10 -

Cloud Security Posture Management

1 -

Cloud Security Posture Management (CSPM)

6 -

Cloud Workload Protection

1 -

Cloud Workload Protection Platform (CWPP)

4 -

Collection Scoping

1 -

compliance scanning

1 -

Compute

1 -

Compute Edition

4 -

Compute SaaS

1 -

Configuration

1 -

console

1 -

Container Defender

2 -

Container Image

1 -

Containers

1 -

Control Plane

1 -

cspm

2 -

Custom rules

1 -

CVE

2 -

CWP Token

1 -

CWPP

1 -

DaemonSet

1 -

Daemonsets

1 -

Dashboards

1 -

defender

1 -

Defender Deployment

5 -

Defender Upgrade

1 -

DevSecOps ShiftLeft

1 -

DevTools

1 -

docker swarm

1 -

EKS logs

1 -

Event Assisted Ingestion

1 -

Eventbridge

1 -

Events

1 -

FN

1 -

FP

1 -

GCP Organization Onboarding

1 -

General Articles

2 -

GitHub

1 -

GitOps

1 -

GKE

1 -

Helm Charts

1 -

Host Defender

1 -

host vulnerabilities

2 -

Hosts

1 -

Hub account

1 -

IAC

1 -

IAM

1 -

IAM misconfigurations

1 -

Identity federation.

1 -

Image Scanning

1 -

Ingress

1 -

integration

1 -

Intermediate Skill Level

2 -

Jfrog Artifactory

1 -

JIT

1 -

JWT

1 -

JWT Token

1 -

Kubernetes

6 -

Kubernetes auditing

1 -

Kubernetes Clusters

1 -

lateral movement

1 -

Least Privilege

1 -

Least privilege Principle

1 -

Log4j

1 -

MFA (Multi-Factor Authentication)

1 -

Microsoft Azure subscriptions

1 -

Microsoft Entra.

1 -

Nested Account Groups

1 -

Network Exposure

1 -

NGINX

1 -

NVD

1 -

onboarding

1 -

Onebox

1 -

Organizing Cloud Resources

1 -

PAM (Privileged Access Management)

1 -

PCEE

1 -

playbooks

2 -

prioritize risk

4 -

Prisma "cloud code security" (CCS) module

1 -

Prisma Cloud

19 -

Prisma Cloud Asset Inventory Dashboard

1 -

Prisma Cloud Code Security

1 -

Prisma Cloud Compute

6 -

Prisma Cloud Compute Edition

7 -

Prisma Cloud Compute Edition Administrator’s Guide

1 -

Prisma Cloud Enterprise Edition

2 -

Prisma Cloud Intelligence Stream(IS)

3 -

Prisma Cloud Intelligence Stream(IS) feed

2 -

Prisma Cloud SaaS API

1 -

Prisma Cloud Terraform Provider

1 -

PrismaCloud

1 -

privilege escalation

1 -

Radar

1 -

RBAC (Role-Based Access Control)

1 -

Recursive Hierarchy

1 -

reference “Prisma Cloud Administrator’s Guide”

1 -

reference “Prisma Cloud Compute Edition Administrator’s Guide”

2 -

Risk Prioritization

1 -

RQL

1 -

Runtime Security

3 -

SDLC

1 -

Secrets

1 -

SecureDevelopment

1 -

Security

1 -

Security Posture

1 -

security-relevant events

1 -

Serverless

1 -

Service Account

1 -

SSO (Single Sign-On)

1 -

Terraform

1 -

thread feed

4 -

threat intelligence

4 -

Token

1 -

Visibility

1 -

VM

1 -

VM Scanning

1 -

VSCode

1 -

vulnerabilities

1 -

vulnerability detection

7 -

Vulnerability Management

7 -

Vulnerability Protection

1 -

WAAS

1 -

Webshell

1 -

Windows

2 -

XSOAR

2

- Previous

- Next